Does SEO use machine learning?

In this post, I’ll walk through the analysis of Google Search Console data combined with a machine learning clustering technique to provide an indication of what pages can be optimized to improve the organic traffic of a company website. I will also highlight the lessons I learned while using machine learning for an SEO task.

Interestingly, website owners when I propose to use their data are usually very relieved that AI can take care of the mundane, repetitive SEO work like analyzing GSC data; this allows the clients of our SEO management service and our own team, to focus on more complex, value-adding work such as content writing, content enrichment, and monetization.

How does machine learning work?

This experiment is designed for anyone: no specific coding skill is required. A good grip on Google Sheets is more than enough to get you started in the data mining of your website’s GSC data.

We will use Orange, an open-source tool built on top of Python, for data mining and analysis that uses a visual programming front-end (a graphical user interface that lets you do what a developer would do using a Jupyter notebook environment and Python, yay!).

You can install Orange from Anaconda, a popular Python data science platform, or simply download it and install it from their website. We will also use data from a web crawler to extract information about the length of the title and the length of the meta description. This can be done using a WooRank account, Sitebulb or any other web crawler of your choosing.

What is machine learning in SEO?

Dealing with machine learning is indeed a paradigm shift. The basic idea is that we provide highly curated data to a machine and the machine will learn from this data, it will program itself and it will help us in the analysis by either grouping data points, making predictions or extracting relevant patterns from our dataset. Choosing the data points and curating the dataset, in machine learning, is as strategic as writing the computer program in traditional computer science. By deciding the type of data you will feed the machine you are transferring the knowledge required to train the machine. To do so, you need the so-called domain experts and when I started with this experiment I came across a tweet from Bill Slawski that indicated to me the importance of comparing search impressions to clicks on a page as the most valuable piece of data from the Google Search Console.

A3 One of my favorite GSC Data points is comparing search impressions to click on a page, if they vary widely is a sign that rewriting titles or snippets would be a good idea – less of a sign of success of an ongoing campaign, but helpful to know about. #SEOChat

— Bill Slawski ⚓ (@bill_slawski) January 10, 2019

I also spotted another valuable conversation on the topic between Aleyda Solis and Cyrus Shepard.

+1000 ?

— Aleyda Solis ??? (@aleyda) January 23, 2019

I especially enjoy revising/reoptimizing titles and meta descriptions from time to time, starting with those pages that are now in the top 3-5 positions but very low CTR. https://t.co/RElxn7jSiC

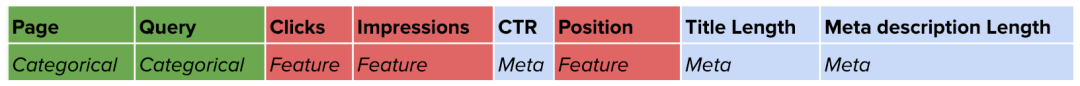

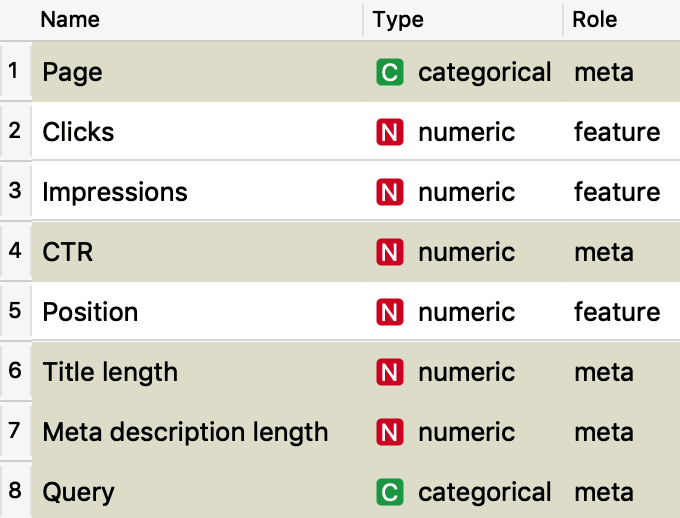

By reading this I decided to compile a dataset composed of the following attributes. The first 6 coming from GSC and the other 2 coming out of the crawling of the pages.

The overall idea, as explained by Bill Slawski, is to rewrite the title and the meta description of pages that receive a good number of impression and a low number of clicks.

“Willing to know more about what data is provided by Google Search Console? Read it all here on the WooRank’s Blog.”

As we learned from Aleyda another important aspect to winning the game is to focus only on pages that have already a strong position (between 3 and 5 she says). This is extremely important, as it will speed up the process and bring almost immediate results. Of course, the bracket might be different for smaller websites (in some cases working with pages with a position between 3 and 10 might also be valuable).

How do I get the data from Google Search Console into Google Sheet?

Luckily GSC provides fast and reliable access to your data via APIs, and you can use a Google Sheet Add On called searchanalyticsforsheets.com that automatically retrieve the data and stores it in Google Sheet without writing a line of code. It is free, super simple to use and well documented (kudos for the developing team ?).

If you are more familiar with Python you can also use this script by Stephan Solomonidis on GitHub that would do pretty much the same work with only a few lines of code.

In my dataset, I wanted to have both queries and pages in the same file. A page usually ranks for multiple intents and it is important to see what is the main query we want to optimize for.

How can I merge two datasets in one?

Aggregating data from the crawler with data from GSC can be done directly in Orange using the merge data widget that horizontally combines two datasets by using the page as a matching attribute. I used, instead, Google Sheets with a combination of ARRAYFORMULA (it will run the function on an entire column) and VLOOKUP (this does the actual match and brings both title length and meta description length in the same table).

=ARRAYFORMULA(VLOOKUP(A2:A,crawl_html!A6:C501,{1,2},false))

ARRAYFORMULA(VLOOKUP(search_key,range,index,[is_sorted]))

|

How to prepare data for machine learning

Data curation is essential to obtain any valid results with artificial intelligence. Data preparation also is different for each algorithm. Each machine learning algorithm requires data to be formatted in a very specific way and before finding the right combination of columns and yield useful insights I did several iterations. Missing data and wrong formatting (when migrating data in Orange in our case) have been issues to deal with.

Generally speaking for missing data there are two options, either remove the data points or fill it up with average values (there are a lot more options to consider but this is basically what I did in the various iterations). Formatting is quite straightforward, we simply want Orange to properly see each informative feature as a number (and not as a string).

How to create a dataset for machine learning

The dataset we’re working with is made of 15784 rows each one containing a specific combination of page and query. We have 3 informative features in the dataset (clicks, impression, and position) and 5 labels (page, query, CTR, title and meta description length). Page and query are categorical labels (we can group the data by the same query or by the same page). CTR is a formula that calculates clicks/impression * 100 and for this reason, is not an informative feature. Labels or calculated values are not informative: they don’t help the algorithm in clustering the data. At the same time, they are extremely useful to help us understand and read the patterns in the data.

What is k-Means Clustering algorithm in machine learning?

When looking at thousands of combinations of queries across hundreds of web pages selecting the pages that have the highest potential in terms of SEO optimization is an intimidating task. This is particularly true when you have never done such analysis before or when you are approaching a website that you don’t know (as we do – in most cases – when we start a project with one new client that is using our technology).

We want to be able to group the combination of pages that can be more easily improved by updating the title and the snippet that describes the article. We also want to learn something new from the data that we collected to improve the overall quality of the content that we will produce in the future. Clustering is a good approach as it will break down the opportunities in a limited number of groups and it will unveil the underlying patterns in the data.

A cluster refers to a collection of data points aggregated together by a certain degree of similarity.

How does k-Means Clustering work?

K-Means clustering is one of the simplest and most popular unsupervised machine learning algorithms. It will make inferences using only input features (data points like the numbers of impressions or the number of clicks) without requiring any labelled outcome.

K-Means will average the data by identifying a centroid for each group and by grouping all records in a limited number of clusters. A centroid is the imaginary centre of each cluster.

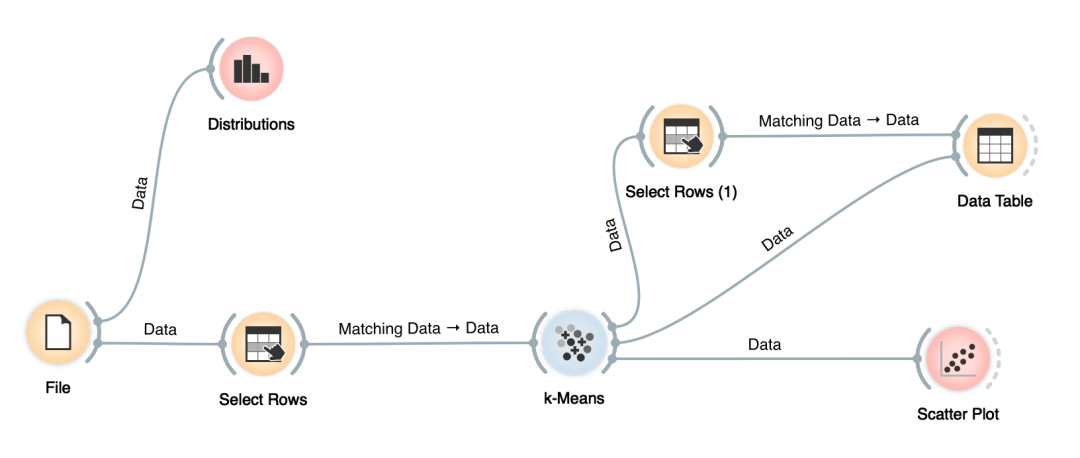

How do I create a workflow in Orange?

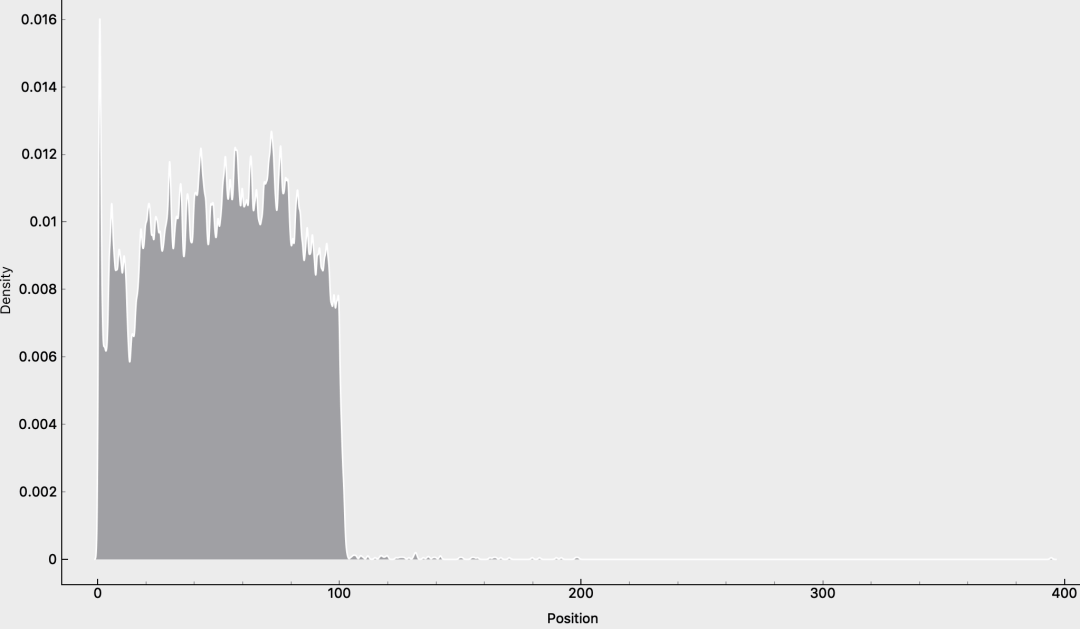

Here is how the flow looks like in Orange. We’re importing the CSV data that we have created using the File widget, we’re quickly analyzing the data using the Distribution Widget. We have the k-Means Widget at the centre of the workflow that receives data from the Select Rows Widget (this is a simple filter to work only on records that are positioned in SERP between 3 and 10) and sends the output to a Scatter Plot that will help us visualize the clusters and understand the underlying patterns. On another end, the k-Means sends the data to a Data Table widget that will produce the final report with the list of pages we need to work on and their respective queries. Here we also use a Select Rows widget to bring in our final report only the most relevant cluster.

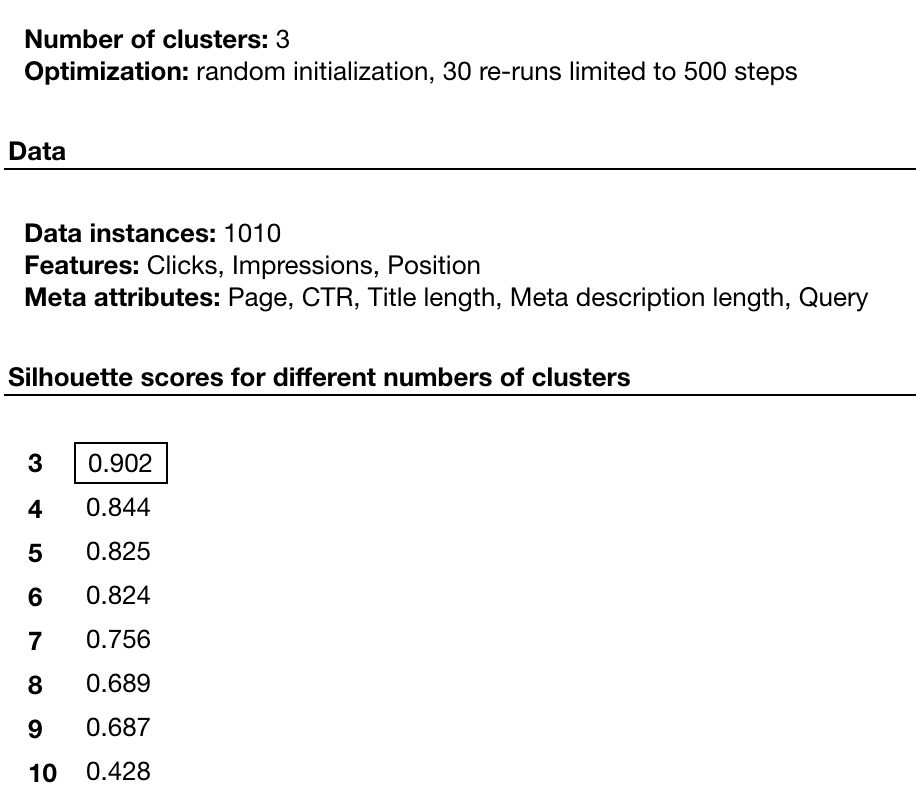

The silhouette score in k-Means helps us understand how similar each combination is to its own cluster (cohesion) compared to other clusters (separation).

The silhouette score ranges from 0 to 1 (a high value indicates that the object is well matched to its own cluster). By using this value the algorithm can define how many clusters we need (unless we specify otherwise) and the level of cohesion of each group. In our case 3 clusters represent the best way to organize our data and to prioritize our work. From the initial 15784 samples (the rows in our dataset) we have now selected 1010 instances (these are all the combinations with pages in position 3-10) that have been grouped by k-Means.

How is machine learning used in Google Search?

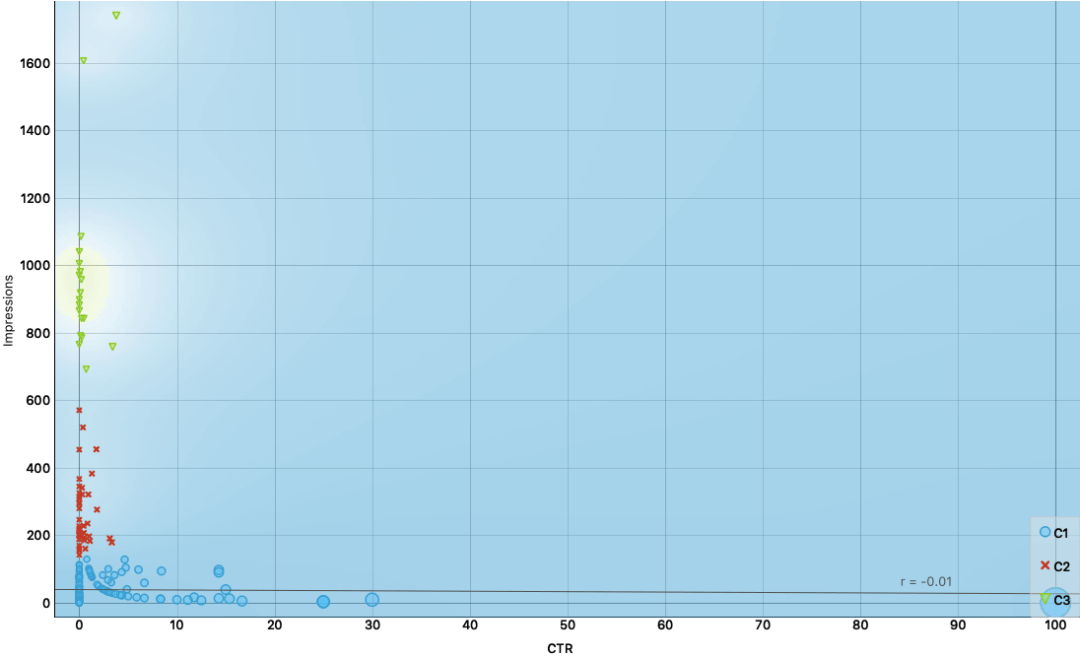

We will use Orange’s intelligent data visualization to find informative projections. In this way, we can see how the data has been clustered. The projections are a list of attribute pairs by average classification accuracy score that shows us the underlying patterns in our dataset. Here are the top 4 I have chosen to evaluate.

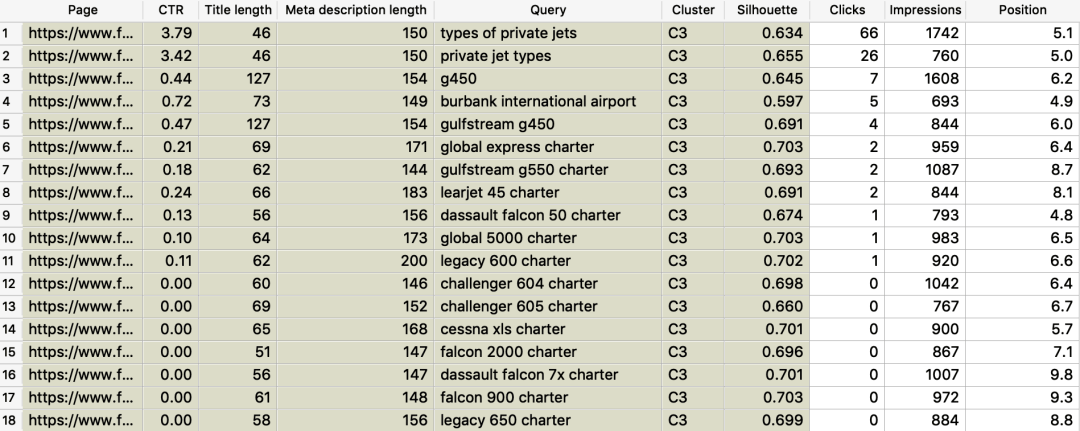

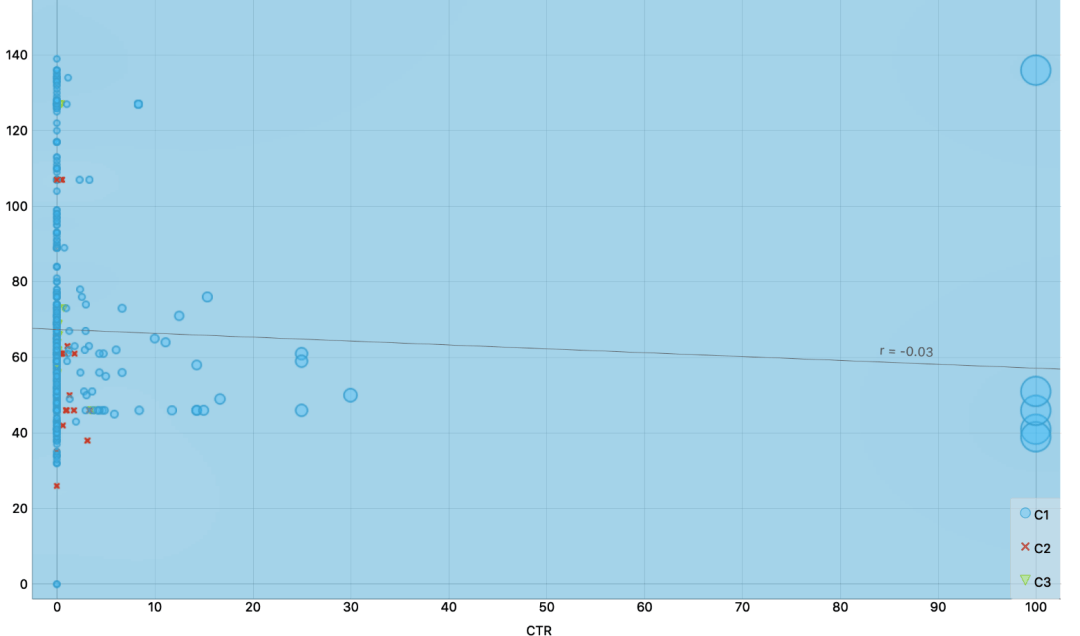

1. Focus on high impressions and low CTR and here is the list of pages to optimize

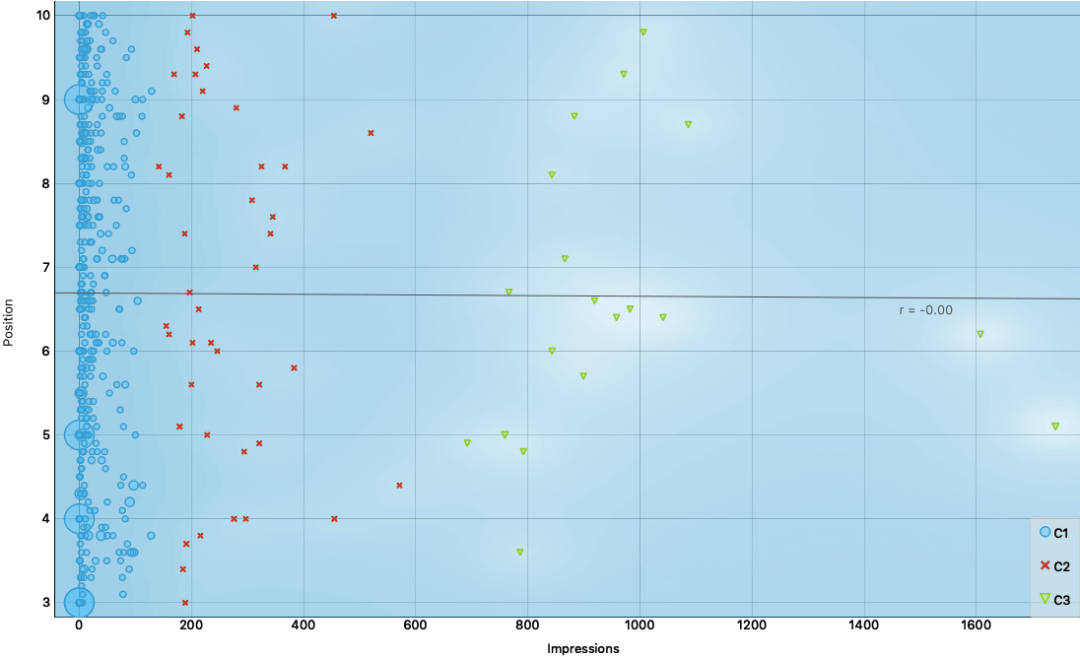

There is no point in working on cluster C1, either there are very few impressions or the CTR is already high. Where it hurts the most is on C3 and following we have the C2 cluster.

We have now a total of 56 combinations of pages and queries that really deserve our attention (C2 and C3). Out of this batch, there are 18 instances in C3 (the most relevant group to address) and this basically means working on 16 pages (2 pages are getting traffic from 2 queries each).

This is the list for our content team to optimize. New titles and improved meta descriptions will yield better results in a few weeks.

2. Positions don’t matter as much as impressions

Our three clusters are well distributed across all selected positions. We might prefer – unless there are strategic reasons to do otherwise – to improve the CTR of a page with a lower position but a strong exposure rather than improving the clicks on a higher ranking result on a low volume keyword.

3. Write titles with a length between 40 and 80 characters

Google usually displays the first 50–60 characters of a title tag. MOZ research suggests that you can expect about 90% of your titles to display properly when contained under 60 characters. From the data, we gathered we could see that, while the vast majority is working under 60 characters we can still get a healthy CTR with titles up to 78 characters and not shorter than 38 characters.

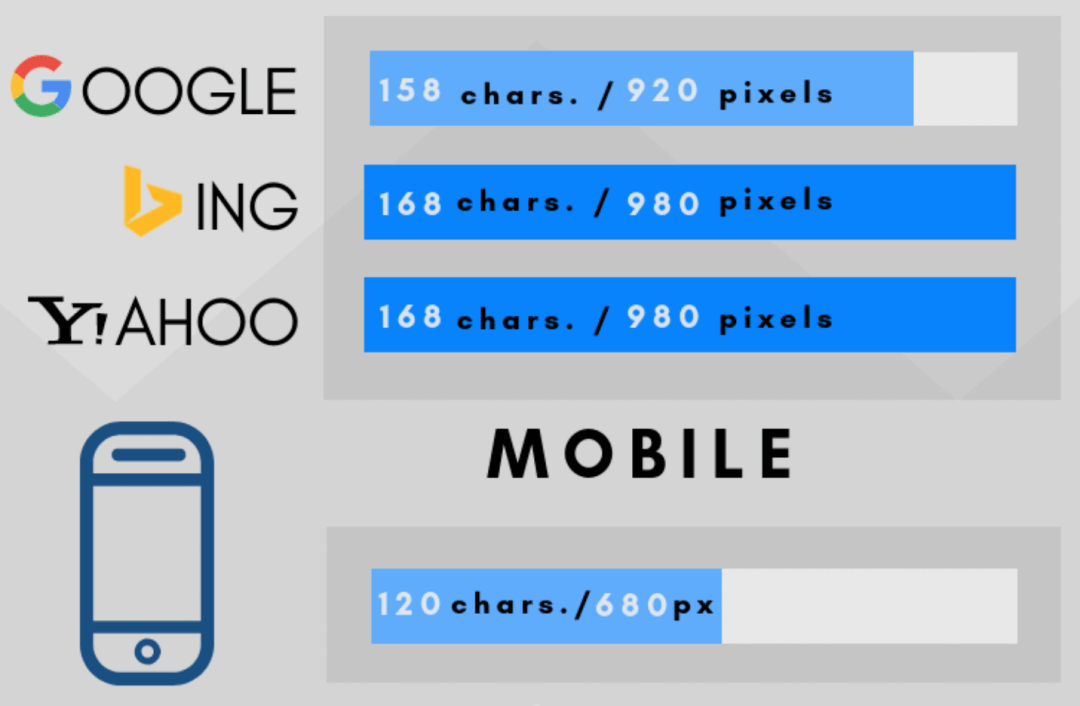

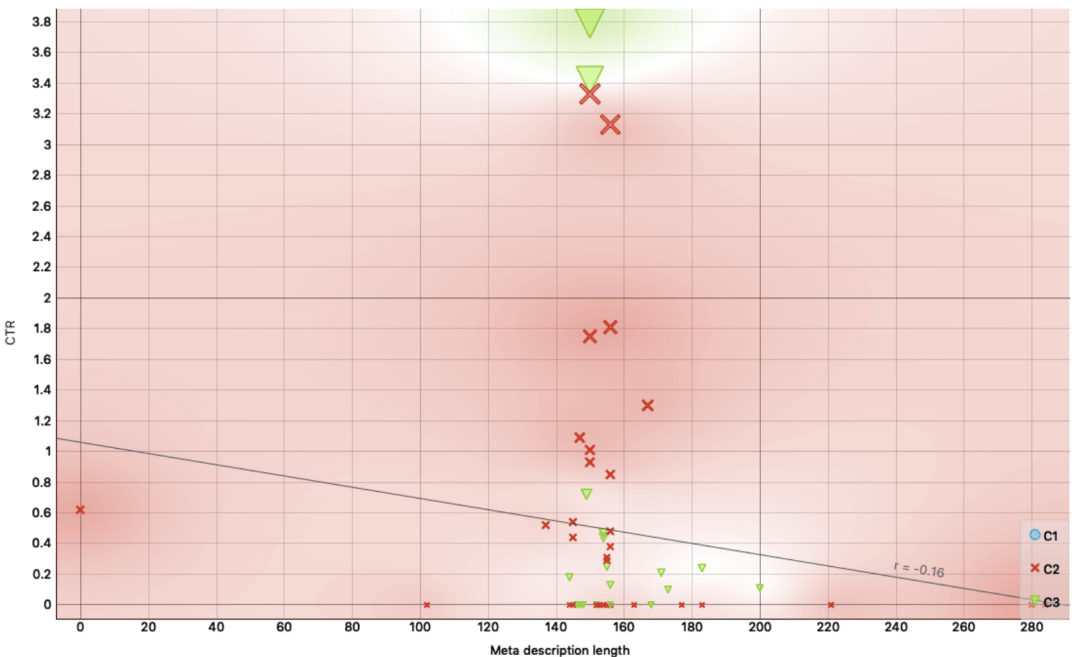

4. Write Meta Description with a length between 140 and 160 characters

At the beginning of May last year, the length of the meta description on Google has been shortened after the last update in December 2017, when the length was extended up to 290 characters. In other words, Google is still testing various lengths and if on a desktop it displays 920 pixels (158 characters) on mobile you will see up to 120 characters in most cases.

This means that the correct length is also dependent on the percentage of mobile users currently accessing the website. Once again we can ask the data what should be the preferred number of characters by looking at clusters C2 and C3. Here we can immediately see that the winning length is between 140 and 160 chars (highest CTR = bigger size of the shapes).

Make Your Website Smarter with AI-Powered SEO: just focus on your content and let the AI grow the traffic on your website!

What can I do with Machine Learning?

Machine Learning can help us across the entire SEO optimization workflow. Some areas are, though, based on my personal experience, more rewarding than others. Still, again – there is no one size fit all and, depending on the characteristics of your website, the success recipe might be different. Here is what I see most rewarding across various verticals.

- Automating Structured Data Markup

- Finding new untapped content ideas with the help of AI

- Automating Content Creation

- Creating SEO-Driven Article Outlines

- Crafting good page titles for SEO

- Generating meta descriptions that work

- Creating FAQ content on scale

Machine learning is using also to search and cluster keywords. You can find out more about keyword research using AI by seeing our last web story.

How machine learning is changing SEO?

These are really the first steps towards a future where SEOs and marketers have instant access to insights provided by machine learning that can drive a stronger and sustainable growth of web traffic without requiring a massive amount of time in sifting through spreadsheets and web metrics.

While it took a few weeks to set up the initial environment, to test the right combination of features and to share this blog post with you, now to process hundreds of thousands of combinations anyone can do it out in just a few minutes! This is also the beauty of using a tool like Orange that, after the initial setup, requires no coding skills.

We will continue to improve the methodology while working for our VIP clients, validating the results from this type of analysis and eventually improve our product to bring these results to an increasing number of people (all the clients of our semantic technology).

Keep following us and drop me a line to learn more about SEO Automation using AI!

Do you want to learn more about SEO Automation?

Check out Andrea Volpini’s presentation at BrightonSEO on SEO Automation Using GPT3 and Transformer Based Language Models. You can find them at this link.

Jeff Dean, Senior Fellow, and SVP at Google announced the introduction of Pathways: a new AI architecture multi-tasking, multi-modal, and more efficient. To find out more about Google Pathways, check out our Web Stories here.

Must Read Content

The Power of Product Knowledge Graph for E-commerce

Dive deep into the power of data for e-commerce

Why Do We Need Knowledge Graphs?

Learn what a knowledge graph brings to SEO with Teodora Petkova

Generative AI for SEO: An Overview

Use videos to increase traffic to your websites

SEO Automation in 2024

Improve the SEO of your website through Artificial Intelligence

Touch your SEO: Introducing Physical SEO

Connect a physical product to the ecosystem of data on the web