With artificial intelligence revolutionizing various industries, technologies like GPT-3 have become powerful tools in the digital landscape. However, more capable language models, such as GPT-4 and open-source LLMs, are setting new benchmarks for what AI can achieve. This evolution raises a critical question: how can online businesses gain a competitive edge when advanced language generation tools are widely accessible?

This article delves into how e-commerce stores can leverage the latest AI technology, including GPT-3 and beyond, to automate large-scale content production without compromising quality. We’ll explore the use of natural language generation for creating product descriptions and discuss the introduction of more advanced models, their benefits, and the challenges they present.

GPT models: What You Need to Know

- What are GPT-3, GPT-3.5 and GPT-4?

- What can a GPT model do?

- Is a GPT model a trustworthy source?

- But what about the correctness of product descriptions?

GPT-3, GPT-3.5 and GPT-4 for e-commerce

- Is a GPT model a competitive advantage for businesses?

- What can a GPT model achieve for e-commerce?

- Can a GPT model generate good product descriptions?

Beyond GPT-3: Exploring GPT-4 and Open-Source LLMs

- The Advancements Made with Newer Models

- Improved Capabilities for E-commerce

- Maintaining a Competitive Edge

- The Impact on Content Generation

Generate product descriptions with GPT-3

- Test 1: AI-generated descriptions with the pre-trained model (without fine-tuning)

- Test 2: AI-generated product description using the fine-tuned model

The seductive path of good enough when generating completions

- More data isn’t always better

- Maintaining the right tone of voice

- Never underestimate the power of prompt design

Content generation isn’t the final destination

Ready to Elevate Your E-commerce Content?

Discover how WordLift’s content generation service can transform your product descriptions and boost your online presence. Leverage the power of the latest AI technologies, including GPT-4, to create compelling, high-quality content at scale. Explore our content generation solutions and stay ahead in the competitive e-commerce landscape.

AI-generated content

Content writing is an activity in which almost every e-commerce store and blog owner must constantly invest to produce fresh, high-value content. Content can take various forms, such as blog articles, product descriptions, knowledge bases, and landing pages, among many other formats. Producing good content involves planning and a lot of effort.

A digital threat and an opportunity

The thought that generative AI can write like humans and thus produce infinite content can be perceived either as a digital threat for some businesses or a miraculous opportunity for others. AI writing technology has made tremendous strides, especially in the past few years, drastically reducing the time required to create good content.

From good to great

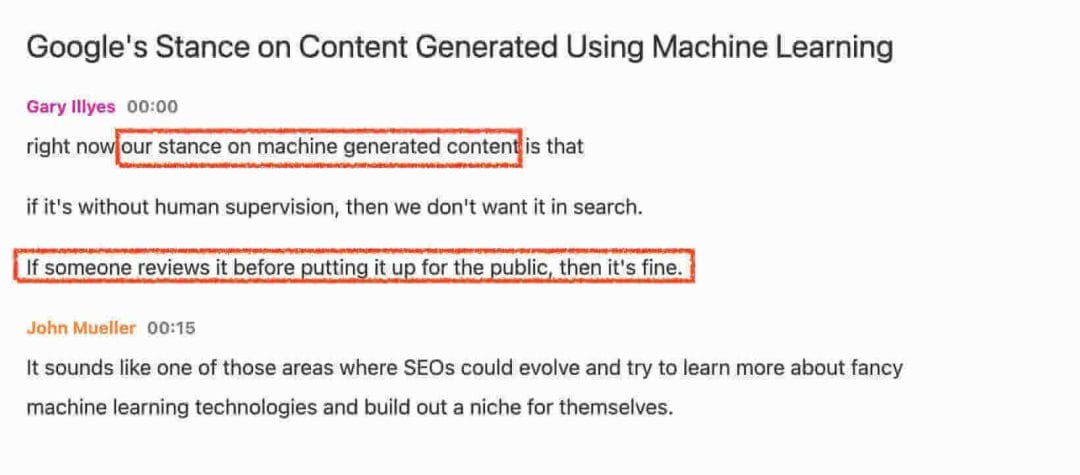

AI-generated content alone is rarely enough: it takes a professional content writer to turn it from good into great. With the current technology, human supervision, refinement, and validation are crucial to delivering great AI-generated content. Keeping a human in the loop also matches Google’s stance on machine-generated content, as stated by Gary Illyes and reported in our web story about automatically generated content in SEO:

Right now our stance on machine generated content is that if it’s without human supervision, then we don’t want it in search. If someone reviews it before putting it up for the public then it’s fine.

More capable models

Data on the rate of progress in AI shows how fast the technology is moving. In a recent essay, Jeff Dean, SVP at Google AI, outlines the progress and the directions in the field of machine learning over the next few years. Language models are one of the five areas expected to have a great impact on the lives of billions of people.

The competition to produce larger machine learning models has been ongoing and, in many cases, has led to significant increases in accuracy for a wide variety of language tasks such as language generation (GPT-3), natural language understanding (T5), and multilingual neural language translation (M4). The graph below, from the Microsoft research blog, shows the exponential growth in terms of the number of parameters for the state-of-the-art language models.

With these notable gains in accuracy have come new challenges. Therefore, there is a real need, now more than ever, to alleviate the complexity of these models and reduce their size. To continue to move the field forward, new breakthroughs are needed to answer the various sustainability aspects:

- From a technical perspective, the next generation of AI architectures is paving the way for general-purpose intelligent systems. Google introduced Pathways, an AI architecture designed to be multitask, multimodal, and more efficient. Such architectures promote less complex AI systems and allow these systems to generalize across thousands or millions of tasks.

- Training large-scale language systems is a costly process, both economically and environmentally. The Retrieval-Enhanced Transformer (RETRO), a language model by DeepMind, tackles the main reasons behind the race to increase the number of parameters. The approach decouples reasoning capabilities from memorization in order to achieve good performance without significantly increasing computation.

- With large-scale language models arise concerns related to their truthfulness when generating answers to questions. These concerns range from simple inaccuracies to wild hallucinations. Evaluating the truthfulness of these models and understanding the potential risks requires quantitative baselines and good measurements. In fact, not all false statements can be solved merely by scaling up. TruthfulQA is a first initiative to benchmark the truthfulness of these models towards improving them.

What’s not possible today will be possible in the near future with the rate of progress in AI. That’s why everyone and every business needs to get prepared for the adoption of AI-writing tools. If you’re interested in the topic of the rising tide of AI-generated content, The Search Singularity: How to Win in the Era of Infinite Content is a must-read.

GPT models: What You Need to Know

What are GPT-3, GPT-3.5 and GPT-4?

GPT-3, GPT-3.5, and GPT-4 are iterations of the Generative Pre-trained Transformer models developed by OpenAI, each representing significant advancements in artificial intelligence and natural language processing. GPT-3, introduced in June 2020, set a new standard for AI’s ability to understand and generate human-like text, making it a powerful tool for various applications, from content creation to customer service automation. GPT-3.5, an interim update, further refined its predecessor’s capabilities, offering improvements in understanding context and generating more coherent and contextually relevant responses. GPT-4, the most recent of the three, pushes the boundaries further with more sophisticated and nuanced language generation. Together, these models represent the cutting edge of AI’s ability to interact with and understand human language, opening up new possibilities for automation, creativity, and communication.

What can a GPT model do?

GPT models, standing for Generative Pre-trained Transformer, are a series of artificial intelligence models developed by OpenAI that have revolutionized the field of natural language processing. These models are capable of understanding and generating human-like text based on the input they receive. They can perform a wide range of tasks, including but not limited to writing essays, composing poetry, generating code, translating languages, summarizing long documents, and even engaging in conversation. The versatility of GPT models stems from their deep learning architecture, which allows them to learn patterns and nuances in language from vast datasets. This enables them to produce outputs that are remarkably coherent and contextually relevant, making them powerful tools for content creation, customer service automation, educational purposes, and more.

Is a GPT model a trustworthy source?

GPT models, including GPT-3, GPT-3.5, and the more recent GPT-4, are advanced language models developed by OpenAI that have revolutionized how we interact with artificial intelligence. These models are trained on extensive datasets comprising a wide range of internet text, including the Common Crawl dataset, Wikipedia entries, online books, and more. Their primary function is to predict and generate text based on the input they receive, making them incredibly versatile for various applications, from writing assistance to content creation and even coding.

One of the critical strengths of GPT models is their ability to draw information from their training data to produce coherent, contextually relevant text. However, these models do not inherently verify the accuracy of the information they generate, as they cannot cite sources or confirm the factual correctness of their outputs. This has led to efforts to enhance the factual accuracy of AI-generated content. For instance, WebGPT is a prototype that aims to mimic human research methods by being trained to cite its sources, particularly in Long-Form Question Answering (LFQA) scenarios. This development represents a significant step towards creating more reliable and truthful AI systems.

But what about the correctness of product descriptions?

The general principle of citing sources could be a perfect fit for open-ended questions. But this isn’t always the case for many other use cases. For instance, generating product descriptions requires a different solution. The product descriptions must precisely describe the product and its attributes. As a matter of fact, the source is already known: it’s the dataset of the e-commerce store that’s used to fine-tune the model. Instead, the e-commerce store would need a way to validate the attributes’ completeness and accuracy in most cases.

GPT-3, GPT-3.5 and GPT-4 for e-commerce

Is a GPT model a competitive advantage for businesses?

GPT models, including GPT-3 and its successors, can revolutionize various sectors by automating and enhancing tasks that rely on natural language processing. These models, developed through extensive research, come with their own limitations and challenges, particularly when applied in business contexts. Despite these challenges, integrating AI-generated content into data workflows that include curation, validation, and safeguards can give businesses a significant competitive edge. As technology evolves, so does the understanding and application of these models, making them increasingly valuable tools for companies looking to leverage AI for growth and innovation.

What can a GPT model achieve for e-commerce?

Generative Pre-trained Transformer (GPT) models, developed by OpenAI, have revolutionized various industries with their advanced capabilities in natural language processing. These models, including GPT-3, GPT-3.5, and the more recent GPT-4, are at the forefront of AI-driven applications, offering a wide range of functionalities through their API. The versatility of GPT models allows for innovative applications across different sectors, especially in e-commerce. Here are several ways GPT models can enhance online business operations:

- Analyzing customer feedback to extract valuable insights and summaries, making it easier for businesses to understand consumer needs and preferences.

- Utilizing semantic search to answer complex queries, providing users with more accurate and relevant search results.

- Creating sentiment analysis classifiers to gauge public opinion from social media data or customer reviews, helping businesses better understand their audience’s feelings towards their products or brands.

- Automatically generating a product taxonomy and organizing e-commerce products into intuitive categories and tags for more straightforward navigation and discovery.

- Crafting detailed and engaging product descriptions, leveraging the vast knowledge base of GPT models to produce content that can improve SEO and conversion rates.

In this exploration, we will focus on generating product descriptions using GPT models. By leveraging a public dataset, we will demonstrate how businesses can employ these powerful AI tools to create compelling e-commerce product descriptions, showcasing the practical application of GPT models in enhancing online retail experiences.

Can a GPT model generate good product descriptions?

Generative Pre-trained Transformer (GPT) models, developed by OpenAI, have significantly impacted the way we generate text, offering the ability to predict subsequent words based on a given prompt. This capability extends across various versions, including GPT-3, GPT-3.5, and the latest, GPT-4, enabling the creation of coherent sentences and human-like paragraphs. While these models can generate impressive outputs, they are not immediately perfect for drafting product descriptions for online stores without further customization.

The key to tailoring the output of GPT models to specific needs lies in fine-tuning. Unlike training a model from scratch, fine-tuning involves adjusting a pre-trained model with specific examples that align with your objectives. These examples should ideally encapsulate the product’s essence, reflect the brand’s identity, and convey the desired tone of voice. Through fine-tuning, businesses can harness the power of AI to generate product descriptions that add real value, enhancing their online presence and SEO efforts. For a deeper understanding of how AI can be customized for text generation and its benefits for SEO, exploring practical examples of AI text generation can provide valuable insights.

Beyond GPT-3: Exploring GPT-4 and Open-Source LLMs

As the digital landscape continues to evolve, so does the technology that powers it. GPT-3 has been a game-changer for many businesses, especially in e-commerce, by automating content creation at an unprecedented scale. However, the march of progress doesn’t stop there. With the advent of GPT-4 and other advanced language models, we’re on the cusp of a new era in artificial intelligence and content generation.

The Advancements Made with Newer Models

GPT-4 and similar models represent a significant leap forward in AI’s capabilities. These models have been trained on even larger datasets and refined algorithms, enabling them to understand and generate human-like text with greater accuracy and nuance. This evolution means that the content produced is more coherent and more aligned with the subtle tones and styles that brands strive to maintain.

Improved Capabilities for E-commerce

For e-commerce businesses, these advancements translate into even more powerful tools for automating content creation. GPT-4 can generate product descriptions, blog posts, and marketing copy that can be hard to distinguish from human-written content and that can be optimized for SEO. This capability allows businesses to maintain a consistent and engaging online presence, which is crucial for attracting and retaining customers in a competitive digital marketplace.

Maintaining a Competitive Edge

The continuous evolution of AI models like GPT-4 underscores the importance of staying ahead of the technology curve. As these tools become more accessible, the competitive advantage lies not just in using AI for content generation but in leveraging the most advanced models available. Businesses that adopt these newer models early can enjoy a significant edge over competitors, benefiting from more efficient content production processes and higher-quality outputs.

The Impact on Content Generation

The introduction of GPT-4 and other advanced models marks a pivotal moment in the journey of AI-driven content generation. These models promise to automate content creation tasks with unprecedented efficiency and quality, enabling businesses to scale their content strategies without sacrificing quality. As we look to the future, the role of AI in content generation will only grow, making it an essential tool for any business looking to thrive in the digital age.

In conclusion, the advancements made with GPT-4 and other advanced models are set to revolutionize how e-commerce businesses approach content creation. By staying informed about these developments and incorporating the latest technologies into their content strategies, companies can maintain a competitive edge and continue to engage their audiences effectively.

We’ve enhanced Agent WordLift (GPT version) with the capability to craft product descriptions directly from photos, utilizing Google SERP and entity analysis. While not scalable yet, this feature showcases the immense potential of our innovative approach.

From data to prompts

The dataset

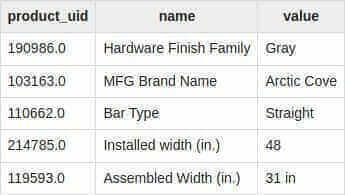

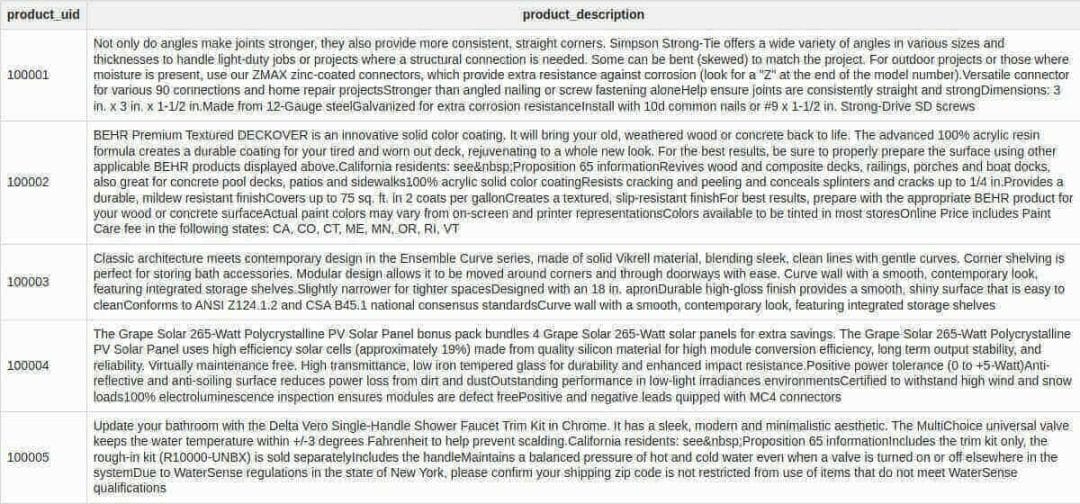

The dataset used in this work contains a list of e-commerce products. Each product can have multiple attributes and a description. The dataset can be downloaded from this Kaggle challenge: Home Depot Product Search Relevance. The challenge seeks to improve customers’ shopping experience by accurately predicting the relevance of search results.

In this work, we’re deviating from the initial aim of the challenge to adapt it to the use case of product description generation using GPT-3. Consequently, pre-processing work must be conducted to discard inapplicable data and extract valuable data.

Data preparation

As mentioned earlier, let’s go through the steps behind selecting a product and creating its associated dataset. From the Kaggle dataset, you first need to download the CSV file named attributes.csv.

To help you get started, I prepared the various steps required to load the data, choose a specific product category, and extract the list of products within the chosen category with their related attributes. Find the related code in this Google Colab.

In this demo, I chose to generate descriptions for gloves, a product in the clothing category. You can choose a different product from the dataset by running the same code and changing a few parameters.

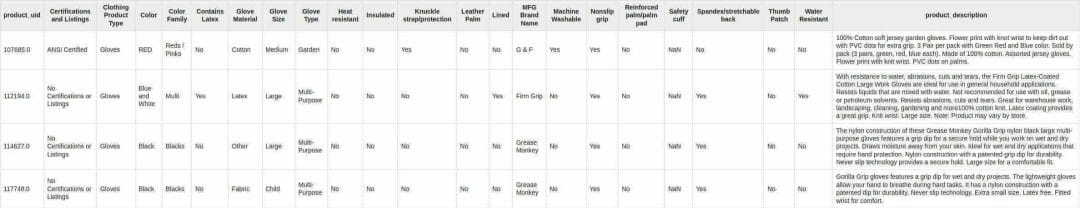

Loading the attributes returns a set of products with their corresponding characteristics and values. Please note that every product has a unique product_uid and can have one or more attributes (the name column), each with a value (the value column).

The next steps consist of a number of operations to:

- clean the data

- choose a category and a specific product

- drop columns that contain a high percentage of empty attribute values

- pivot the joined attributes data frame to display the complete set of attributes of a product on a single row
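The cleaning, sparse-column filtering, and pivoting steps above can be sketched with pandas as follows. This is a minimal illustration on a toy data frame that mimics the product_uid, name, and value columns of attributes.csv, not the code from the Colab. The sample rows and the `MAX_EMPTY_SHARE` threshold are assumptions made for the example, and category selection is left out.

```python
import pandas as pd

# Toy stand-in for attributes.csv (columns: product_uid, name, value).
attributes = pd.DataFrame(
    {
        "product_uid": [100, 100, 100, 101, 101, 102],
        "name": ["Color", "Material ", "Size", "Color", "Material", "Color"],
        "value": ["Black", "Leather", "Large", "Red", "Nylon", "Blue"],
    }
)

# Clean: drop rows without a product or attribute name, strip stray whitespace.
attributes = (
    attributes.dropna(subset=["product_uid", "name"])
    .assign(name=lambda df: df["name"].str.strip())
)

# Pivot: one row per product, one column per attribute name.
wide = attributes.pivot_table(
    index="product_uid", columns="name", values="value", aggfunc="first"
)

# Drop attribute columns that are empty for too many products.
MAX_EMPTY_SHARE = 0.5  # hypothetical threshold, tune it for your own data
keep = wide.columns[wide.isna().mean() <= MAX_EMPTY_SHARE]
wide = wide[keep]

print(wide)
```

In this toy example the Size column is filled for only one of three products, so it is dropped, while Color and Material are kept.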

The following image displays the columns for the chosen product. It’s important to note that the columns, which refer to the product attributes, are specific to the product you select. In this case, these columns are related to the attributes that are used to describe gloves. Consequently, these columns won’t be the same for another product.

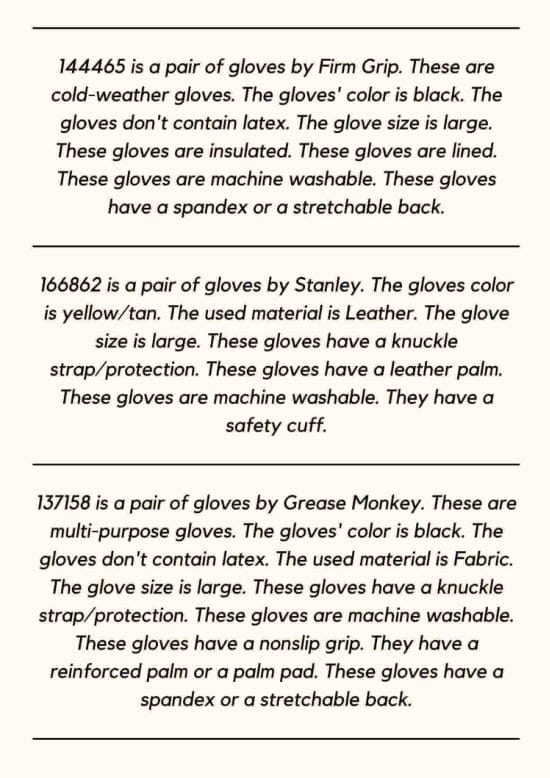

Prompt design

Similar to the prompt example that I presented earlier, the data from the previous step has to be transformed into sentences. The goal of this step is to describe each product using the available attributes. Each product will have its own prompt.

In this context, prompt design, or prompt engineering, consists of assembling the attributes and their values in sentences. You could have a script that iterates over the list of available products and generates a corresponding prompt for each one. The image below presents a few examples of prompts that describe different pairs of gloves.
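A script of that kind could look like the sketch below. The sentence template ("Name: value.") and the `build_prompt` helper are assumptions made for illustration; the prompts used in this work may be phrased differently.

```python
import math


def build_prompt(attributes: dict) -> str:
    """Turn a product's attribute/value pairs into short sentences."""
    sentences = []
    for name, value in attributes.items():
        # Skip attributes with no usable value (None, NaN, or empty string).
        if value is None:
            continue
        if isinstance(value, float) and math.isnan(value):
            continue
        text = str(value).strip()
        if not text:
            continue
        sentences.append(f"{name.strip()}: {text}.")
    return " ".join(sentences)


# Hypothetical product used only for this example.
product = {
    "Product type": "Work gloves",
    "Color": "Black",
    "Material": "Leather",
    "Size": None,
}
prompt = build_prompt(product)
print(prompt)
```

Running the same function over every row of the pivoted data frame would yield one prompt per product.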

Generate product descriptions with GPT-3

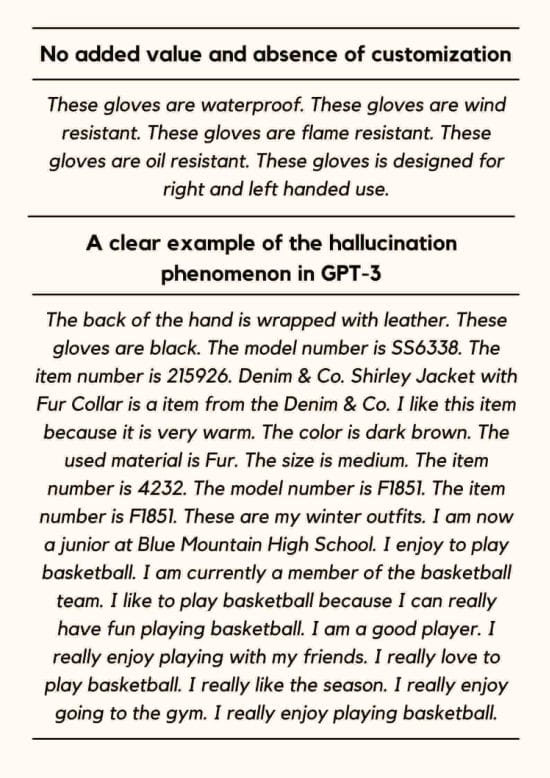

Test 1: AI-generated descriptions with the pre-trained model (without fine-tuning)

Once a product is described using a few sentences, GPT-3 can be called to return the related completion. As the intention is to generate product descriptions, the associated endpoint, create completion, is invoked. It’s one of several endpoints made available by the OpenAI API. Each time this endpoint receives a well-formatted request with a prompt, it returns a completion.
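As a rough sketch, a well-formatted request to the completions endpoint can be assembled as shown here. The example only builds the request and does not send it. The `build_completion_request` helper and the placeholder API key are hypothetical, the 200-token limit and the Curie model are the settings reported for Test 1 in this article, and the model name may no longer be served by OpenAI.

```python
import json
import urllib.request


def build_completion_request(
    prompt: str, api_key: str, model: str = "curie", max_tokens: int = 200
) -> urllib.request.Request:
    """Build (but do not send) a POST request for the completions endpoint."""
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        "https://api.openai.com/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_completion_request(
    "Color: Black. Material: Leather.", api_key="YOUR_API_KEY"
)
body = json.loads(request.data)
print(body)

# To actually send it, you would call urllib.request.urlopen(request)
# with a valid API key and a model that is currently available.
```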

While it’s possible to use the pre-trained GPT-3 model directly to create completions, this isn’t recommended. The quality of the completions is likely to fall below expectations in terms of attribute correctness, writing style, tone of voice, etc.

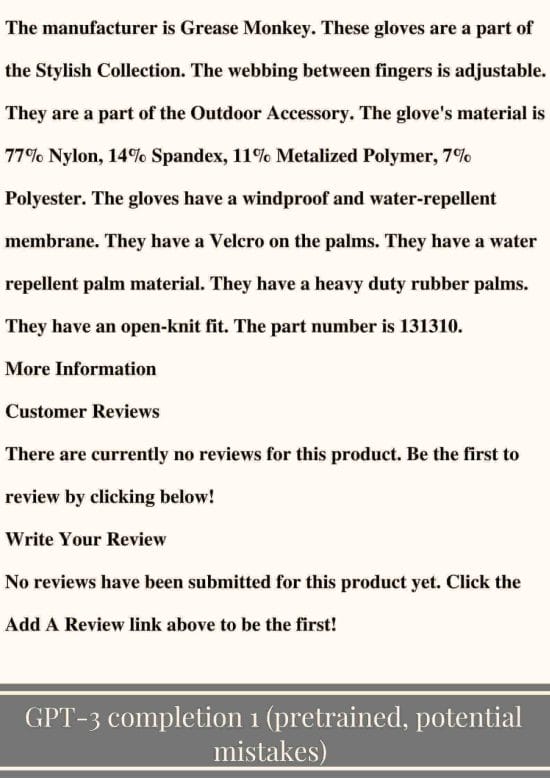

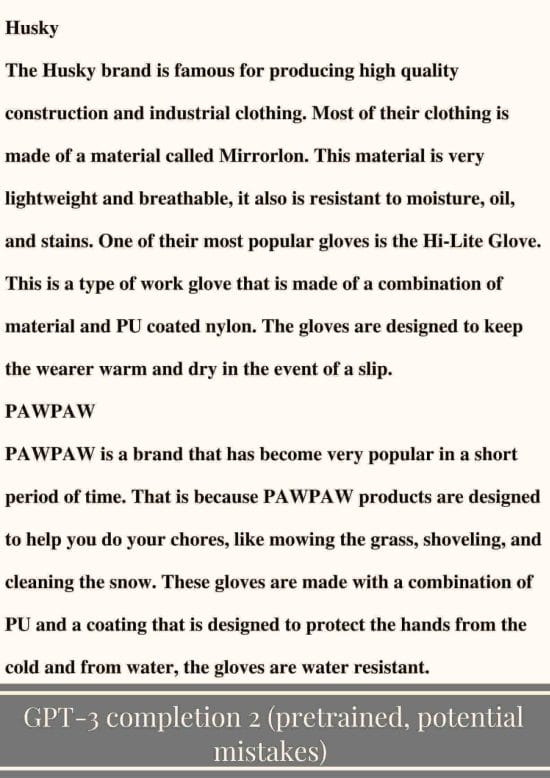

Based on the result of the following test, it’s clear that the pre-trained model doesn’t return good completions on its own. As a reminder, completions here are the automated product descriptions generated for the pairs of gloves.

For this test, we set the maximum number of tokens to 200 while keeping the default values for the remaining parameters. We used Curie, one of the engines powering GPT-3. Below are a number of completions returned by the pre-trained GPT-3 model.

Note that the completions returned by GPT-3 contain incorrect information as well as false statements.

A first sample completion

A second sample completion

Beyond the examples above, some completions were very short as in the following examples:

- Very short completions: “The measurements of these gloves are: length: 9.5cm. These gloves are manufactured in China.”

- Very short and out of context: “Always refer to the actual package for the most accurate information”

And many other completions suffered from a variety of issues (grammatical errors, poor structure, repetitions, etc.) as shown in the following screenshot.

Fortunately, there’s a way to produce good content using GPT-3. We’ll discuss and show this in the next section.

Test 2: AI-generated product description using the fine-tuned model

The good thing about the dataset used in this work is that it also contains descriptions for a large number of products. In the dataset, you’ll find another csv file named product_descriptions.csv.

Let’s load the descriptions in a data frame as depicted in the following image.

As you notice, the product_description data frame and the attribute data frame (from the previous steps) can be joined using the product_uid column. Doing so associates every product_uid with its corresponding attributes and description.
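The join can be sketched with a pandas merge on product_uid. The two small data frames are made-up stand-ins for the pivoted attributes and for product_descriptions.csv, and the inner join is an assumption: it keeps only products that have both attributes and a description.

```python
import pandas as pd

# Toy stand-in for the pivoted attributes (one row per product).
attributes_wide = pd.DataFrame(
    {"product_uid": [100, 101, 102], "Color": ["Black", "Red", "Blue"]}
)

# Toy stand-in for product_descriptions.csv.
descriptions = pd.DataFrame(
    {
        "product_uid": [100, 101, 103],
        "product_description": [
            "Durable black gloves.",
            "Warm red gloves.",
            "Unrelated item.",
        ],
    }
)

# Keep only products present in both data frames.
products = attributes_wide.merge(descriptions, on="product_uid", how="inner")
print(products)
```

Products 102 and 103 drop out here because each is missing from one of the two sides.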

As in any machine learning task, the data is divided into two parts:

- The first part is used to fine-tune GPT-3

- The remaining part is used to run tests during inference

Adding more data, whenever available, is recommended in most cases. Nonetheless, it’s important to have a collection of samples that reflects the reality of the e-commerce store’s products. At a high level, reviewing the data means asking and answering questions like:

- Do the product descriptions cover the essential characteristics of the products?

- Are there enough attributes to build unique prompts?

- Is it possible to map the attributes in the prompt with the information available in the product descriptions?

- Do the samples in-hand cover a good diversity of products for the e-store?

Taking the time to review the available information is obviously what will make a difference in fine-tuning a language model. This exercise will always reveal valuable insights that will help you improve the data before running a fine-tuning task—that’s where the real magic happens.

In this work, there are 136 product descriptions available for the gloves. Of these, 110 are used to fine-tune GPT-3, which leaves 26 for testing. After fine-tuning GPT-3, new completions are generated using the customized model. For the sake of these tests, we made sure that the completions are generated for the same pairs of gloves used in Test 1 and that these products are in the testing set.
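The split and the fine-tuning file can be sketched as below. The random split with a fixed seed, the placeholder examples, and the helper names are assumptions made for illustration. The prompt/completion JSONL layout follows the format OpenAI documented for GPT-3 fine-tuning, so check the current documentation before reusing it.

```python
import json
import random


def split_examples(examples, n_train, seed=42):
    """Shuffle a copy of the examples and split it into train and test parts."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as one JSON object per line."""
    return "\n".join(
        json.dumps({"prompt": prompt, "completion": completion})
        for prompt, completion in examples
    )


# 136 placeholder (prompt, description) pairs standing in for the gloves data.
examples = [(f"Color: shade {i}.", f"Description {i}.") for i in range(136)]

train, test = split_examples(examples, n_train=110)
jsonl = to_jsonl(train)

print(len(train), len(test))
print(jsonl.splitlines()[0])
```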

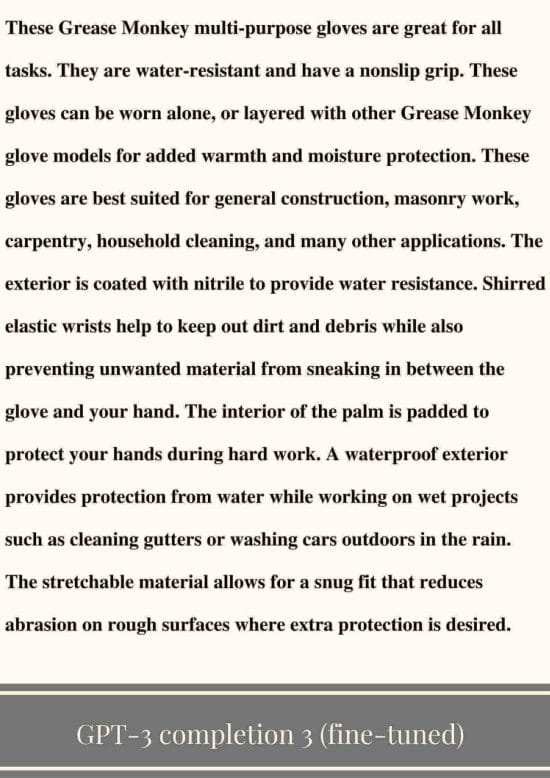

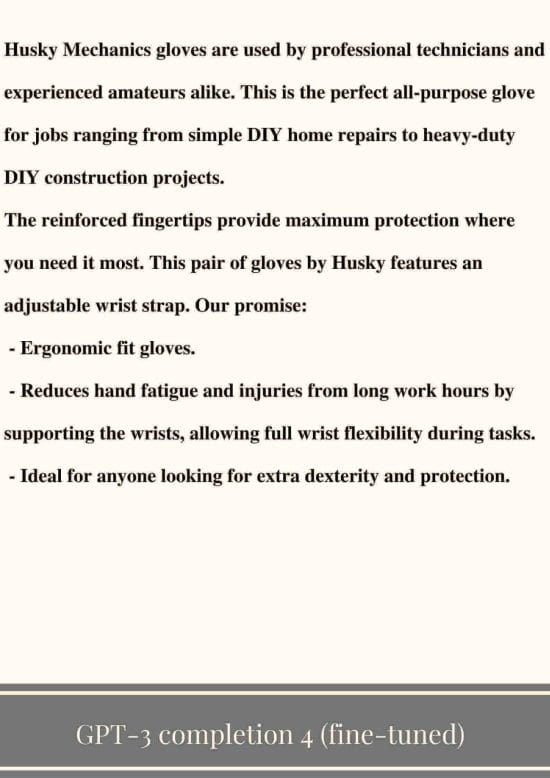

In terms of the results of the fine-tuned model, the following two images depict the generated completions.

Fine-tuned completion

Another fine-tuned completion

Discover everything you need to know about GPT-3 for product description in our last web story.

The seductive path of good enough when generating completions

As shown in the AI-generated descriptions, GPT-3 can produce convincing sentences. While the general form of the fine-tuned completions looks good, there are some inconsistencies and mistakes related to some attributes. For instance, the first product isn’t water-resistant as per the dataset’s attributes. Additionally, an SEO would need to optimize the generated content for search and ensure that target keywords are present.

As these AI-generated completions make clear, fine-tuning the model allows us to achieve much better product descriptions. However, the limited number of gloves in the dataset, along with the other elements discussed below, still needs to be addressed before AI-generated descriptions can become great content.

There are many elements that a content writer needs to consider when writing effective product descriptions. Similarly, there are some best practices to consider as well as some pitfalls to avoid when using AI technology to generate automated completions online.

More data isn’t always better

According to the general best practices in OpenAI’s documentation, each doubling of the number of examples tends to bring a linear increase in model quality. One could therefore augment the data available in this demo to further improve the quality of the generated completions. Keep in mind, however, that more data only helps when it consists of high-quality examples.

Data distribution is an additional element that can make a difference. One could easily explore the gloves dataset from various angles. As shown in the visualization below, it’s possible to group the gloves by color, segment them by size, and color them by type. Doing so is a straightforward way to evaluate the distribution of attributes (color, size, type, etc.) across the dataset. Facets, the tool used to produce the visualization below, makes it simple yet powerful to analyze patterns in large amounts of data. Hence, one could find out whether some attributes have a lot of data while others have little or none at all.
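A quick distribution check can also be done in a few lines of Python before opening a visual tool. The sketch below counts the values of each attribute across a handful of made-up glove records. It is not a replacement for Facets, and the `value_counts` helper and the sample rows are assumptions.

```python
from collections import Counter

# Hypothetical glove records used only for this example.
gloves = [
    {"Color": "Black", "Size": "Large", "Type": "Work"},
    {"Color": "Black", "Size": "Medium", "Type": "Work"},
    {"Color": "Red", "Size": None, "Type": "Ski"},
]


def value_counts(rows, attribute):
    """Count how often each value of an attribute appears, including gaps."""
    return Counter(row.get(attribute) or "(missing)" for row in rows)


for attribute in ("Color", "Size", "Type"):
    print(attribute, dict(value_counts(gloves, attribute)))
```

Attributes dominated by a single value, or with many "(missing)" entries, are candidates for more data collection before fine-tuning.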

Maintaining the right tone of voice

The dataset used in this work is small, and consequently the number of examples available to fine-tune the model is limited. However, this isn’t the only challenge. The fine-tuning dataset contains gloves from various brands and for various target audiences. Some of these gloves are for skiing while others are for heavy-duty tasks. Maintaining the right tone of voice is another critical element when fine-tuning the model. Grouping examples by brand and product, and adding elements such as social media copy, makes a big difference when fine-tuning the model.

Never underestimate the power of prompt design

When choosing a product to generate completions, there are some guidelines to keep in mind:

- To build a good prompt you need relevant attributes. The product needs to have at least a minimum number of attributes. Of course, different products will have different attributes but in general some attributes could be related to the color of the product, its material, its shape, etc.

- Attributes alone aren’t enough: you also have to make sure that relevant product descriptions are available. They are essential to fine-tune the model.

- From an SEO perspective, it’s important that your target keywords be present within the data. Otherwise, the chances of having them in the AI-generated descriptions are slim. In fact, the target keywords that searchers include in their queries need to be part of the prompts as well as the descriptions. In this work, for example, this means verifying that keywords like gloves, the type (e.g., skiing, heavy-duty, gardening, cycling, etc.), and the target audience (e.g., professionals, amateurs, etc.) are available in the data so that they make their way into the AI-generated descriptions.

- Pro tip: When building the prompt, be sure that the attributes are relevant and that they also appear in the descriptions (that will be used to fine-tune GPT-3). Otherwise, the accuracy of the generated description will drop.
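The keyword guideline above can be checked with a small script before fine-tuning. The sketch below uses a plain case-insensitive substring match, so a short keyword such as "ski" would also match "skin". The `missing_keywords` helper, the sample texts, and the keyword list are all hypothetical.

```python
def missing_keywords(texts, keywords):
    """Return the target keywords that never appear in the given texts."""
    corpus = " ".join(texts).lower()
    return [keyword for keyword in keywords if keyword.lower() not in corpus]


# Hypothetical prompts and descriptions for a single product.
texts = [
    "Product type: Ski gloves. Color: Black.",
    "Warm ski gloves for amateurs.",
]
target_keywords = ["gloves", "ski", "heavy-duty", "professional"]

print(missing_keywords(texts, target_keywords))
```

Keywords reported as missing are unlikely to show up in the generated descriptions unless they are added to the prompts and to the fine-tuning examples.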

Content generation isn’t the final destination

With a dataset, a product feed, or a knowledge graph at hand, false statements and mistakes can be spotted using an automated validation process. The validation is a product-specific process. It’s a critical step of the end-to-end workflow. This is a post-generation step that aims to identify the presence of important attributes as well as the correctness of their values.
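To make the idea concrete, here is a deliberately simple sketch of a post-generation check. It is not WordLift’s validation workflow. It flags attribute values that are absent from a generated description and claims that the product data does not support. The `validate_description` helper, the sample product, and the list of claims to screen for are assumptions.

```python
def validate_description(description, attributes, unsupported_claims=()):
    """Compare a generated description with the known product attributes."""
    text = description.lower()
    # Attributes whose value is never mentioned in the description.
    missing = [
        name for name, value in attributes.items() if str(value).lower() not in text
    ]
    # Claims that appear in the text although the data does not support them.
    unsupported = [claim for claim in unsupported_claims if claim.lower() in text]
    return {"missing": missing, "unsupported": unsupported}


# Hypothetical product: the data does not say the gloves are water-resistant.
attributes = {"Color": "Black", "Material": "Leather"}
description = "These black gloves are water-resistant and built to last."

report = validate_description(
    description, attributes, unsupported_claims=("water-resistant",)
)
print(report)
```

A real workflow would need product-specific rules, for example handling synonyms and units, and the flagged descriptions would still go to a human reviewer.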

At WordLift, we have conceived and implemented a validation workflow that covers the process of automated generation and factual verification from end to end. Scaling this task is possible but, so far, this technology isn’t totally capable of acting on its own without human oversight.

With the long arc of progress in the field of AI, the pendulum will not swing back. To continue to progress, everyone will need to adapt. With more and more AI-writing tools, online businesses and search engines alike need to develop new solutions and appropriate workflows to maintain growth and to provide a high-quality user experience.
