A New Chapter in WordLift’s Journey

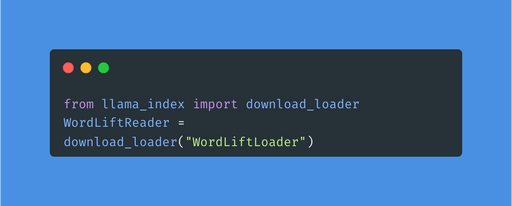

We’re thrilled to introduce WordLift Reader, a new connector for LlamaIndex and LangChain, in the spirit of continuous innovation towards generative AI for SEO. This feature represents a small yet significant evolution: it enables users to interact directly with their knowledge graph through engaging conversations.

The Power of Bridging Knowledge Graphs with Language Models

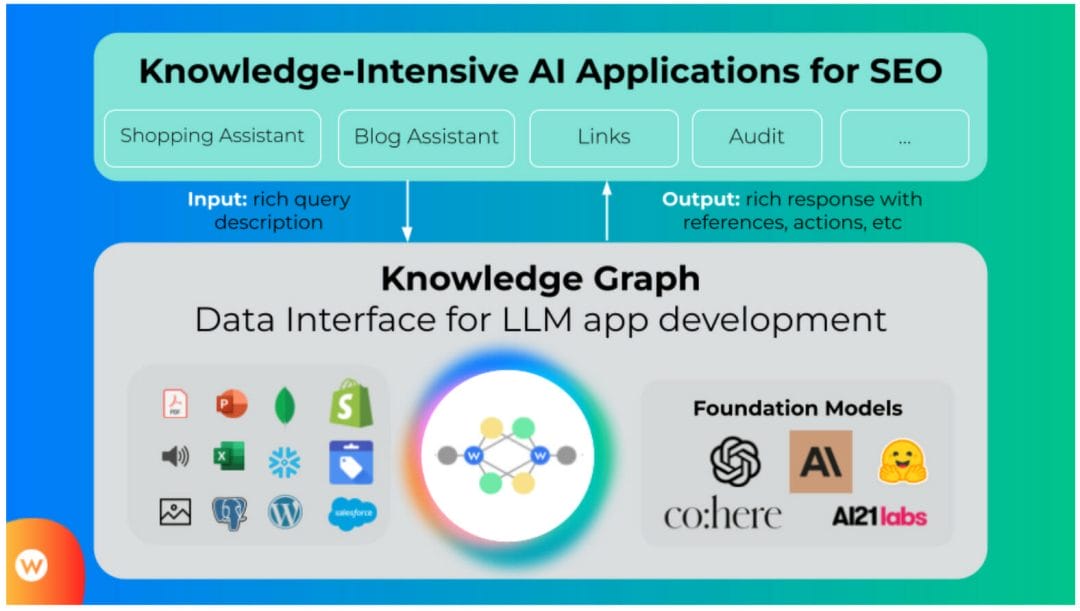

In our journey to make the web more intelligent and accessible, we’ve recognized the tremendous potential of combining the structured world of knowledge graphs with the fluid, context-aware capabilities of large language models (LLMs). This fusion, which we call neuro-symbolic AI, is a game-changer. With their ability to store and organize vast amounts of information, knowledge graphs provide a solid foundation of relevant facts and relationships. LLMs, on the other hand, with their capacity to understand and generate human-like text, bring a level of personalization that static web pages simply can’t match. By bridging these two powerful technologies, we’re not just enhancing the user experience but fundamentally transforming it.

We’re moving from a world where information is passively consumed to a digital ecosystem where users engage in rich, vibrant, and personalized conversations with the help of semantic concepts and structured data.

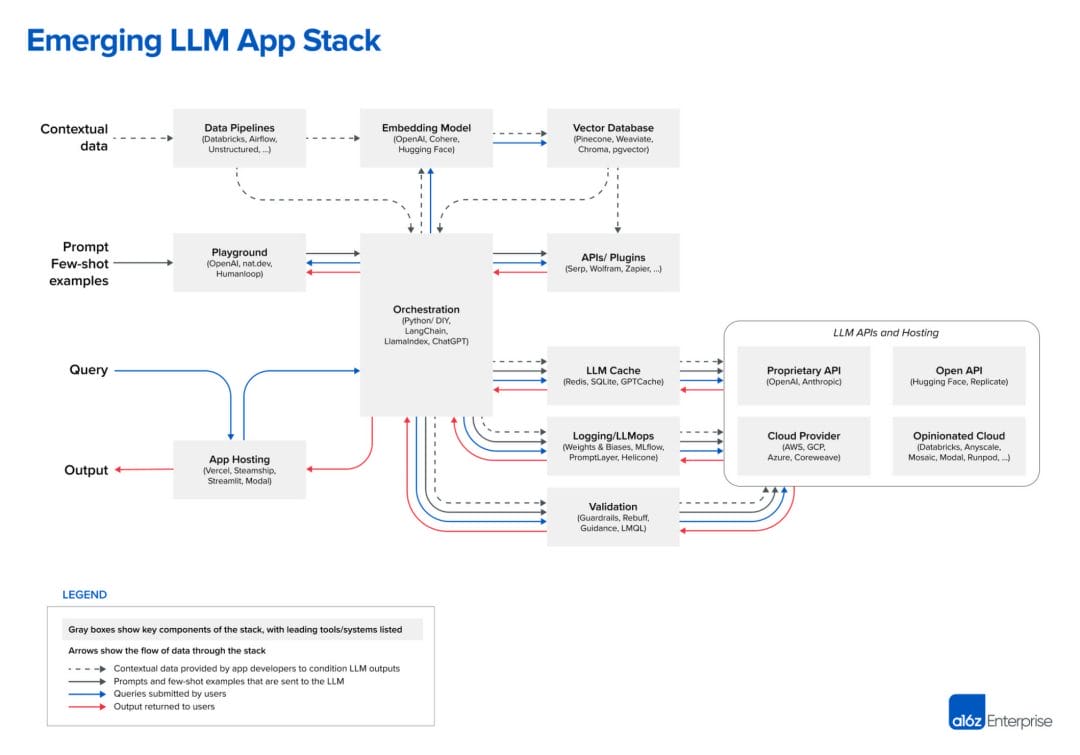

Modern Architecture for Generative AI Apps

The emerging architecture for LLM applications is a fascinating blend of new and existing technologies. I am living the AI hype and the evolution of its stack with the same enthusiasm I had back in the mid-nineties, when Mosaic was the interface to the Internet.

Large Language Models (LLMs) are a powerful new tool for building software, but their unique characteristics require a combination of old ETL techniques and innovative approaches (embeddings, prompting, intent matching) to leverage their capabilities fully. The reference architecture for the Large Language Model app stack includes standard systems, tools, and design patterns used by AI startups and tech companies.

The architecture, as depicted by a16z, is primarily focused on in-context learning; this is the ability of LLMs to be controlled through clever prompting techniques and access to private “contextual” data.

The architecture can be divided into three core areas:

- In the data preprocessing/embedding stage, our KG comes into play along with the new connector. Here semantic data can be selected and sliced before being passed through an embedding model and stored in a set of indices (vector stores being the most common type).

- In the prompt construction stage, a series of prompts are compiled to submit to the LLM. The prompts combine a hard-coded template, examples of valid outputs, information from external APIs, and relevant documents retrieved from the vector database.

- In the inferencing stage, the prompts are submitted to a pre-trained LLM for prediction.

LlamaIndex, LangChain, Semantic Kernel and other emerging frameworks orchestrate the process and represent an abstraction layer on top of the LLM.
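To make the three stages more concrete, here is a minimal, dependency-free sketch. It is not how LlamaIndex or LangChain are implemented: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector store, `fake_llm` stands in for a call to a pre-trained LLM, and the sample texts are made up for illustration.

```python
import math
import re
from collections import Counter

# Made-up sample content; in practice this would come from the knowledge graph.
DOCS = [
    "Trail running shoes with a waterproof membrane and reinforced toe cap",
    "Lightweight down jacket that packs into its own pocket",
    "Returns are accepted within 30 days of delivery",
]


def embed(text):
    """Stage 1 (toy): represent text as a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(docs):
    """Stage 1 (toy): an in-memory list playing the role of a vector store."""
    return [(doc, embed(doc)) for doc in docs]


def retrieve(index, question, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


def build_prompt(question, context_docs):
    """Stage 2: combine a hard-coded template with the retrieved documents."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def fake_llm(prompt):
    """Stage 3 (placeholder): a real app would send the prompt to an LLM here."""
    return f"[LLM response to a {len(prompt)}-character prompt]"


index = build_index(DOCS)
question = "Do you have waterproof shoes for running?"
prompt = build_prompt(question, retrieve(index, question))
print(prompt)
print(fake_llm(prompt))
```

An orchestration framework hides most of this plumbing, but the flow stays the same: index once, then retrieve and assemble a prompt for every question.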

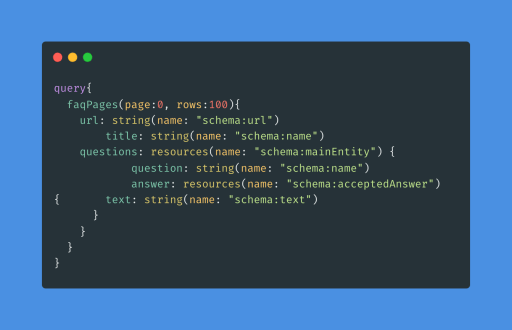

The WordLift connector loads the data we need from the knowledge graph using GraphQL, a query language introduced by Facebook that allows for precise, efficient data fetching. With only a few lines of code, we can specify exactly what data we need by extracting from our KG the sub-graphs we want. We do this by using specific properties of the schema.org vocabulary (or any custom ontology we use).
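As a rough illustration of what "a few lines" can look like, the sketch below builds a GraphQL query that selects only a handful of schema.org properties and wraps it in a standard GraphQL-over-HTTP JSON body. The endpoint URL, the `Authorization` header format, the `products` root field and the `string(name: ...)` field shape are all assumptions made for this example; check the connector documentation and the schema exposed by your own knowledge graph for the real names.

```python
import json

# Assumption: placeholder endpoint, replace with the one from your documentation.
GRAPHQL_ENDPOINT = "https://example.com/graphql"


def build_query(root_field, properties, rows=10):
    """Build a query selecting only the given properties.

    `properties` maps an alias to a vocabulary property, e.g.
    {"name": "schema:name"}. The field shape used here is illustrative.
    """
    selections = "\n".join(
        f'    {alias}: string(name: "{prop}")' for alias, prop in properties.items()
    )
    return f"query {{\n  {root_field}(rows: {rows}) {{\n{selections}\n  }}\n}}"


def build_request(query, api_key):
    """Describe the HTTP POST without sending it (header format is an assumption)."""
    return {
        "url": GRAPHQL_ENDPOINT,
        "headers": {
            "Authorization": f"Key {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps({"query": query}),
    }


query = build_query(
    "products",
    {
        "name": "schema:name",
        "description": "schema:description",
        "url": "schema:url",
    },
    rows=5,
)
request = build_request(query, api_key="YOUR_KEY")
print(query)
```

Changing the dictionary of properties is all it takes to extract a different sub-graph, which is what keeps the data-loading step small.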

GraphQL enables the connector (WordLift Reader) to stay updated with the latest changes on the website without the need for crawling, so conversations are based on the most current data in the graph. This integration via GraphQL between our KGs and LlamaIndex (or LangChain) lets the connector transform static, structured data into dynamic, interactive conversations.

Modularization: Tailoring Conversations to User Needs

We are incorporating the concept of modularization into the new generative AI stack for SEO. Building blocks like the “TalkMe” widget, introduced in my previous blog post, can be used to “modularize” conversations: the connector has been designed to draw on different sub-graphs and provide specific responses. This allows us to tailor conversations to the specific needs of users.

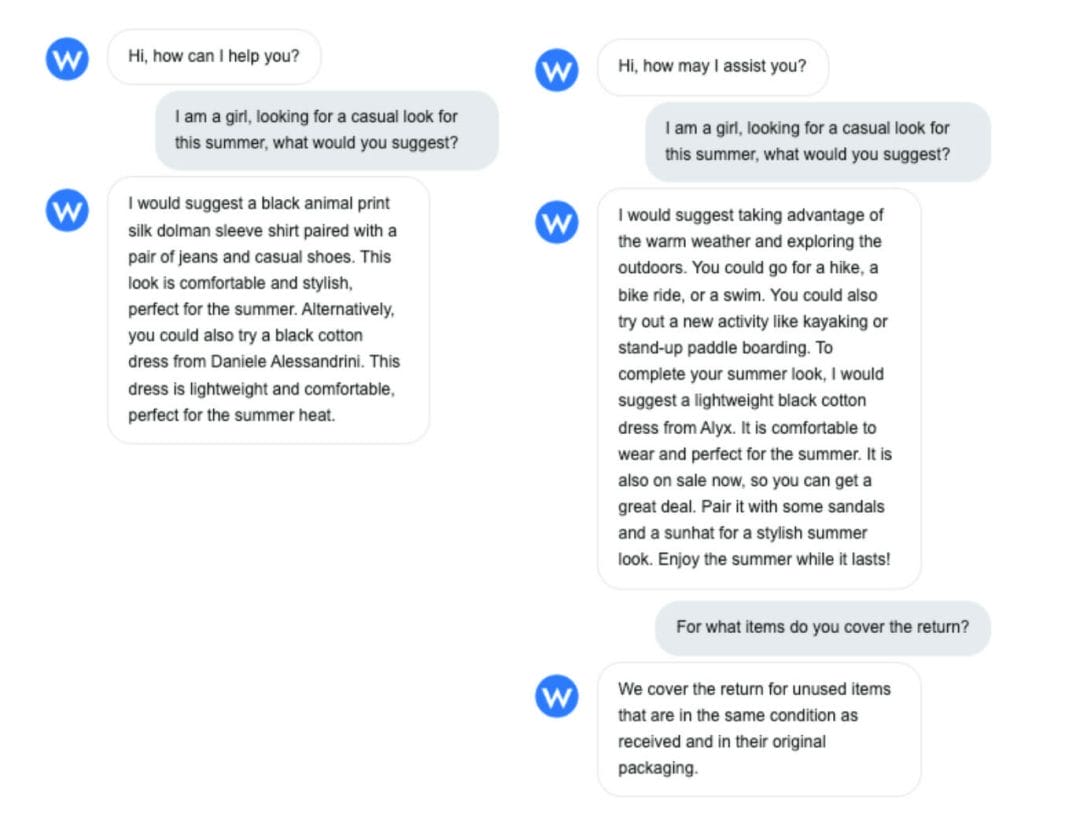

In the example below, a Shopping assistant is built by accessing the following:

- schema:Product for product discovery and recommendation;

- schema:FAQPage for information on the store return policy.

Let’s review the dialogue intents that we can address using product data alone, and those we can address by combining product data with Q&A content.

The power of leveraging a Knowledge Graph built with schema.org for LLMs: on the left, the AI uses only schema:Product; on the right, the AI uses both schema:Product and schema:FAQPage.

Here Is How To Connect With Your Audience Using AI Agents

The notebook below provides a reference implementation that uses our demo e-commerce website. To see how things work, open the link and follow the steps.

Ready to jump to the code? 🪄 wor.ai/wl-reader-demo (here is a Colab Notebook to see how things work).

📖 Here is a link to the documentation.

We’ll build an AI-powered shopping assistant using LlamaIndex and the Knowledge Graph behind our e-commerce demo website (https://product-finder.wordlift.io/). You must add your OpenAI API key and WordLift Key to run this tutorial. We’ll begin by setting up the environment: installing the required libraries (langchain, openai, llama-index) and configuring the API keys.

The code’s core part is focused on using the WordLiftReader class to build a vector index of the shop’s products.
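Before anything can be indexed, the records returned by the knowledge graph have to become documents made of text plus metadata. The sketch below shows that idea in plain Python. The `Document` dataclass, the `to_documents` helper and the `text_fields` / `metadata_fields` parameters are hypothetical stand-ins written for this example, not the actual WordLiftReader interface, and the records are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Minimal stand-in for the document objects an indexing library consumes."""
    text: str
    metadata: dict = field(default_factory=dict)


def to_documents(records, text_fields, metadata_fields):
    """Turn knowledge-graph records into documents.

    Values of `text_fields` are joined into the text that gets embedded;
    values of `metadata_fields` travel along as metadata (e.g. the URL).
    """
    docs = []
    for record in records:
        # Skip empty or missing values so they don't pollute the text.
        text = "\n".join(str(record[f]) for f in text_fields if record.get(f))
        metadata = {f: record[f] for f in metadata_fields if f in record}
        if text:
            docs.append(Document(text=text, metadata=metadata))
    return docs


# Made-up records shaped like a JSON response from the graph.
records = [
    {
        "name": "Trail running shoes",
        "description": "Waterproof, reinforced toe cap.",
        "url": "https://example.com/p/1",
    },
    {"name": "Down jacket", "description": None, "url": "https://example.com/p/2"},
]

documents = to_documents(
    records, text_fields=["name", "description"], metadata_fields=["url"]
)
for doc in documents:
    print(doc.metadata["url"], "->", doc.text.replace("\n", " | "))
```

Keeping the URL as metadata rather than text means the assistant can link back to the product page without the URL affecting similarity search.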

We’ll also see how to create multiple indices based on different schema classes, such as schema:Product and schema:FAQPage. This allows the AI agent to handle different types of user queries effectively. For example, the agent can suggest products based on user preferences or provide information about the shop’s return policy.
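The effect of adding a second index can be sketched with a toy router. Here simple keyword overlap stands in for per-index vector retrieval, the `SUBGRAPHS` content is made up for illustration, and `best_match` is a hypothetical helper, not part of LlamaIndex or LangChain.

```python
import re

# Made-up sample content standing in for two sub-graphs of the knowledge graph.
SUBGRAPHS = {
    "schema:Product": [
        "Trail running shoes, waterproof, available in sizes 38 to 46",
        "Down jacket, lightweight, packs into its own pocket",
    ],
    "schema:FAQPage": [
        "What is the return policy? Items can be returned within 30 days",
        "How long does shipping take? Orders ship within two working days",
    ],
}


def tokens(text):
    """Lowercased set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_match(question, enabled_types):
    """Return (schema_type, document) with the largest keyword overlap.

    Returns None when no enabled sub-graph shares a word with the question,
    i.e. the assistant has nothing to ground an answer on.
    """
    q = tokens(question)
    best, best_score = None, 0
    for schema_type in enabled_types:
        for doc in SUBGRAPHS[schema_type]:
            score = len(q & tokens(doc))
            if score > best_score:
                best, best_score = (schema_type, doc), score
    return best


product_only = ["schema:Product"]
product_and_faq = ["schema:Product", "schema:FAQPage"]

print(best_match("What is your return policy?", product_only))
print(best_match("What is your return policy?", product_and_faq))
print(best_match("Do you have waterproof running shoes?", product_and_faq))
```

With only the product sub-graph enabled, the return-policy question finds no grounding at all; enabling schema:FAQPage adds that intent without touching the product module.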

To see our connector in action, watch the video with Andrea Volpini, Teodora Petkova and Jason Barnard. Here, you can learn how to create an enriching and interactive experience using the data in the knowledge graph, bridging the gap between users and content.

Conclusion

Introducing our new connector marks a significant step in our mission to make the web smarter and more accessible. By transforming your knowledge graph into interactive conversations, we’re enhancing the user experience and paving the way for more effective SEO implementations.

We’re excited to see how our users will leverage this new tool to create more engaging, personalized, and optimized web experiences.

Stay tuned for more updates as we continue to innovate and push the boundaries of what’s possible by combining KGs and LLMs!

References and Credits 📚

Great content to read to learn more about LLM + KG:

- From classic to modern era: what is a dialogue by Teodora Petkova

- AI is like an Iceberg by Tony Seale (a survival guide)

- LLM Ontology-prompting for Knowledge Graph Extraction by Peter Lawrence

FAQs

How is LlamaIndex different from LangChain?

LlamaIndex and LangChain are two open-source libraries for building applications that leverage the power of large language models (LLMs). LlamaIndex provides a simple interface between LLMs and external data sources, while LangChain provides a framework for building and managing LLM-powered applications. In summary, LlamaIndex is primarily focused on being an intelligent storage and retrieval mechanism, while LangChain is designed to bring multiple tools together. That said, you can build agents using LlamaIndex alone, or use LlamaIndex as a tool within a LangChain agent.

Discover how this innovative technology is revolutionizing language processing and unlocking new possibilities in AI-driven applications. Explore this LangChain Guide

What orchestrators are available to interface a large language model?

Orchestration frameworks like LangChain and LlamaIndex help us abstract many of the details of prompt chaining, interfacing with external APIs and extracting knowledge from different sources. Other well-known options include Semantic Kernel by Microsoft, FlowiseAI, Auto-GPT, AgentGPT and BabyAGI. Similar solutions include JinaChat and the Italian Cheshire Cat AI.
