Autonomous AI Agents in SEO

Discover how AI Autonomous Agents are reshaping SEO for businesses in the rapidly evolving landscape of user interfaces.

SEO is changing, and it is set to become very different from what it used to be, primarily because the nature of the user interface is changing. Generative AI transforms, as its name implies, how we create, but also how we access, find and consume information.

This doesn’t mean that SEO is dead; quite the opposite. It is finally becoming something different. And there will no longer be a single unifying horizon, as we had with Google for the last 25 years (yes, happy B-day, Google!). New gatekeepers are emerging while Google is fighting back to retain its dominant position at various levels:

- User experience: with the introduction of the Search Generative Experience (SGE);

- Knowledge acquisition: with the advancements in robotics and autonomous driving, AI models are starting to experience the world as we do (also known as knowledge embodiment), gaining additional experience;

- Knowledge representation: as seen in Gemini, Google’s latest large foundation model, new abilities emerge when a model is trained on all media types simultaneously (cross-modality). At the architectural level, Google, starting with the Pathways vision behind PaLM, invests in sparse models (as opposed to dense architectures) that integrate more efficiently with external APIs, knowledge bases and tools.

In this article, I will introduce some examples of how AI Autonomous Agents can generate content or conduct simple SEO tasks, and discuss the need for a multi-sided approach when developing such systems.

It is no accident that human brains contain so many different and specialized brain centers.

Marvin Minsky, 1991

The Anatomy of an AI Autonomous Agent

What they are

An AI agent, as the paper “The Rise and Potential of Large Language Model Based Agents: A Survey” explains, is essentially made of:

- a brain, composed primarily, in our vision, of the language model paired with a knowledge graph acting as its long-term memory;

- a perception layer that interacts with the environment (the environment might include the content editor or the SEO orchestrating the task); and

- a set of actions that the agent can perform, i.e. the tooling it has, ranging from external APIs to the sub-graphs of its memory (all the products in the catalog or all the articles written by a given author).
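To make this anatomy more concrete, here is a minimal Python sketch, assuming the language model is any function that maps a prompt to text (stubbed in the example). The class and method names (`Agent`, `perceive`, `recall`, `think`, `act`) are hypothetical and do not come from any specific framework, and the knowledge graph is reduced to a plain list of triples.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


@dataclass
class Agent:
    # Brain: any function that maps a prompt to a completion (hypothetical stub)
    llm: Callable[[str], str]
    # Long-term memory: the knowledge graph, here a plain list of triples
    knowledge_graph: List[Triple] = field(default_factory=list)
    # Short-term memory: the turns of the current session
    session: List[str] = field(default_factory=list)
    # Actions: named tools the agent can call
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def perceive(self, message: str) -> None:
        """Perception: record what the editor or SEO says in this session."""
        self.session.append(message)

    def recall(self, subject: str) -> List[Triple]:
        """Return every stored triple whose subject matches."""
        return [t for t in self.knowledge_graph if t[0] == subject]

    def think(self, subject: str) -> str:
        """Let the model 'read the book' (the relevant facts) before predicting."""
        facts = "\n".join(" ".join(t) for t in self.recall(subject))
        conversation = "\n".join(self.session)
        return self.llm(f"Facts:\n{facts}\n\nConversation:\n{conversation}")

    def act(self, tool_name: str, argument: str) -> str:
        """Run one of the registered tools."""
        return self.tools[tool_name](argument)


if __name__ == "__main__":
    agent = Agent(llm=lambda prompt: prompt)  # echo stub instead of a real LLM
    agent.knowledge_graph.append(("ex:post-1", "schema:about", "ex:KnowledgeGraph"))
    agent.tools["word_count"] = lambda text: str(len(text.split()))
    agent.perceive("Summarize post 1")
    print(agent.think("ex:post-1"))
    print(agent.act("word_count", "knowledge graphs and LLMs"))
```

In a real system, `llm` would wrap a model API and `recall` would query a graph store instead of scanning a Python list.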

The Knowledge Graph is ‘the book’ the language model ‘reads’ before making its prediction; it acts as the persistent memory layer of the AI Agent. The short-term memory (the conversation turns in the chat session) can also be stored back in the KG, but this is less relevant, as its use remains limited to the user session.

The content the Agent generates and its keyword analyses are stored as triples using the reference ontology or schema vocabulary. This way, the system evolves and learns from its interactions with content editors, marketers, and SEOs within the same organization.
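As an illustration of storing an agent's output as triples, the sketch below serializes a generated article into N-Triples using schema.org terms (`schema:Article`, `schema:headline`, `schema:author`, `schema:keywords`). The function names and example IRIs are hypothetical; a production system would more likely use an RDF library and the organization's own ontology.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SCHEMA = "http://schema.org/"


def iri(value: str) -> str:
    """Wrap an IRI in angle brackets, as N-Triples requires."""
    return f"<{value}>"


def literal(value: str) -> str:
    """Quote a plain string literal, escaping backslashes, quotes and newlines."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'


def article_to_ntriples(article_iri, headline, author_iri, keywords):
    """Serialize a generated article as schema.org triples, one per line."""
    subject = iri(article_iri)
    lines = [
        f"{subject} {iri(RDF_TYPE)} {iri(SCHEMA + 'Article')} .",
        f"{subject} {iri(SCHEMA + 'headline')} {literal(headline)} .",
        f"{subject} {iri(SCHEMA + 'author')} {iri(author_iri)} .",
    ]
    # One schema:keywords triple per keyword produced by the agent's analysis
    for keyword in keywords:
        lines.append(f"{subject} {iri(SCHEMA + 'keywords')} {literal(keyword)} .")
    return "\n".join(lines)


if __name__ == "__main__":
    print(article_to_ntriples(
        "https://example.com/blog/kg-llm",  # hypothetical IRIs
        "Knowledge graphs meet LLMs",
        "https://example.com/author/jane",
        ["knowledge graph", "RAG"],
    ))
```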

The Evolution of AI Agents

From Symbolic to Deep Learning and Back

Let’s begin our journey in the early ’80s, when artificial intelligence was a developing field primarily rooted in symbolic agents. These agents represented a first attempt to model human cognition in binary code. They relied on symbolic logic, explicit rules, and semantic networks: extremely elegant, yet computationally limited and not truly scalable.

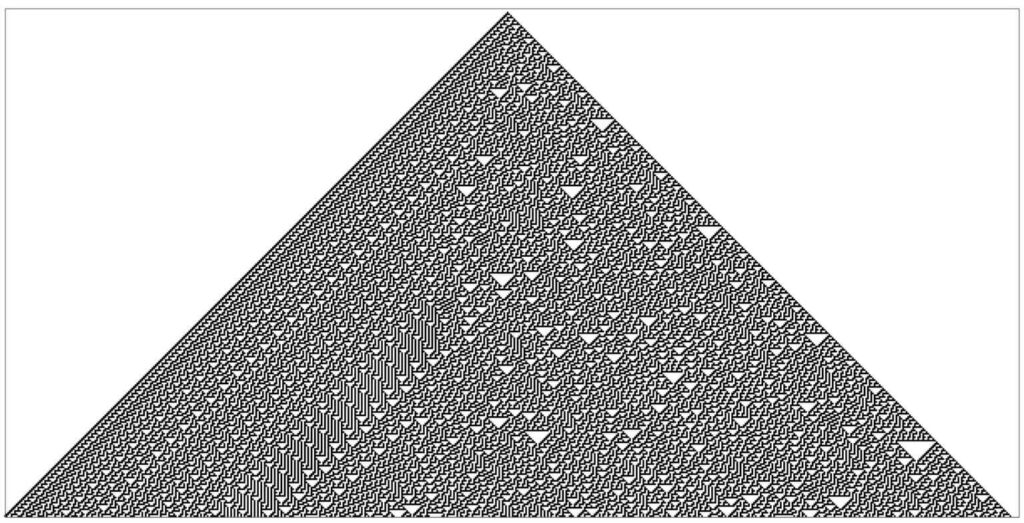

Moving forward, we witnessed the development of reactive agents. These agents took a different route by reacting directly to environmental stimuli. There were no internal models, ontologies, or complex reasoning; they operated much like Rule 30, the cellular automaton introduced by Stephen Wolfram: a set of simple rules producing complex behavior.
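Rule 30 is easy to reproduce, and doing so shows what "simple rules, complex behavior" means in practice: each cell's next state depends only on itself and its two neighbours, looked up in the 8-bit table encoded by the number 30. This is a plain Python sketch that assumes fixed zero-valued borders, whereas Wolfram's pictures use an unbounded row.

```python
from typing import List


def step(cells: List[int], rule: int = 30) -> List[int]:
    """One generation of an elementary cellular automaton (cells outside the row count as 0)."""
    padded = [0] + cells + [0]
    # The neighbourhood (left, centre, right) forms a 3-bit index into the rule number
    return [
        (rule >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]


def run(width: int = 31, generations: int = 16, rule: int = 30) -> List[List[int]]:
    """Start from a single live cell in the middle and record every generation."""
    row = [0] * width
    row[width // 2] = 1
    history = [row]
    for _ in range(generations - 1):
        row = step(row, rule)
        history.append(row)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if cell else "." for cell in row))
```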

Now, let’s move on to reinforcement learning-based agents, with AlphaGo being the most beautiful example. These agents found a harmonious blend of experience and optimisation: they interact with their environments and learn optimal behaviours guided by a reward system. These agents can learn intricate policies from high-dimensional inputs without human intervention.

Fast-forwarding to today, we have arrived at the era of LLM-based agents.

Reading the insightful paper “The Rise and Potential of Large Language Model Based Agents: A Survey” allows us to review how we can interact with these agents, their anatomy and the implications for the SEO sector.

Large Language Models as Reasoning Engines

The Brain of an AI Agent

Since the mass introduction of ChatGPT in late November 2022, a new functional layer has been added to the traditional web application stack: a layer that is radically transforming the Internet, giving applications and services the ability to talk and, somehow, reason in a way similar to humans.

In a few months, we have transitioned from leveraging basic language models to instruction-based language models to AI agents (Auto-GPT, BabyAGI and now MetaGPT). Each step builds on a revolutionary sequence-to-sequence neural network architecture known as the transformer and its attention mechanism (a mathematical wonder that helps models keep their focus on the tokens that matter).

This transition happened as we realized that recognising a pattern in isolation is not the same as interpreting the same pattern when it is a component of a more complex system. In other words, a Large Language Model doesn’t know what it knows. While it can help close knowledge gaps, it is, by design, prone to hallucination and will always remain unreliable on its own.

Training with instructions has drastically improved things: rather than predicting similar sentences (What is the capital of Italy? >> What’s Italy’s capital? What is the centre of power in Italy?), models have learned how to answer the question (What is the capital of Italy? >> The capital of Italy is Rome). Yet there is still no solution to their fundamental unreliability, as knowledge representation remains a problem. There is no “single best way” to represent a given knowledge domain: each area requires its own level of connection density and its own rule-based ontology.
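The attention mechanism mentioned above can be illustrated with a minimal, single-head sketch of scaled dot-product attention, softmax(Q·K^T / sqrt(d_k))·V, as defined in the original transformer paper. It uses plain Python lists and deliberately omits learned projections, masking and multiple heads, so it shows the idea rather than how production models compute it.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # How well this query matches each key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)  # attention weights over the tokens, summing to 1
        # Weighted average of the value vectors
        out = [sum(wi * v[j] for wi, v in zip(w, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy tokens
    values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    out, w = attention([[2.0, 0.0]], keys, values)
    print([round(x, 3) for x in w[0]])
```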

Peter and Juan are apostles. The apostles are twelve. Are Peter and Juan twelve?

Giuseppe Peano

The secret of what something means lies in how it connects to other things we know. That’s why it’s almost always wrong to seek the real meaning of anything. A thing with just one meaning has scarcely any meaning at all.

Marvin Minsky, “The Society of Mind”, 1987.
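Peano's puzzle is a knowledge-representation problem: "are" expresses membership in the first sentence and a property of the whole group in the second. The hypothetical sketch below shows how keeping member-level facts and collection-level facts apart, as an ontology does, prevents the wrong inference, while a naive lookup that merges them reproduces it. All names and facts are illustrative.

```python
# Membership: which class each individual belongs to
INSTANCE_OF = {"Peter": "Apostle", "Juan": "Apostle"}
# Properties every member inherits (true of each apostle individually)
MEMBER_FACTS = {("Apostle", "role"): "disciple"}
# Properties of the collection as a whole (true of the group, not of any member)
COLLECTION_FACTS = {("Apostle", "memberCount"): 12}


def naive_answer(entity, prop):
    """Merges both kinds of facts, so members inherit the group's size."""
    cls = INSTANCE_OF.get(entity, entity)
    merged = {**MEMBER_FACTS, **COLLECTION_FACTS}
    return merged.get((cls, prop))


def typed_answer(entity, prop):
    """Members inherit only member-level facts; collection facts stay with the class."""
    if entity in INSTANCE_OF:
        return MEMBER_FACTS.get((INSTANCE_OF[entity], prop))
    return COLLECTION_FACTS.get((entity, prop))


if __name__ == "__main__":
    print("Naive: Peter's memberCount is", naive_answer("Peter", "memberCount"))
    print("Typed: Peter's memberCount is", typed_answer("Peter", "memberCount"))
    print("Typed: the Apostles number", typed_answer("Apostle", "memberCount"))
```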

An Agent implements what Minsky suggested in the early days of AI: the ability to combine “the expressiveness and procedural versatility of symbolic systems with the fuzziness and adaptiveness of connectionist representations”. Moreover, an Agent becomes the connecting tissue that blends existing computational functions and web APIs. It only needs to understand the tools (agencies) it can use and the mission of its task.
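The idea that an Agent only needs to understand its tools and its mission can be sketched as a tool catalog whose natural-language descriptions are placed in the prompt, plus a dispatcher that runs whichever tool the model picks. This is a framework-free illustration under assumptions: the `Tool` class, the prompt wording and the `<tool name>: <input>` reply format are hypothetical, and orchestrators such as LangChain provide their own equivalents.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    name: str
    description: str           # what the model reads to decide when to use it
    run: Callable[[str], str]  # the underlying function or web API call


def build_prompt(mission: str, tools: Dict[str, Tool]) -> str:
    """Describe the mission and every available tool in natural language."""
    catalog = "\n".join(f"- {t.name}: {t.description}" for t in tools.values())
    return (
        f"Mission: {mission}\n"
        f"Available tools:\n{catalog}\n"
        "Reply with exactly one line in the form '<tool name>: <input>'."
    )


def dispatch(reply: str, tools: Dict[str, Tool]) -> str:
    """Parse the model's reply and run the chosen tool."""
    name, sep, argument = reply.partition(":")
    tool = tools.get(name.strip())
    if not sep or tool is None:
        raise ValueError(f"Cannot dispatch reply: {reply!r}")
    return tool.run(argument.strip())


if __name__ == "__main__":
    toolbox = {
        "word_count": Tool("word_count", "Counts the words in a text.",
                           lambda text: str(len(text.split()))),
    }
    print(build_prompt("Check the length of a meta description.", toolbox))
    fake_reply = "word_count: Discover how AI agents reshape SEO"  # stands in for an LLM answer
    print(dispatch(fake_reply, toolbox))
```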

GraphQL as a Data Agency

Unless a distributed system has enough ability to crystallize its knowledge into lucid representations of its new subconcepts and substructures, its ability to learn will eventually slow, and it will be unable to solve problems beyond a certain degree of complexity.

Marvin Minsky, 1991

GraphQL is a query language for APIs, coupled with a runtime that executes those queries on the server; it helps us extract exactly the data we need from a knowledge base, or add new data to it.
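Most GraphQL services accept an operation over HTTP as a POST request whose JSON body carries `query` and `variables`, and they answer with a JSON object containing `data` and, when something fails, `errors`. The sketch below builds and sends such a request with the Python standard library. The endpoint, the `articles` field and the `Authorization: Key <key>` header format are assumptions for illustration; check your provider's schema and authentication scheme.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

# Hypothetical query: the `articles` field and its arguments depend on your schema
ARTICLES_QUERY = """
query Articles($limit: Int) {
  articles(limit: $limit) {
    headline
    url
  }
}
"""


def build_graphql_request(endpoint: str, query: str,
                          variables: Optional[Dict[str, Any]] = None,
                          api_key: Optional[str] = None) -> urllib.request.Request:
    """Package a GraphQL operation as an HTTP POST with a JSON body."""
    body = json.dumps({"query": query, "variables": variables or {}}).encode("utf-8")
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    if api_key:
        # ASSUMPTION: the header name and "Key" prefix vary between providers
        headers["Authorization"] = f"Key {api_key}"
    return urllib.request.Request(endpoint, data=body, headers=headers, method="POST")


def run_graphql(request: urllib.request.Request, timeout: float = 30.0) -> Dict[str, Any]:
    """Send the request and return the `data` member, raising on GraphQL errors."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.loads(response.read().decode("utf-8"))
    if payload.get("errors"):
        raise RuntimeError(payload["errors"])
    return payload.get("data") or {}
```

Calling `run_graphql(build_graphql_request("https://example.com/graphql", ARTICLES_QUERY, {"limit": 5}, "YOUR_KEY"))` would return the `data` object, provided such an endpoint exists and exposes that field.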

Building a Data Agent that “talks” with our GraphQL endpoint

Let’s start with a first example by connecting a simple agent to a knowledge graph.

In this ultra-simple implementation, our data agent can:

- translate natural language into a GraphQL query,

- execute the query,

- analyze the results and provide an output as indicated by its prompt.
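The three steps above map naturally onto a small function. In this sketch, which is an assumption about how such an agent could be wired rather than the implementation used here, the language model and the GraphQL executor are passed in as plain callables, so the control flow can be tested with stubs. The function name and the prompt wording are hypothetical.

```python
import json
from typing import Any, Callable, Dict


def data_agent(question: str,
               llm: Callable[[str], str],
               execute: Callable[[str], Dict[str, Any]],
               schema_hint: str) -> str:
    """Hypothetical three-step data agent: translate, execute, analyze."""
    # 1. Translate natural language into a GraphQL query
    query = llm(
        f"Schema:\n{schema_hint}\n\n"
        f"Write a GraphQL query that answers: {question}\n"
        "Return only the query."
    )
    # 2. Execute the query against the knowledge graph endpoint
    data = execute(query)
    # 3. Analyze the results and answer as the prompt instructs
    return llm(
        f"Question: {question}\n"
        f"Data: {json.dumps(data)}\n"
        "Answer in one short paragraph, using only the data above."
    )
```

In practice, `execute` could wrap an HTTP call to the GraphQL endpoint and `llm` a chat-completion API.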

If they find a parrot who could answer to everything, I would claim it to be an intelligent being without hesitation.

Denis Diderot, 1875

Autonomous AI Agents for Content Creation

The new typewriter

Given the fragmented nature of the audience and the need for trustworthy relationships between authors and readers, content generated by advanced language models must take several factors into account:

- Syntax: This refers to the grammatical structure of the text, essentially the backbone of any language. Syntax is governed by rules dictating how words are assembled into sentences. Advanced language models learn these syntactic rules by breaking down terms into smaller units called ‘tokens’ and identifying patterns in large text datasets.

- Semantics: This is where syntax and meaning intersect. In language, a word is more than just a string of characters; it represents a point in a multidimensional space of concepts. In the field of Natural Language Processing (NLP), this is modelled through techniques like embeddings and graph representations.

- Praxis: This involves the real-world application and use of language in social settings. Praxis adapts over time and can be influenced by altering the prompts given to language models, thereby changing the tone and context of the generated text. We use structured data and the knowledge graph to create the context for the autonomous agent.

By understanding these elements, we can produce grammatically sound, meaningful, and contextually appropriate content. These layers characterize human language in a framework introduced by C.W. Morris in 1938, where the third layer is called pragmatics.
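The idea of a word as a point in a multidimensional space can be illustrated with cosine similarity, the measure commonly used to compare embeddings. The three-dimensional vectors below are made up for the example; real embedding models produce hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List

# Made-up 3-dimensional vectors; real embeddings are much larger
EMBEDDINGS: Dict[str, List[float]] = {
    "beer": [0.9, 0.1, 0.0],
    "lager": [0.85, 0.15, 0.05],
    "carousel": [0.1, 0.9, 0.2],
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(word: str) -> str:
    """The other word whose vector points in the most similar direction."""
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine(EMBEDDINGS[word], EMBEDDINGS[w]))


if __name__ == "__main__":
    for word in EMBEDDINGS:
        print(word, "->", nearest(word))
```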

Introducing the Graph Retrieval-Augmented Generator (G-RAG): an Agent that writes content like you do

Let’s now move on to a G-RAG Agent. Retrieval-Augmented Generation (RAG) is a technique that combines a retriever and a generator to improve the accuracy of the prediction.

The retriever is responsible for searching through an external source (like a knowledge graph or a website’s content) and finding relevant information. The generator then uses this information to produce a coherent and contextually accurate response.

RAG enhances the performance of Large Language Models (LLMs) by making them more context-aware and capable of generating more accurate and relevant responses.
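A bare-bones retrieve-then-generate loop looks like the sketch below. To keep it self-contained, it swaps the vector or graph search a real RAG system would use for simple keyword overlap, and it stops at building the prompt that would be sent to the generator; the function names and prompt wording are illustrative assumptions.

```python
import re
from collections import Counter
from typing import Dict, List

Doc = Dict[str, str]  # expects "text" and "url" keys


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: List[Doc], k: int = 2) -> List[Doc]:
    """Retriever: rank documents by keyword overlap (a stand-in for vector search)."""
    q = Counter(tokenize(query))
    scored = []
    for doc in documents:
        d = Counter(tokenize(doc["text"]))
        score = sum(min(count, d[token]) for token, count in q.items())
        if score > 0:
            scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_generation_prompt(query: str, passages: List[Doc]) -> str:
    """Generator input: the question grounded in numbered, citable sources."""
    context = "\n".join(
        f"[{i}] {p['text']} (source: {p['url']})" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"{context}\n\nQuestion: {query}"
    )
```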

The continuous nature of deep neural networks (like those used by GPT models) makes an AI Agent capable of inductively analyzing large amounts of data, extracting patterns and predicting the upcoming sequence. When designing these tools, though, we have to ask ourselves:

- What data shall we feed into these systems?

- What information creates the dialogic interaction (or the content) we want?

- How can we steer a transformer-based language model to provide the correct answer (what does the prompt look like)?

- What tools does the Agent need to have in its toolbox?

- How do we describe these tools so the Agent can use them effectively?

The discrete nature of Knowledge Graphs, designed to organize a wealth of data by turning facts into triples (subject-predicate-object) and information into relationships, makes them a strategic asset for building such applications. Still, we cannot rely solely on a symbol-oriented solution, nor solely on language models.

When developing AI Agents for SEO, we realize there is no “right way” and that the time has come to build systems that combine diverse components based on the specific task.

In an emerging trend, orchestrator frameworks like LangChain and LlamaIndex and graph providers like NebulaGraph, TigerGraph and WordLift, among others, are pioneering a new paradigm: using knowledge graphs in the retrieval process. Semantically rich data, modeled with an ontology, is used to train the language model, build the retrieval system, and guardrail the final generation.
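Using a knowledge graph in the retrieval step can be as simple as collecting the facts around the entities mentioned in a request and turning them into context for the prompt. The sketch below does a breadth-first walk of up to `hops` edges from some seed entities over an in-memory list of triples; the data and function names are hypothetical, and a real deployment would query a graph database instead.

```python
from collections import deque
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]


def subgraph(triples: List[Triple], seeds: Iterable[str], hops: int = 1) -> List[Triple]:
    """Collect the facts within `hops` edges of the seed entities (edges followed both ways)."""
    adjacency = {}
    for triple in triples:
        subject, _, obj = triple
        adjacency.setdefault(subject, []).append(triple)
        adjacency.setdefault(obj, []).append(triple)

    seed_list = list(dict.fromkeys(seeds))  # de-duplicate, keep order
    seen_nodes = set(seed_list)
    frontier = deque((node, 0) for node in seed_list)
    facts, seen_facts = [], set()
    while frontier:
        node, depth = frontier.popleft()
        if depth >= hops:
            continue
        for triple in adjacency.get(node, []):
            if triple not in seen_facts:
                seen_facts.add(triple)
                facts.append(triple)
            for neighbour in (triple[0], triple[2]):
                if neighbour not in seen_nodes:
                    seen_nodes.add(neighbour)
                    frontier.append((neighbour, depth + 1))
    return facts


def facts_to_context(facts: List[Triple]) -> str:
    """Verbalize triples so they can be pasted into the prompt."""
    return "\n".join(f"{s} {p} {o}." for s, p, o in facts)
```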

Structured Data: a tool for the Agent to write SEO-friendly content using the content of your website

While it may be tempting to use advanced language models like ChatGPT or GPT-4 (with sophisticated prompts) for content creation, doing so is akin to asking a random person on the street how to design an SEO campaign for an international brand: common sense may yield valuable insights, but the overall strategy will need to be revised. Similarly to how Google uses structured data to interpret the content of webpages, a Graph RAG (Retrieval-Augmented Generation based on a Knowledge Graph) can use structured metadata to build an index that serves as its retrieval mechanism. The agent is then instructed to find the relevant documents to feed into the prompt.
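Building such an index starts with reading the structured data already published on the site. As a rough sketch, assuming the markup is JSON-LD embedded in `<script type="application/ld+json">` tags, the standard-library parser below collects the JSON-LD items on a page, flattens any `@graph`, and keeps those typed as `Article`. The selected fields are an assumption, and subtypes such as `BlogPosting` or prefixed types are not handled here.

```python
import json
from html.parser import HTMLParser
from typing import Any, Dict, List


class JsonLdExtractor(HTMLParser):
    """Collect the JSON-LD items embedded in <script type="application/ld+json"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self._inside = False
        self._chunks: List[str] = []
        self.items: List[Any] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside, self._chunks = True, []

    def handle_data(self, data):
        if self._inside:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self._inside = False
            try:
                parsed = json.loads("".join(self._chunks))
            except json.JSONDecodeError:
                return  # skip malformed blocks instead of failing the crawl
            for item in parsed if isinstance(parsed, list) else [parsed]:
                if isinstance(item, dict):
                    # Flatten {"@graph": [...]} containers into individual items
                    self.items.extend(item.get("@graph", [item]))


def articles_from_html(html: str) -> List[Dict[str, Any]]:
    """Return index-ready records for items typed as schema.org Article."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    records = []
    for item in parser.items:
        if not isinstance(item, dict):
            continue
        types = item.get("@type", [])
        types = types if isinstance(types, list) else [types]
        if "Article" in types:
            records.append({
                "headline": item.get("headline", ""),
                "description": item.get("description", ""),
                "url": item.get("url", ""),
            })
    return records
```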

Generate Content by using Structured Data

Much like the process we outlined for fine-tuning GPT-3.5, structured data allows us to parse a website’s content and identify the key attributes needed to build an efficient Retrieval-Augmented Generation (RAG) pipeline for content creation. Below is a practical example.

We employ WordLift Reader to generate one or more vector-based indices for our articles, specifically those marked as schema:Article. We then configure the fields that will be indexed (known as text_fields) and designate the fields to be used as metadata (known as metadata_fields). The reader automatically crawls the web page when its URL is added to the list of text_fields. Each index thus created can serve as a tool for our AI Agent.
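The split between `text_fields` and `metadata_fields` can be illustrated without the reader itself. The sketch below is not the WordLift Reader's API: the `CONFIG` dictionary and the `to_documents` function are hypothetical, and the automatic crawling of URLs listed in `text_fields` is left out. It only shows how each record becomes a document whose text gets embedded and whose metadata travels alongside it.

```python
from typing import Any, Dict, List

# Hypothetical settings mirroring the two options described above
CONFIG: Dict[str, List[str]] = {
    "text_fields": ["headline", "abstract"],  # embedded and searched
    "metadata_fields": ["url", "author"],     # carried along, e.g. for citations
}


def to_documents(records: List[Dict[str, Any]],
                 config: Dict[str, List[str]]) -> List[Dict[str, Any]]:
    """Turn schema:Article records into {text, metadata} documents for a vector index."""
    documents = []
    for record in records:
        text = "\n".join(str(record[f]) for f in config["text_fields"] if record.get(f))
        metadata = {f: record[f] for f in config["metadata_fields"] if f in record}
        documents.append({"text": text, "metadata": metadata})
    return documents
```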

Let’s now generate a brief paragraph on the fusion between knowledge graphs and LLMs using the content from this blog. The retriever has identified one of our previous blog posts, and the LLM (a fine-tuned version of GPT-3.5 Turbo) generates the expected paragraph. We use the detected sources (previously written blog posts) to steer the completion. Sources also represent a valuable opportunity:

- To explain why the LLM is writing what it is writing (explainability builds trust with both the reader and eventually Google),

- To add relevant internal links that will guide the reader further in exploring more content from the website.
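Turning the retrieved sources into visible citations and internal links is mostly a formatting step. A minimal sketch, assuming each source is a dictionary with `url` and `title` keys (a hypothetical shape) and that the output is HTML:

```python
from html import escape
from typing import Dict, List


def with_sources(paragraph: str, sources: List[Dict[str, str]]) -> str:
    """Append the retrieved sources as internal links under the generated paragraph."""
    html = f"<p>{escape(paragraph)}</p>"
    unique: Dict[str, Dict[str, str]] = {}
    for source in sources:
        unique.setdefault(source["url"], source)  # keep the first source per URL
    if unique:
        links = "\n".join(
            f'  <li><a href="{escape(s["url"])}">{escape(s["title"])}</a></li>'
            for s in unique.values()
        )
        html += f"\n<p>Sources:</p>\n<ul>\n{links}\n</ul>"
    return html
```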

An Autonomous AI Agent for Entity Analysis and Content Revamp

Your new Semantic SEO Agent

We are developing an agent designed to analyze search rankings and conduct entity analysis on existing web pages.

The following prompt describes the core function of this agent: it extracts entities from various web pages, identifying potential gaps in content.

When provided with a specific search query, the agent will determine which entities are essential for our web page to achieve a higher ranking.

As illustrated below, the agent uses the WordLift Content Analysis API to extract entities from a webpage on merkur.de (an established news outlet in Bavaria). Behind the scenes, the agent communicates with the API using the WordLift key. From the retrieved list of entities, it then homes in on those pertinent to the target query “Oktoberfest 2023”. This is terrific, as false positives and entities that don’t align with the search intent are discarded without human intervention.

The agent keeps a real-time memory of the conversation, enabling a seamless continuation. This allows me to instruct the agent to conduct another analysis, comparing the main entities with those of a competing URL. The agent then identifies and presents the gap, highlighting entities absent in the initial URL but present in the second one (Carousel and Lederhose seem relevant to me).
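Once both pages have been analyzed, the gap itself is a set difference between the two entity lists, optionally restricted to the entities judged relevant to the query. The helper below is a hypothetical sketch, not part of the WordLift APIs, and the example entity lists are made up for illustration.

```python
from typing import Iterable, List, Optional


def entity_gap(ours: Iterable[str], theirs: Iterable[str],
               relevant: Optional[Iterable[str]] = None) -> List[str]:
    """Entities the competing page mentions that ours does not (case-insensitive)."""
    have = {e.casefold() for e in ours}
    keep = None if relevant is None else {e.casefold() for e in relevant}
    gap, seen = [], set()
    for entity in theirs:
        key = entity.casefold()
        if key in have or key in seen:
            continue
        if keep is not None and key not in keep:
            continue  # discard entities judged off-intent for the target query
        seen.add(key)
        gap.append(entity)
    return gap


if __name__ == "__main__":
    # Made-up entity lists, for illustration only
    ours = ["Oktoberfest", "Munich", "Beer"]
    theirs = ["Oktoberfest", "Carousel", "Lederhose", "Parking"]
    print(entity_gap(ours, theirs, relevant=["Carousel", "Lederhose"]))
```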

In the following interaction, the agent uses the WordLift Content Expansion API. This API interprets the content of a webpage and augments it by referencing desired entities for expansion. I’m asking the agent to enhance merkur.de’s Oktoberfest webpage by incorporating the two entities identified in our previous exchange (Carousel and Lederhose).

The code is still an early draft, but you can look at it to understand how things could work. Add your WordLift key (WL_KEY) and OpenAI key (_OPENAI_KEY).
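Since the code expects a WordLift key and an OpenAI key, one common pattern is to read them from environment variables named after the placeholders mentioned above and fail early when they are missing. This is an assumption about the setup, not necessarily how the draft code loads them.

```python
import os
from typing import Mapping, Tuple

REQUIRED_KEYS = ("WL_KEY", "_OPENAI_KEY")  # placeholder names used by the draft code


def load_keys(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Read the WordLift and OpenAI keys, failing early with a clear message."""
    missing = [name for name in REQUIRED_KEYS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variable(s): " + ", ".join(missing))
    return env["WL_KEY"], env["_OPENAI_KEY"]
```

After exporting both variables in the shell, `wl_key, openai_key = load_keys()` returns them as a pair.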

Conclusions and Future Work

As WordLift embarks on its mission to integrate Agents for SEO into its platform, we’ve gleaned several pivotal insights. We’re in an era where neuro-symbolic AI takes centre stage; the fusion of logic and knowledge representation markedly enhances LLM accuracy. The combined strength of a data fabric and a Knowledge Graph (KG) proves essential for producing distinct content.

Allocating substantial time to set up agent guardrails is non-negotiable. For most stakeholders in an AI initiative, explainability transcends being a mere luxury—it’s a fundamental requirement. Here, KGs emerge as instrumental. The advent of a G-RAG elevates the dependability and credibility of language applications.

Yet, as we progress, we must acknowledge the technology’s imperfections. We must be highly cautious of the potential security vulnerabilities when launching AI agents on the open web.

That said, I firmly believe that autonomous AI agents will help us augment:

- the mapping of search intents to personas

- the evaluation of many content assets (is this article helpful?)

- the fact-checking as well as the ability to keep content updated. In fact, WordLift’s recent article on AI-powered fact-checking delves into how AI can significantly improve the accuracy and timeliness of content verification.

- the audit and interpretation of technical SEO aspects (check our early structured data audit agent to get an idea)

- the prediction of both traffic patterns and user behaviors.

Browse the presentation on AUTONOMOUS AI AGENTS for SEO that Andrea Volpini gave at SMXL in Milan.

Additional Resources

While I have primarily been using LangChain, LlamaIndex, or the OpenAI APIs directly, non-coders have multiple ways to venture into AI Agents.

Here is my list of tools from around the Web:

- CognosysAI: This powerful web-based artificial intelligence agent aims at improving productivity and simplifying complex tasks. It can generate task results in various formats (code, tables, or text).

- Reworkd.ai – AgentGPT: Allows you to assemble, configure, and deploy autonomous AI Agents in your browser.

- aomni: An AI agent that crawls the web looking for information on any topic you choose, with a particular focus on sales automation.

- Toliman AI: Another viable option for online research.