Master Topical SEO: The Science, Engineering and Research Behind Topic Clusters

Design your Topic Cluster SEO strategy using the WebKnoGraph framework to leverage the potential of interconnected, marketable content.

As this post is quite extensive and packed with information, we’ve organized it into smaller, more digestible chapters for your convenience. Whether you prefer to start from the beginning and read through to the end or dive straight into the sections that pique your interest the most, feel free to navigate as you please. Enjoy exploring!

TABLE OF CONTENTS:

- Article outline

- Who can benefit from debunking the case for topic cluster SEO?

- WebKnoGraph framework: executive summary of the SEO link graph engineering design solution

- How mixing SEO, computer science, engineering and AI backgrounds helps

- Why do contextual internal linking and topical SEO matter?

- Is your SEO foundation resilient?

- Innovative mindsets change the world for the better

- Managing expectations

- Focus on first-party SEO data

- How SEO and business models help each other for long-term success

- A website is a directed cyclic graph and a subgraph of World Wide Web (WWW)

- Applied SEO: Algorithm System Design for Optimal Internal Linking

- PageRank and CheiRank explained

- Modification of the TIPR model for SEO

- How to define conversion for different industries

- Visualizing the modified TIPR model for SEO

- A debate and an open question on picking independent variables in the weight formula

- Should I perform automatic, semi-automatic or manual graph link restructuring?

- How should I optimize my link graph after identifying super-nodes for SEO?

- The interplay between a link graph and a knowledge graph optimization

- What challenges are presented by this approach and algorithmic framework?

- How does WordLift solve link graph restructuring and internal linking architectural problems?

Article Outline

In this article, we’ll outline a strategy to optimize your internal link network, enhancing your ability to streamline SEO efforts and direct attention toward crucial money pages on your website by using the WebKnoGraph framework.

Who can Benefit From Debunking the Case for Topic Cluster SEO?

Ideally, you’re familiar with link graphs and comfortable joining data from multiple sources. You understand and experiment with internal linking optimization and are willing to explore computer science and engineering concepts to improve further.

WebKnoGraph Framework: Executive Summary of the SEO Link Graph Engineering Design Solution

The finalized algorithm gathers website data covering crawlability, linking (using PageRank and CheiRank), and conversions, and merges them into a unified metric through a weighted formula. However, it’s crucial to review the specifics and nuances discussed in the next sections. In the subsequent phase, we use this weighted formula to compute the significance of each node relative to its neighborhood. Ultimately, this data is used to adjust the link graph, optimizing the search experience and topical authority of a particular website. Engineering the graph topology with the WebKnoGraph framework is the focus of this article.

How Mixing SEO, Computer Science, Engineering and AI Backgrounds Helps

I’ve been involved with SEO since 2016, having been part of the computer science and engineering community since 2012, which adds up to about a decade of experience. Throughout this time, I’ve engaged both within and outside of WordLift: I’ve worked agency-side on two occasions, spent two stints in-house, and freelanced for over 4 years. My endeavors have spanned various industries, primarily focusing on small and medium-sized businesses (SMEs) poised for significant growth.

My tenure at WordLift and previous engagements with other stakeholders (albeit to a limited extent) taught me the importance of championing users’ needs and speaking candidly, even when it’s uncomfortable, directly to top-level executives. We also emphasized collaborating across different skill sets to tackle problem-solving, as our clients’ demands often required such a multifaceted approach. Ultimately, we aimed to deliver top-notch work to our clients while ensuring mutual benefit.

I’ve read numerous articles on topic cluster SEO, yet I found that they lack a detailed exploration of the scientific and engineering principles underpinning the concept. The engineers at Omio and Kevin Indig, with his TIPR model, came closest to what I aimed to achieve, but some nuances and aspects still need further refinement and exploration.

Topic cluster SEO is essential for small and medium-sized businesses (SMEs) aiming to expand their content strategies via programmatic SEO. It’s crucial to lay a solid and well-thought-out foundation beforehand. The approach I will outline is tailored for SEO-savvy ventures with the resources to develop and refine this methodology internally. This is precisely why I’m open-sourcing my idea: to foster collaborative learning and encourage contributions for an increasingly knowledgeable SEO community.

Why Do Contextual Internal Linking and Topical SEO Matter?

I have a strong passion for SEO, but what excites me even more is creating a sense of predictability in an industry that’s often unpredictable. I firmly advocate for taking complete control and ownership of our strategies, and one area that holds significant importance is internal linking. By structuring our websites logically and making them user-friendly, we empower people to easily discover what they’re seeking and swiftly navigate to our key pages.

The synergy between Topical SEO and internal linking is pivotal in achieving this goal. However, I won’t delve into the general approach and definitions of topical hubs, also known as topic clusters or authority hubs. I believe there are numerous excellent articles out there where my SEO and engineering colleagues have done an outstanding job of covering these topics. Instead, I’d like to share some case studies and valuable links that I personally found to be most enlightening:

- TheGray company’s How to Level Up Internal Linking for SEO, UX, & Conversion

- Adam Gent’s collection of case studies on internal linking

- Cyrus Shepard’s case study of 23 Million Internal Links

- MarketBrew.AI’s blogpost on Leveraging the Power of Link Graphs to Improve Your SEO Strategy

- Roger Montti’s Reduced Link Graph – A Way to Rank Links

- There are numerous others, and each one of them is truly fantastic!

Is your SEO Foundation Resilient?

Internal linking is super important for high-growth companies and SEO teams that aim to scale their SEO game. However, bad foundations crack after some time, so the right moment to ask yourself whether you’re ready to scale is now. Should you revise your current implementation? Was the current implementation mindfully developed with SEO in mind?

… bad foundations crack after some time

Solid Foundations are Crucial

When discussing data quality, building effective data pipelines, or constructing link graphs for SEO purposes, the significance of a robust foundation cannot be overstated. Scaling with programmatic SEO is only possible with such a sturdy base. The critical question is: what constitutes a solid foundational link graph structure?

Let’s set this aside momentarily (I’ll address it comprehensively below, I assure you) and instead concentrate on the gap between what some companies do and what others do.

Innovative Mindsets Change the World for the Better

From my experience, we don’t require a time-travel machine to visualize the past or foresee the future. Some companies have reached such a high level of maturity in SEO that they’re a decade or more ahead of their counterparts. Conversely, others are lagging, stuck in a state akin to wishing “Happy New Year 2010.”

As a holistic marketing leader, your role isn’t solely about strategizing for present trends. It’s about anticipating the forthcoming two decades and structuring your roadmap to align with these future needs. Your responsibility lies in envisioning what’s next and preparing your strategies accordingly.

Let’s embark on this journey together and delve deeper into every aspect and intricate detail of topic cluster SEO.

Managing Expectations

As I’m prioritizing the engineering aspect of topic cluster SEO, specifically internal linking and link engineering, I’ll proceed under the assumption that the following prerequisites have been met:

- This article forms the basis for further discussion and can be adapted through user feedback.

- Topic cluster SEO represents an internal linking challenge. To streamline and concentrate on the central issue of internal linking, a data lake or warehouse is available for merging SEO and UX data from various 1st party data sources.

- Link issues are fixed beforehand: 4xx and 5xx errors are resolved, and the sitemap and robots.txt agree on which URLs are blocked. I also presume JavaScript links can be detected and crawled appropriately.

- Your topic cluster SEO project is already prioritized on your company’s roadmap.

Focus on First-party SEO Data

I’ve reiterated this point several times, yet I can’t stress enough how crucial it is to prioritize the data you own or that Google provides directly to build around it. First-party data is a cornerstone in every SEO strategy, and many SEO practitioners fail to leverage it effectively before falling prey to the allure of the latest SEO software.

How SEO and Business Models Help Each Other for Long-Term Success

A successful solution, whether fully or partially implemented, hinges on understanding from the start what constitutes an effective internal linking network. Kevin Indig, in his fantastic SEO piece, wrote that:

“The best internal linking structure depends on your business model.”

Kevin Indig

One type of site leads all users to one or a few landing pages. The other has users sign up on almost every page. Therefore, the first phase of the problem is to understand what internal linking stands for, recognizing that internal links aid in discovering all pages while also functioning as supplementary ranking signals.

We’ve learned that comprehending the ideal structure of a high-quality internal linking network hinges on the specific business model. To recap Kevin’s perspective, there are two kinds of business models regarding link graphs and networks:

- Centralized: This model revolves around key pages that significantly drive profit and user journeys. The entire architecture is centered around these pages because most signup options and calls-to-action (CTAs) are concentrated there.

- Decentralized: CTAs and signups are evenly spread across all page templates in this model. Therefore, it becomes crucial to meticulously design the flow of PageRank and CheiRank across the network since conversions are happening everywhere. But are all pages genuinely created equal?

There are even more nuances than this. The key takeaway here is that an SEO expert needs to function strategically, similar to how SEM experts operate today. It involves adjusting the priorities by bridging the gap between the online audience and the specific requirements of the organization. Depending on the situation, the SEO expert can determine whether it’s more beneficial to focus on link depth and indexation or prioritize revenue generation on a bi-weekly or monthly basis. The platform will then handle the remaining tasks by sorting through vast amounts of data to determine the best way to organize the links.

Let’s add some more important factors that we’ll need to juggle:

- Winning and modern websites follow the “topic over keywords” principle. The message is clear: semantic relatedness is essential!

- We also know that frequent crawler visits in the log files indicate that a page is important. In other words, crawl frequency is correlated with higher page authority.

How do we engineer a good foundation for user-centric journeys, considering topical contextuality & relevance, quality link distribution, and balancing business objectives like conversions?

Let’s remind ourselves of this picture once again:

… bad foundations crack after some time

It’s important to remain positive: applying a computer science and engineering approach to a new field, such as SEO, can provide us with a potential solution. The lesson is to keep your SEO house clean:

… but a solid SEO can be built on a resilient foundation

Now, let’s go back to the original problem: how to engineer a good foundation, assuming we have the first-party data? My mum always used to say that “a problem well-stated is a problem half-solved” (attributed to Charles Kettering, who was head of research at General Motors).

It’s all about asking the right questions. Therefore, I will ask a new question: what is a website, essentially and technically speaking?

A Website is a Directed Cyclic Graph and a Subgraph of World Wide Web (WWW)

The first intuition is to realize that a website is a Directed Cyclic Graph!

Let’s explain this further:

- Websites represent directed graphs because links have a specific direction from the source page to the destination page.

- Websites represent cyclic graphs since there are loops or cycles in the graph. For example, the second-level nodes (pages) from the picture above link to each other, and the leaves link back to the homepage.

- Website graphs are weighted graphs since links have different weights or costs associated with them. For example, external links can be seen as more valuable than internal links (this is just an assumption; in this article I’ll focus on what we can control, namely internal links).
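To make the graph view concrete, here is a minimal Python sketch, assuming a handful of made-up URLs and using only the standard library. It stores internal links as an adjacency list and checks for a cycle with a depth-first search. The `LINKS` data and the helper names `build_graph` and `has_cycle` are illustrative assumptions, not part of the WebKnoGraph framework.

```python
from collections import defaultdict

# Hypothetical internal links as (source, target) pairs; the URLs are made up.
LINKS = [
    ("/", "/blog/"),
    ("/", "/pricing/"),
    ("/blog/", "/blog/topic-clusters/"),
    ("/blog/topic-clusters/", "/pricing/"),
    ("/blog/topic-clusters/", "/"),  # a leaf linking back to the homepage
    ("/pricing/", "/blog/"),
]

def build_graph(links):
    """Adjacency list: each page maps to the pages it links to."""
    graph = defaultdict(list)
    for source, target in links:
        graph[source].append(target)
        graph[target]  # touch the key so pages without outlinks stay in the graph
    return dict(graph)

def has_cycle(graph):
    """Depth-first search with three colours; reaching a grey node means a cycle."""
    WHITE, GREY, BLACK = 0, 1, 2
    colour = {node: WHITE for node in graph}

    def visit(node):
        colour[node] = GREY
        for neighbour in graph[node]:
            if colour[neighbour] == GREY:
                return True
            if colour[neighbour] == WHITE and visit(neighbour):
                return True
        colour[node] = BLACK
        return False

    return any(colour[node] == WHITE and visit(node) for node in graph)

site = build_graph(LINKS)
print(has_cycle(site))
```

On a real site, the link pairs would come from a crawl export, and an edge weight could be stored next to each target to reflect the weighted-graph view.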

Here’s a website graph visualization (sampled data) from WordLift’s website:

Each page is a node on the graph representation. There are multiple super-nodes that link to many important pages and are not properly organized. This is a good optimization opportunity. A super-node is either a topic cluster or a super-page that has links from important pages and links to multiple important pages, based on a weight formula.

Super-nodes are visualized in the following picture:

Content writing and content differentiation can be challenging too: if the writing is not distinct enough, many pages will be bucketed into the same topical cluster.

I am not the first one to speak about this. I expect Mike King from iPullRank to cover these topics in his upcoming book “The Science of SEO: Decoding Search Engine Algorithms”. Kevin Indig spoke about this to some extent in his TIPR model articles, and Omio engineering covered graph theory approaches for applied SEO on their engineering blog.

Applied SEO: Algorithm System Design for Optimal Internal Linking

Our initial challenge is to establish the importance of each node, which is a blend of the node’s inherent value and its connections (links) to other significant nodes within the graph. We must create a weighted formula that allows us to represent the business value of each node!

I hold Kevin Indig in high regard, and this piece wouldn’t have come together without the ideas he previously shared in his blog articles. I’m fulfilling my duty and possibly pursuing Kevin’s desire to expand further, analyze, and enhance his work. It’s a privilege to undertake this endeavor, so let’s dive in!

The True Internal PR (TIPR) model is an excellent initial framework. However, the challenge lies in the lack of a link to the business aspect, specifically conversions. Instead of focusing solely on developing the logic for backlinks, I propose integrating conversions into the formula. This approach provides a more comprehensive understanding, offering contextual relevance and helping prevent negative user experiences. Conversions, apart from being a sales/business metric, also function as a UX metric – a purchase often signifies satisfaction with the service. Admittedly, it’s not as straightforward as it sounds, but let’s summarize it that way for simplicity’s sake.

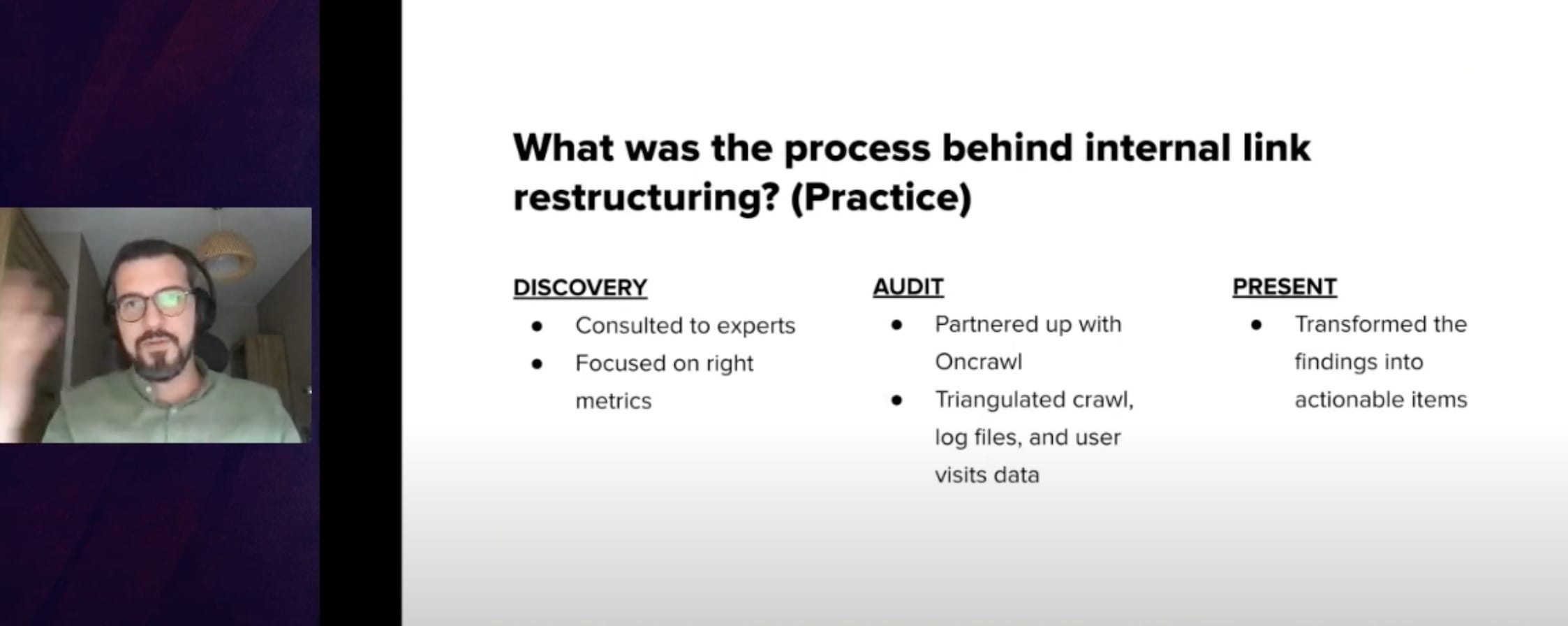

In addition to exploring the TIPR model, I came across the work of Murat Yatagan, who employed a comparable approach, albeit not structured as a definitive formula or model for direct emulation. Take a moment to examine the screenshot below:

Take note of the triangulated crawl, log files, and user visit data. It bears a resemblance to what Kevin intended to accomplish but presents some distinctions. However, is user visitation a reliable indicator for SEO success?

PageRank and CheiRank Explained

PageRank and CheiRank represent two distinct link analysis algorithms utilized for gauging a webpage’s significance. These algorithms find application in search engine operations to determine website rankings in search results. Additionally, website owners employ them to fine-tune their sites for improved search engine optimization (SEO).

PageRank

PageRank, conceived by Google’s founders Larry Page and Sergey Brin, is a link analysis algorithm rooted in the concept that a page holds importance if it garners links from other significant pages. Calculating a PageRank score involves evaluating both the quantity and quality of links directed towards a page. Links originating from high-quality pages carry more weight compared to those from lower-quality pages. The sum of all PageRank values is one.

CheiRank

CheiRank, created by Hyun-Jeong Kim and a team of collaborators, is a link analysis algorithm that determines the relative importance of URLs by assessing the weight of outgoing links in the link graph. In practice, it is PageRank computed on the same graph with the direction of every link reversed. A high CheiRank serves as a reliable indicator of an essential hub page. Notably, the sum of all CheiRank values equals one.
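As a rough illustration of the two scores, the sketch below runs a plain power iteration for PageRank and obtains CheiRank by running the same routine on the link-reversed graph. It is a simplified sketch under stated assumptions: the toy `site`, the damping factor of 0.85 and the fixed iteration count are illustrative choices, and every link target is assumed to also appear as a key in the graph.

```python
def pagerank(graph, damping=0.85, iterations=100):
    """Power-iteration PageRank on {page: [pages it links to]}."""
    nodes = list(graph)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by pages without outlinks is spread evenly over all pages.
        dangling = sum(rank[node] for node in nodes if not graph[node])
        new_rank = {
            node: (1.0 - damping) / n + damping * dangling / n for node in nodes
        }
        for node in nodes:
            for target in graph[node]:
                new_rank[target] += damping * rank[node] / len(graph[node])
        rank = new_rank
    return rank

def reverse(graph):
    """Flip every link so that outgoing links become incoming ones."""
    flipped = {node: [] for node in graph}
    for source, targets in graph.items():
        for target in targets:
            flipped[target].append(source)
    return flipped

def cheirank(graph, damping=0.85, iterations=100):
    """CheiRank: PageRank computed on the link-reversed graph."""
    return pagerank(reverse(graph), damping, iterations)

# Hypothetical toy site: a hub that links out to everything and a money page
# that everything links to.
site = {
    "/hub/": ["/a/", "/b/", "/money/"],
    "/a/": ["/money/"],
    "/b/": ["/money/"],
    "/money/": ["/hub/"],
}
pr = pagerank(site)
cr = cheirank(site)
print("Highest PageRank:", max(pr, key=pr.get))
print("Highest CheiRank:", max(cr, key=cr.get))
```

In this toy graph the page that collects the most links leads on PageRank, while the page that links out the most leads on CheiRank, and both score sets sum to one.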

Modification of the TIPR Model for SEO

I’d modify the approach for PageRank and CheiRank to create UniversalPageRank (identifying all incoming links regardless of their origin—whether from the same or different websites) and UniversalCheiRank (identifying all outgoing links regardless of their destination—whether to the same or different websites). I’ll also switch from user visits to conversions.

How to Define Conversion for Different Industries

The definition of conversion differs across industries, shaped by their unique business objectives. In e-commerce, it typically refers to finalized purchases or different stages within the sales process. In the realm of SaaS, it could involve actions such as registering for trials or subscribing to services. Content publishers often prioritize activities like gaining subscribers to their newsletters, while lead generation sectors assess metrics like form submissions or webinar registrations. For the travel sector, conversions are typically bookings, whereas nonprofits focus on donations or recruiting volunteers. Customizing conversion definitions to match industry aims requires a comprehensive grasp of the business and the tracking of key performance indicators (KPIs) to establish pertinent goals.

An interesting article that follows a directed graph and probabilistic model approach and utilization of Markov Chains applied to conversions was generously provided to me by Max Woelfle. However, I think it’s crucial to understand that there’s no universal solution for everyone. You’ll have to tailor your conversion metrics according to your specific industry and business model.

Visualizing the Modified TIPR Model for SEO

Explore the link model provided below:

The second intuition, after figuring out that this is a directed cyclic graph, is to develop a weighted function that will take the following parameters:

1. Universal PageRank

2. Universal CheiRank

3. Conversions

4. Crawl logs data

Please be aware that our current discussion only delves into the link graph aspect, and we haven’t addressed the semantic aspect yet.

Also, feel free to choose different metrics that are specifically relevant to your industry. For example, indexability and crawl efficacy are very important for news platforms, so they are metrics I would experiment with in my weighted formula.

It’s important to note that the metrics you choose should “meet certain characteristics. They must be measurable in the short term (experiment duration), computable, and sufficiently sensitive and timely to be useful for experimentation. Suppose you use multiple metrics to measure success for an experiment. Ideally, you may want to combine them into an Overall Evaluation Criterion (OEC), which is believed to impact long-term objectives causally. It often requires multiple iterations to adjust and refine the OEC, but as the quotation above, by Eliyahu Goldratt, highlights, it provides a clear alignment mechanism to the organization.”

I took this definition from Ron Kohavi’s book “Trustworthy Online Controlled Experiments: A Practical Guide to A/B Testing”. The section “Metrics for Experimentation and the Overall Evaluation Criterion” should be particularly interesting for SEOs because it demonstrates a scientific approach to choosing your metrics wisely: they should be short-term enough that you can test them and impactful enough that they drive long-term business growth. Once you develop your weighted formula, assign weights to each metric and tweak them accordingly based on testing and observations. Here’s an example weight function below:

It’s important to note that the total sum of the weights should be equal to one.

You need to apply this weight formula to each node to determine its value:
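One way to read the steps above is as a weighted sum over the four parameters, with weights that add up to one. The Python sketch below is only an illustration under stated assumptions: the weight values, the metric names, the sample numbers and the choice to rescale raw counts into shares are all hypothetical and are not the exact formula from the original figure.

```python
import math

# Hypothetical weights; the only constraint taken from the text is that they sum to one.
WEIGHTS = {"pagerank": 0.3, "cheirank": 0.2, "conversions": 0.4, "crawl_hits": 0.1}

def to_shares(values):
    """Rescale raw counts so they sum to one, as PageRank and CheiRank already do."""
    total = sum(values.values())
    if total == 0:
        return {page: 0.0 for page in values}
    return {page: value / total for page, value in values.items()}

def node_scores(metrics, weights=WEIGHTS):
    """metrics: {metric name: {page: raw value}} -> {page: weighted score}."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to one")
    shares = {name: to_shares(values) for name, values in metrics.items()}
    pages = next(iter(metrics.values())).keys()
    return {
        page: sum(weights[name] * shares[name][page] for name in weights)
        for page in pages
    }

# Made-up sample data for four pages.
metrics = {
    "pagerank": {"/hub/": 0.35, "/a/": 0.14, "/b/": 0.14, "/money/": 0.37},
    "cheirank": {"/hub/": 0.37, "/a/": 0.14, "/b/": 0.14, "/money/": 0.35},
    "conversions": {"/hub/": 5, "/a/": 0, "/b/": 10, "/money/": 85},
    "crawl_hits": {"/hub/": 400, "/a/": 50, "/b/": 50, "/money/": 500},
}
scores = node_scores(metrics)
for page, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

Because every metric is expressed as a share and the weights sum to one, the node scores also sum to one, which keeps them comparable between runs as you tune the weights.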

A Debate and An Open Question on Picking Independent Variables in the Weight Formula

In addition to the previously mentioned criteria, it’s advisable to opt for independent variables that aren’t interdependent or mutually influential to avoid bias in logical modeling and prevent arriving at inaccurate conclusions. When variables are correlated, they can significantly impact the outcome. For instance, consider the relationship between crawlability (or, as Kevin highlighted, server log files) and conversions. Given the lack of transparency in Google’s algorithms, some assumptions need to be made based on informed judgment:

- Google indexes a page because it perceives value in its content. Conversions could influence this perceived value since the page effectively fulfills users’ commercial intent.

- Simultaneously, we consider conversions to emphasize the page’s importance for the same commercial intent. However, the first signal (crawling and indexing) may itself be a consequence of the second (conversions), so counting both risks measuring the same effect twice and deviating from the intended objective.

Think about picking valuable, independent variables for a second and take this with you as a home assignment.
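A quick way to start on that home assignment is to check how strongly your candidate variables move together. The sketch below computes pairwise Pearson correlations in plain Python and flags pairs above a threshold. The sample columns and the 0.8 cut-off are arbitrary assumptions, and a high correlation only hints at overlap between variables; it does not prove that one causes the other.

```python
from itertools import combinations
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long lists."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no signal to correlate
    return cov / sqrt(var_x * var_y)

def correlated_pairs(columns, threshold=0.8):
    """Return (name_a, name_b, r) for every pair with |r| at or above the threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(columns.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            flagged.append((name_a, name_b, round(r, 2)))
    return flagged

# Made-up per-page values, one list entry per page.
columns = {
    "crawl_hits": [400, 50, 60, 500, 20],
    "conversions": [40, 4, 7, 55, 1],
    "cheirank": [0.2, 0.1, 0.3, 0.2, 0.2],
}
flagged = correlated_pairs(columns)
print(flagged)
```

In this made-up sample, crawl hits and conversions rise and fall together, which is the kind of overlap the debate above warns about.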

The third intuition is to identify super nodes in the graph.

I would not suggest any specific algorithm, but I find the following scientific paper particularly interesting: “SuperNoder: a tool to discover over-represented modular structures in networks.”

The fourth intuition is to use a SuperNoder-like solution to identify super-nodes and reorganize them: you can “break them” or link from them to reorganize the graph neighborhood.

The final intuition is to use Eigenvector Centrality, an algorithm that measures the transitive influence of nodes.

“Relationships originating from high-scoring nodes contribute more to the score of a node than connections from low-scoring nodes. A high eigenvector score means a node is connected to many high-score nodes”. Super-nodes are connected to many vital nodes based on the defined weight formula.
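The idea of transitive influence can be sketched with a short power iteration in plain Python. This is an unweighted simplification under stated assumptions: the toy `site` is hypothetical, the helper names are illustrative, and a fuller version would multiply each contribution by the node value from the weight formula.

```python
from math import sqrt

def eigenvector_centrality(graph, iterations=200, tolerance=1e-10):
    """Power iteration on {page: [pages it links to]}.

    A page's score is fed by the scores of the pages that link to it.
    """
    score = {node: 1.0 for node in graph}
    for _ in range(iterations):
        # Starting from the previous scores (an A + I shift) keeps the same
        # dominant eigenvector but avoids oscillation on graphs with regular cycles.
        new_score = dict(score)
        for source, targets in graph.items():
            for target in targets:
                new_score[target] += score[source]
        norm = sqrt(sum(value * value for value in new_score.values()))
        new_score = {node: value / norm for node, value in new_score.items()}
        change = sum(abs(new_score[node] - score[node]) for node in graph)
        score = new_score
        if change < tolerance:
            break
    return score

def super_nodes(scores, top_k=1):
    """Pages with the highest centrality; top_k is an arbitrary cut-off."""
    return sorted(scores, key=scores.get, reverse=True)[:top_k]

# Hypothetical toy site.
site = {
    "/hub/": ["/a/", "/b/", "/money/"],
    "/a/": ["/money/"],
    "/b/": ["/money/"],
    "/money/": ["/hub/"],
}
centrality = eigenvector_centrality(site)
print(super_nodes(centrality, top_k=2))
```

Here the super-node candidates are simply the top-scoring pages; in practice the cut-off would be tuned per site and reviewed by hand.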

There are different centrality measures available besides Eigenvector Centrality that might be more suitable for your needs. In my case, Eigenvector Centrality works best because it emphasizes the influence of the nodes or links. However, there are several other options to consider:

- Degree centrality: This depends on the number of connections coming in (In-Degree) and going out (Out-Degree) from a node. In-degree refers to the number of incoming connections, while out-degree refers to the number of outgoing connections to other nodes.

- Closeness centrality: This measures a node’s importance by how close it is to all other nodes in a graph.

- Betweenness centrality: This assesses a node’s significance based on how often it falls along the shortest paths between pairs of nodes in a graph. For instance, in Omio’s Graph Theory SEO case, this measure might be crucial.

- There are more complex centrality measures available, but I’ve provided an overview of the main options. For a deeper understanding, you can refer to the article “Graph Analytics — Introduction and Concepts of Centrality”.
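For comparison, the two simplest measures from the list above can be sketched in a few lines of plain Python. The toy `site` is hypothetical, and closeness is computed here in the outgoing direction only (how few clicks it takes to reach other pages from a given page), which is one of several possible conventions.

```python
from collections import deque

def degree_counts(graph):
    """Return (in_degree, out_degree) dictionaries for {page: [pages it links to]}."""
    in_degree = {node: 0 for node in graph}
    for targets in graph.values():
        for target in targets:
            in_degree[target] += 1
    out_degree = {node: len(targets) for node, targets in graph.items()}
    return in_degree, out_degree

def click_depths(graph, start):
    """Breadth-first search: fewest clicks needed to reach each page from start."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for target in graph[node]:
            if target not in depth:
                depth[target] = depth[node] + 1
                queue.append(target)
    return depth

def closeness(graph, node):
    """Number of reachable pages divided by the total clicks needed to reach them."""
    depth = click_depths(graph, node)
    total = sum(depth.values())
    return (len(depth) - 1) / total if total else 0.0

# Hypothetical toy site.
site = {
    "/hub/": ["/a/", "/b/", "/money/"],
    "/a/": ["/money/"],
    "/b/": ["/money/"],
    "/money/": ["/hub/"],
}
in_degree, out_degree = degree_counts(site)
print(in_degree, out_degree)
print({page: round(closeness(site, page), 2) for page in site})
```

The same breadth-first search also yields click depth from the homepage, which is a common input when auditing how deep important pages sit.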

Should I Perform Automatic, Semi-automatic or Manual Graph Link Restructuring?

After you’ve finished crunching the numbers and identified those super-nodes, I highly suggest employing hierarchical clustering or automatic semantic linking to restructure the graph. Wondering why?

While I understand that automating internal linking can pose challenges in specific situations, it’s an undeniable truth that well-crafted topical maps offer flexibility, all while efficiently managing automated data pipelines and DevOps workflows behind the scenes.

Hierarchical clustering provides us with the opportunity to hierarchically organize link nodes, based on their semantic closeness (reference: “Hierarchical Cluster Analysis” by UC Business Analytics R Programming Guide).
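To show what hierarchical organization by semantic closeness can look like, here is a small average-linkage agglomerative clustering sketch in plain Python. The three-dimensional vectors are made-up stand-ins for real page embeddings, and the `max_distance` cut-off of 0.3 is an arbitrary assumption; a production setup would more likely rely on an established clustering library.

```python
from math import sqrt

def cosine_distance(u, v):
    """1 minus cosine similarity; 0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm

def agglomerate(embeddings, max_distance=0.3):
    """Average-linkage agglomerative clustering that stops at max_distance."""
    clusters = [[page] for page in embeddings]

    def linkage(c1, c2):
        pairs = [(p, q) for p in c1 for q in c2]
        total = sum(cosine_distance(embeddings[p], embeddings[q]) for p, q in pairs)
        return total / len(pairs)

    while len(clusters) > 1:
        # Find the two closest clusters.
        distance, i, j = min(
            (
                (linkage(clusters[i], clusters[j]), i, j)
                for i in range(len(clusters))
                for j in range(i + 1, len(clusters))
            ),
            key=lambda item: item[0],
        )
        if distance > max_distance:
            break
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters

# Hypothetical 3-dimensional "embeddings"; real ones would come from an embedding model.
embeddings = {
    "/blog/internal-linking/": [0.9, 0.1, 0.0],
    "/blog/topic-clusters/": [0.8, 0.2, 0.1],
    "/blog/schema-markup/": [0.1, 0.9, 0.1],
    "/blog/knowledge-graph/": [0.2, 0.8, 0.2],
}
clusters = agglomerate(embeddings)
print(clusters)
```

With these toy vectors the two linking articles end up in one cluster and the two structured-data articles in another, which mirrors how topically close pages would be grouped before restructuring links.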

However, utilizing custom embeddings can be even more effective in capturing the unique specifics of the content. Is relying solely on a link-graph-engineered approach truly the way to go, or should we consider alternatives to avoid a one-size-fits-all solution?

Here, I won’t delve into the intricate details of explaining semantic similarity, vector logic, and sentence transformers. You have access to my academic research conducted a few years ago, titled “Content Engineering for State-of-the-art SEO Digital Strategies by Using NLP and ML,” which covers these concepts comprehensively.

It’s worth noting the following: having worked extensively as a technical writer and SEO analyst for several years, I’ve recognized a critical content creation and differentiation issue. As content creators, we sometimes need to allocate more time to craft unique content that stands apart from our previous works. As a result, many seemingly unrelated articles can end up in the same topic cluster. This can make hierarchical clustering a questionable solution. However, I still find semantic similarity to be a definitive solution to this problem. If you’re only interested in semantic contextuality and looking for a quick, practical solution, try Lee Foot’s BERTlinker tool. It’s a great tool I’ve used on several occasions (thank you, Lee, for everything you do for our SEO community). It was featured as an App of the Month by Streamlit. What an achievement!

How Should I Optimize my Link Graph After Identifying Super-nodes for SEO?

To avoid risking quick-link-graph-change penalties, you can choose an intelligent approach to connect the pages to one another. This approach allows SEOs to focus on their work and be mindful about strategy, instead of manually choosing which sections of pages to link to. In theory, we could take a semi-automated route by inserting automated HTML sitemaps as suggested links in the HTML sitemap section and then giving them a manual once-over. However, the downside of this approach is that it pulls your attention away from the crucial aspects. You end up knee-deep in technical tasks and manual labor, which, let’s face it, can eat up all your time.

Finally, based on my hands-on experience, I’ve found that a completely automated, intelligent solution that empowers SEO professionals to fine-tune the overall parameters and devise strategies is my preferred choice.

The Interplay Between a Link Graph and a Knowledge Graph Optimization

On one side, we’ve got the link graph, and on the flip side, there’s the knowledge graph. What defines our work is the interaction between these two elements. Once we’ve assessed the parameters and assigned weights, we turn to semantic recommendations to ensure coherence in our content. Without an ontology (or at least a robust taxonomy), there’s a risk of creating isolated sections and missing out on a more naturally engaging experience.

Let’s say you lack a structured framework – you might unintentionally segregate content. We can, for instance, connect different clusters of pages with links simply because they cater to the same audience, regardless of where they stand in the link graph. This highlights why semantics play a crucial role.
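The interplay described here can be sketched as a simple rule: look for page pairs that are semantically close but not yet connected in the link graph. The Python sketch below assumes hypothetical URLs, made-up three-dimensional vectors in place of real embeddings, and an arbitrary similarity threshold of 0.8. It is an illustration of the idea, not WordLift’s implementation.

```python
from math import sqrt

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1 means identical direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))

def suggest_links(graph, embeddings, min_similarity=0.8):
    """Semantically close (source, target) pairs that the link graph lacks."""
    suggestions = []
    for source in graph:
        for target in graph:
            if source == target or target in graph[source]:
                continue
            similarity = cosine_similarity(embeddings[source], embeddings[target])
            if similarity >= min_similarity:
                suggestions.append((source, target, round(similarity, 3)))
    return sorted(suggestions, key=lambda item: item[2], reverse=True)

# Hypothetical link graph: each product page only links to its own category.
graph = {
    "/shirts/linen/": ["/shirts/"],
    "/trousers/linen/": ["/trousers/"],
    "/shirts/": [],
    "/trousers/": [],
}
# Made-up vectors standing in for real page embeddings.
embeddings = {
    "/shirts/linen/": [0.9, 0.4, 0.1],
    "/trousers/linen/": [0.8, 0.5, 0.2],
    "/shirts/": [0.3, 0.9, 0.1],
    "/trousers/": [0.1, 0.3, 0.9],
}
for source, target, similarity in suggest_links(graph, embeddings):
    print(f"{source} -> {target} ({similarity})")
```

In this toy data the two linen pages sit in different clusters of the link graph yet are close in meaning, so they surface as candidates for a cross-cluster link in both directions.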

While many in large publications or e-commerce focus solely on the link graph, our attention is on both links and semantics. Semantic understanding allows us, especially in industries like fashion, to view content in terms of outfits, following a sequence (often from top to bottom and then accessorizing). This philosophy lies at the heart of our approach: the specifics are site-dependent and related to the overall taxonomy, but the essence remains consistent.

What Challenges Are Presented by This Approach and Algorithmic Framework?

That’s a fantastic question. Firstly, I want to express my gratitude to you for reading the article and to everyone who took part in our collaborative brainstorming session on Twitter and contributed to the discussion either in the thread or via DMs.

I’d like to extend my gratitude to Petar Tonkovic, ex-CERN, and Ariola Lami from the Technical University of Munich for their invaluable reviews of the initial drafts.

There are instances where you might encounter a high volume of bad-quality pages containing numerous links. This situation can result in an excessive consumption of crawl budget and a mismatch with user intentions, ultimately failing to drive conversions. Consequently, these pages may inaccurately inflate their importance in Google’s assessment.

It’s essential to address these issues promptly. Using a quality crawler, like Botify, can be immensely helpful in segmenting data at a folder or cluster level, allowing you to identify areas where you can optimize your SEO efforts. I highly recommend checking out Oncrawl’s excellent case study on this topic for further insights.

How Does WordLift Solve Link Graph Restructuring and Internal Linking Architectural Problems?

We deploy human-focused yet business-backed, personalized approaches for our clients, which helped us develop our innovative dynamic internal links solution (hence the name WebKnoGraph framework). In most cases, we’re helping serious SEO e-commerce players and SEO-ready companies who are ready to embrace the power of ethical generative AI with a human-in-the-loop (HITL) approach.

Reviewers

We’d like to extend our gratitude to the editor and reviewers for their insightful comments. Their feedback has been instrumental in enhancing the quality of our research significantly. Cheers to a more knowledgeable SEO community!

- Technical reviewers:

- Petar Tonkovic: Data Engineering, Graphs and Research

- Ariola Lami: Full-Stack Software Engineering, A/B testing and Digital Analytics

- Andrea Volpini: CEO of WordLift

- SEO & Content reviewers:

- Max Woelfle: SEO, Digital Marketing and Content

- Stefan Stanković: SEO, Web Analytics and Content

- Sara Moccand-Sayegh: SEO, Community Building and Content