The Google Voice Search Button Is Dead, Long Live the Google Assistant!

The change has been subtle and was first reported by Android Police a few hours ago. Android devices used to have a microphone button on the home screen widget, along with the same button in the Google app itself, to trigger a voice search and a list of supported commands (e.g. making phone calls, playing music and texting friends). This button is now being replaced by the Google Assistant and by the Actions supported by the Google Assistant app.

Why is the switch from Voice Search to the Google Assistant so important?

The introduction of the Google Assistant shows how Google is moving toward an “AI-first” user experience and getting closer to realizing the potential of its large semantic network, which keeps changing the way we search and consume information. We saw only the surface of these changes when, for instance, the Zero-Result SERP was first introduced.

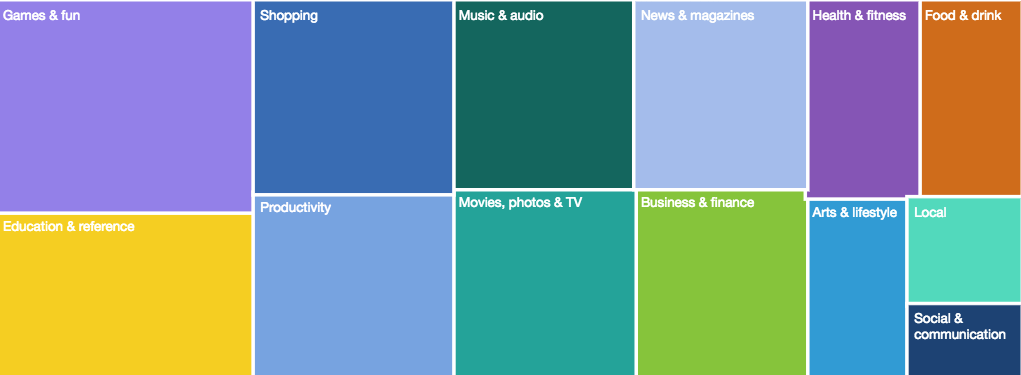

The red microphone button that previously triggered a simple voice search can now, with the Google Assistant, help us accomplish many more tasks, including actions implemented in third-party applications that offer services across a variety of sectors. We recently analyzed the new directory that Google created to help users discover third-party Assistant actions, and here is a breakdown of the top categories by the number of intents.

How did this happen?

On the latest Android devices running the Google Assistant, Voice Search was causing an inconsistent user experience: it was hard to grasp why one voice search popped up from the bottom of the screen while another came from the top.

How can I optimize content for the Google Assistant?

Online publishers can make their content ready for the Google Assistant by adding support for AMP and the appropriate structured data markup. The first types of structured content that the Google Assistant is able to pick up (for US publishers only) include Podcast, News, and Recipes. Moreover, publishers can create their own Google Action (read more about it in this article on how to create your own Action or contact us to learn more).
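To make the structured data part more concrete, here is a minimal sketch of recipe markup generated with Python. It assumes the schema.org `Recipe` type serialized as JSON-LD. The helper names (`recipe_jsonld`, `to_script_tag`) and the sample recipe are hypothetical, and the exact set of properties the Google Assistant requires is not covered here, so treat this as an illustration rather than a complete implementation.

```python
import json


def recipe_jsonld(name, author, ingredients, steps):
    """Build a schema.org Recipe as a JSON-LD dictionary (illustrative helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "author": {"@type": "Person", "name": author},
        "recipeIngredient": list(ingredients),
        # Each step becomes a HowToStep so it can be read out one at a time.
        "recipeInstructions": [
            {"@type": "HowToStep", "text": step} for step in steps
        ],
    }


def to_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes into the page HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Sample values are made up for the example.
markup = recipe_jsonld(
    name="Simple Tomato Pasta",
    author="Jane Doe",
    ingredients=["200 g spaghetti", "400 g canned tomatoes", "1 clove garlic"],
    steps=["Boil the spaghetti.", "Simmer the tomatoes with garlic.", "Combine and serve."],
)
print(to_script_tag(markup))
```

The printed `<script>` block would be placed in the page (including its AMP version). It is worth validating the output with Google's structured data testing tools before publishing.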

It’s important to move forward in this direction as we are starting to see divergent results from traditional Search and the Google Assistant.

Let me show you an example. The query “What is Semantic SEO?”, when triggered from Chrome (Voice Search), returns a featured snippet generated from the website online-sales-marketing.com. When the same question is asked to the Google Assistant (or to a Google Home or Google Mini device), the response is taken from the wordlift.io website.

There is more: if you create your own Google Action, the Google Assistant might suggest your Action to the end user to answer a specific question (you can find out more on WooRank’s website about optimizing your Google Action to increase its reach).

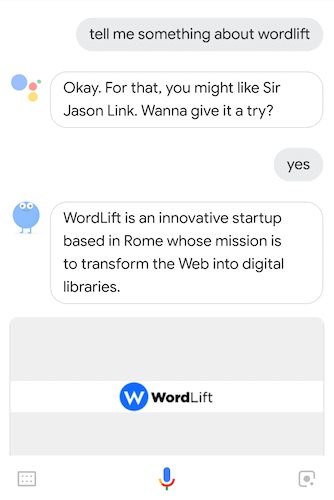

See an example below where our Google Action is invoked to provide information about WordLift.

Ready to optimize your content for the Google Assistant? Book a demo!