AI Visibility Isn’t Enough: Why Execution Infrastructure Wins

Discover why dashboards don’t move the needle in AI search and how semantic infrastructure and knowledge graphs power scalable, AI-ready growth.

Brands are asking the wrong question.

Most executives still wonder: “How do we appear in ChatGPT?” — as if AI visibility were simply a measurement challenge.

But visibility is not the problem.

The real question is: How do we build the infrastructure AI systems can reason over?

This article builds on The Alpha Is Not LLM Monitoring, an analysis conducted by Kevin Indig, WordLift, Primo Capital, and G2, and expands its findings into a strategic perspective for business leaders.

The Dashboard Trap

When generative AI exploded into mainstream search, a predictable pattern emerged: dozens of startups launched to track how brands appear in AI-generated answers. The pitch was compelling—CMOs finally got visibility into this new channel.

But visibility into what, exactly?

Monitoring dashboards surface information. They tell you that ChatGPT mentioned your competitor in a product recommendation, or that Perplexity cited your blog post in an answer. This is useful context, but it is not operational.

The uncomfortable reality is that tracking tools have near-zero switching costs. If you cancel your AI visibility dashboard tomorrow, you lose historical charts. Your content operations continue unchanged.

Now consider what happens when semantic infrastructure disappears from your stack. The structured data powering your rich snippets goes dark. The entity relationships connecting your content to Google’s Knowledge Graph break. The machine-readable context that helps AI systems understand who you are, what you sell, and why you matter—gone.

One is a report. The other is infrastructure.

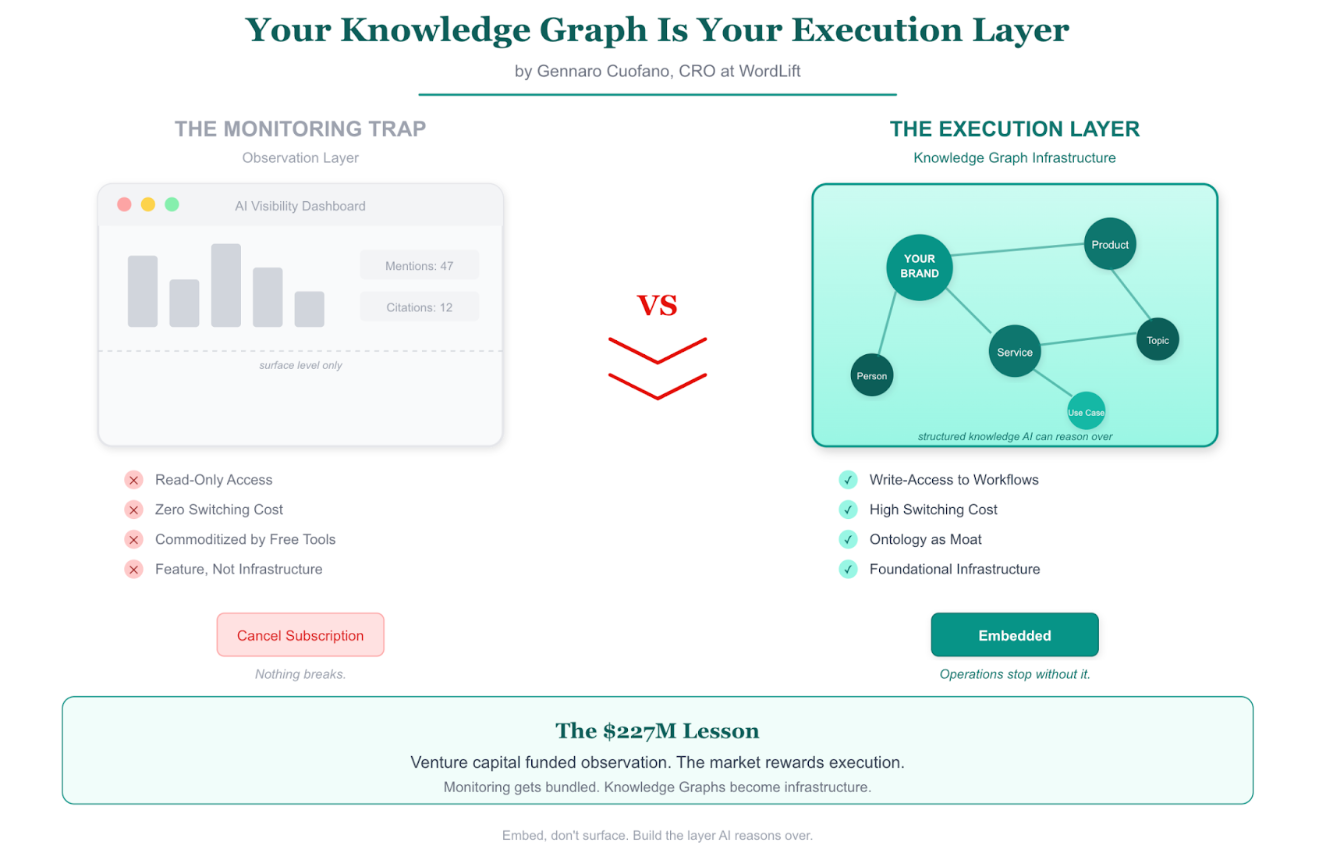

This visual highlights the core difference: AI monitoring tools surface observations, but knowledge graph infrastructure shapes outcomes. One is easily replaced; the other becomes operationally indispensable.

Why Ontology Became the Moat

The Semantic Web vision that Tim Berners-Lee articulated twenty-five years ago is finally arriving—just not in the form most people expected.

The original promise was that machines would understand web content the way humans do. Structured data would make meaning explicit. Ontologies would enable automated reasoning across distributed systems.

What actually happened: large language models learned to reason over unstructured text with remarkable fluency. They don’t need perfectly structured data to understand your website.

But here’s what they do need: context.

When an AI agent tries to answer a complex query, such as “What’s the best enterprise CRM for a manufacturing company with distributed sales teams?”, it’s not just pattern-matching against training data. It’s synthesizing information from multiple sources, weighing relevance, and constructing an answer that serves the user’s actual intent.

The organizations that win this game aren’t the ones tracking where they appear in AI answers. They’re the ones providing the structured context that shapes those answers in the first place.

This is why knowledge graphs matter more than monitoring dashboards. Your ontology—the explicit representation of your entities, their properties, and their relationships—becomes the substrate AI systems reason over.

Generic optimization patterns can’t replicate this. A monitoring tool can tell you that you’re underrepresented in AI responses about your product category. It cannot tell the AI system what your products actually do, how they differ from competitors, or why they matter for specific use cases.

That requires structured knowledge. It requires entities with properties. It requires relationships that encode meaning.
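To make "entities with properties" and "relationships that encode meaning" concrete, here is a minimal sketch in Python. It is not how any particular platform stores its graph. The product names, predicates, and helper functions (`build_index`, `find_entities`) are all hypothetical and chosen only to echo the CRM query above.

```python
from collections import defaultdict

# Hypothetical (subject, predicate, object) triples; every name is illustrative.
TRIPLES = [
    ("AcmeCRM", "type", "SoftwareApplication"),
    ("AcmeCRM", "madeBy", "Acme Inc."),
    ("AcmeCRM", "servesIndustry", "Manufacturing"),
    ("AcmeCRM", "supports", "Distributed sales teams"),
    ("OtherCRM", "type", "SoftwareApplication"),
    ("OtherCRM", "servesIndustry", "Retail"),
]


def build_index(triples):
    """Group triples by subject so each entity's properties can be looked up."""
    index = defaultdict(lambda: defaultdict(set))
    for subject, predicate, obj in triples:
        index[subject][predicate].add(obj)
    return index


def find_entities(index, **required):
    """Return entities whose properties include every required predicate/value pair."""
    return sorted(
        entity
        for entity, props in index.items()
        if all(value in props.get(pred, set()) for pred, value in required.items())
    )


index = build_index(TRIPLES)
matches = find_entities(index, type="SoftwareApplication", servesIndustry="Manufacturing")
print(matches)
```

The point of the sketch is that the question "which CRM serves manufacturing?" is answered from explicit relationships rather than inferred from page copy. A production knowledge graph would use a shared vocabulary such as schema.org and a real graph store, but the shape of the data is the same.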

From Read-Only to Write-Access

The established SEO platforms—Semrush, Ahrefs, Moz—built their businesses on indexing the web. They crawl, they analyze, they report. This is read-only access at scale.

Agentic SEO requires something different: write-access to operational workflows.

The shift looks like this:

Read-only (monitoring): Track keyword rankings across AI platforms. Report on brand mentions in LLM responses. Benchmark visibility against competitors.

Write-access (execution): Generate structured data that shapes AI responses. Automate content distribution across channels where AI systems source information. Maintain knowledge graph infrastructure that encodes your brand’s meaning in machine-readable format.

The read-only layer is commoditizing rapidly. When Amplitude offers AI visibility tracking for free, when Semrush adds it as a checkbox feature, the standalone category starts collapsing.

The write-access layer is where defensibility lives. Operational workflows that embed in your content infrastructure don’t get turned off casually. Semantic infrastructure that powers your entire digital presence becomes foundational.

Yet the companies building execution capability raised less venture capital than the monitoring startups, a capital allocation that may prove to be exactly backwards.

What Execution Actually Means

Execution in Agentic SEO isn’t just “shipping content faster.” It’s building the infrastructure that makes your content machine-readable, contextually grounded, and operationally scalable.

Structured knowledge as foundation. Your Knowledge Graph isn’t a nice-to-have for rich snippets. It’s the explicit representation of what your organization knows—products, services, people, locations, concepts—encoded in a format AI systems can query and reason over.

Entity-first content architecture. When you organize content around entities rather than keywords, you’re building something AI systems can navigate. The relationships between your product pages, your documentation, your case studies, your team bios—these become explorable knowledge, not isolated pages.

Automated workflow integration. The gap between “insight” and “action” is where most AI visibility efforts die. Knowing you’re underrepresented in AI responses doesn’t help if bridging that gap requires manual effort at scale. Execution platforms connect analysis to automated content operations.

Governance at scale. Generating content isn’t the hard part anymore. Maintaining consistency, accuracy, and brand voice across dozens of channels and thousands of pages—that’s the operational constraint. Systems that enforce governance while enabling scale are infrastructure, not features.
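As an illustration of the "structured knowledge as foundation" point above, the following sketch shows one way a product record could be turned into schema.org JSON-LD that links the product to its organization and to related products. The function name `product_jsonld`, the input field names, and the example.com identifiers are assumptions made for this example. Only the schema.org terms (`Organization`, `Product`, `brand`, `isRelatedTo`) come from the public vocabulary.

```python
import json


def product_jsonld(product: dict, organization: dict) -> dict:
    """Build a schema.org JSON-LD graph linking a product to its organization."""
    org_node = {
        "@type": "Organization",
        "@id": organization["id"],
        "name": organization["name"],
        "url": organization["url"],
    }
    product_node = {
        "@type": "Product",
        "@id": product["id"],
        "name": product["name"],
        "description": product["description"],
        # Reference the organization by @id instead of repeating its details.
        "brand": {"@id": organization["id"]},
        "isRelatedTo": [{"@id": rid} for rid in product.get("related_ids", [])],
    }
    return {"@context": "https://schema.org", "@graph": [org_node, product_node]}


# Hypothetical input records; a real pipeline would read these from a CMS or PIM.
org = {"id": "https://example.com/#org", "name": "Acme Inc.", "url": "https://example.com/"}
crm = {
    "id": "https://example.com/products/crm#product",
    "name": "Acme CRM",
    "description": "CRM for manufacturers with distributed sales teams.",
    "related_ids": ["https://example.com/products/cpq#product"],
}

doc = product_jsonld(crm, org)
print(json.dumps(doc, indent=2))
```

Generating the markup from a single entity record, rather than hand-editing it per page, is what lets the same facts stay consistent across thousands of pages, which is the governance concern described above.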

The Eighteen-Month Sorting

The AI visibility market is about to experience what happens when growth-stage capital meets feature-stage products.

Most monitoring startups raised their rounds in late 2024 and early 2025. Standard runway calculations put their next decision point in Q3 or Q4 of 2026. To justify the valuations they raised at, they’ll need to demonstrate 3-5x year-over-year revenue growth.

But the product category is commoditizing underneath them. When your core offering becomes a free feature in established platforms, the growth math stops working.

The companies that built execution infrastructure—semantic platforms, knowledge graph tools, automated content operations—raised less capital against more mature businesses. They’re not racing against runway depletion. They’re scaling against operational demand.

This is the pattern: monitoring gets bundled, execution becomes infrastructure.

Building for the Reasoning Web

The question isn’t whether AI search matters. It’s whether you’re building infrastructure for it or just observing it.

Observation scales poorly. Every incremental insight requires human interpretation and manual action. The organizations that win are the ones where AI visibility improvements compound automatically—because the infrastructure connecting insight to action is already in place.

Your Knowledge Graph is that infrastructure. It’s not a dashboard showing you where you appear in AI responses. It’s the structured representation of your organizational knowledge that AI systems draw on when constructing those responses.

The monitoring layer measures outcomes. The execution layer shapes them.

What to Evaluate in Your Current Stack

Does your semantic infrastructure encode your actual business knowledge? If your structured data is limited to basic schema markup—Organization, Product, Article—you’re providing minimal context. AI systems need rich entity relationships, detailed properties, and explicit connections to reason effectively.

Are your content operations connected to your knowledge graph? If creating new content is a manual process disconnected from your entity infrastructure, you’re not building compounding value. Execution platforms maintain bidirectional connections—content updates enrich the knowledge graph, and knowledge graph updates inform content.

Can you trace from AI visibility gaps to automated remediation? The gap between “we’re underrepresented in this topic area” and “we’ve published content addressing it” should be measurable in hours, not weeks. If that path requires manual intervention at every step, you have a monitoring tool, not an execution platform.

What happens if you turn it off? This is the real test. If removing a tool from your stack affects only your reporting, it’s a feature. If it breaks your content operations, it’s infrastructure.
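The first question in this checklist can be approximated with a rough audit. The sketch below assumes your structured data is available as a JSON-LD document with an `@graph` array. It lists the links between entities and flags nodes that are connected to nothing. The helper names (`entity_links`, `isolated_nodes`) and the sample document are hypothetical, and the check is a starting heuristic rather than a full measure of semantic richness.

```python
def entity_links(doc: dict) -> list[tuple[str, str, str]]:
    """List (source @id, property, target @id) for every reference between nodes."""
    nodes = doc.get("@graph", [])
    known_ids = {node["@id"] for node in nodes if "@id" in node}
    links = []
    for node in nodes:
        for prop, value in node.items():
            if prop.startswith("@"):
                continue  # skip JSON-LD keywords such as @id and @type
            values = value if isinstance(value, list) else [value]
            for item in values:
                if isinstance(item, dict) and item.get("@id") in known_ids:
                    links.append((node.get("@id", ""), prop, item["@id"]))
    return links


def isolated_nodes(doc: dict) -> list[str]:
    """Return @ids of nodes that neither link to nor are linked from another node."""
    links = entity_links(doc)
    connected = {src for src, _, _ in links} | {dst for _, _, dst in links}
    return sorted(
        node["@id"]
        for node in doc.get("@graph", [])
        if "@id" in node and node["@id"] not in connected
    )


# Hypothetical sample: the article is "basic markup" with no entity relationships.
sample = {
    "@context": "https://schema.org",
    "@graph": [
        {"@type": "Organization", "@id": "https://example.com/#org", "name": "Acme Inc."},
        {
            "@type": "Product",
            "@id": "https://example.com/#crm",
            "name": "Acme CRM",
            "brand": {"@id": "https://example.com/#org"},
        },
        {"@type": "Article", "@id": "https://example.com/blog/post-1", "headline": "Launch notes"},
    ],
}

print(entity_links(sample))
print(isolated_nodes(sample))
```

A long list of isolated nodes suggests markup that names things without relating them, which is the "minimal context" case described in the first question.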

The AI visibility vendors raised $227 million betting that observation is valuable. The market is now discovering that execution is what compounds.

Build the layer that embeds, not the layer that surfaces.