What does Google’s AI Mode really want from your product page — and what exactly is Chunk Optimization?

How to Optimize Your Product Pages for Google AI Mode.

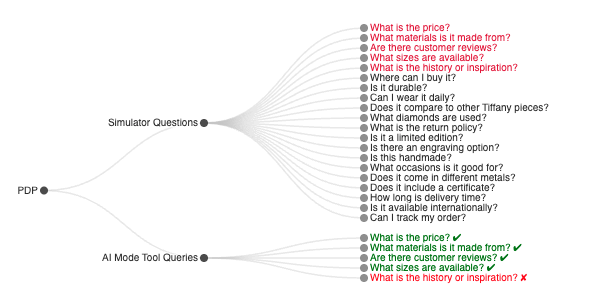

To help brands prepare for AI-driven search, I built a simulator that models how AI systems like Google AI Mode, AI Overviews, Perplexity, and ChatGPT retrieve and rank content.

AI Mode is Google’s most significant shift in the commercial search experience to date.

What is Google’s AI Mode?

Currently available in the U.S., it transforms Google Search into a chat-like experience powered by a generative AI agent. At its core, it combines Google’s Gemini model with real-time search capabilities to produce conversational answers, similar to what users see in Perplexity or ChatGPT Search. It’s a multi-turn, interactive experience where users can refine their queries and receive synthesized, context-aware responses directly within Search.

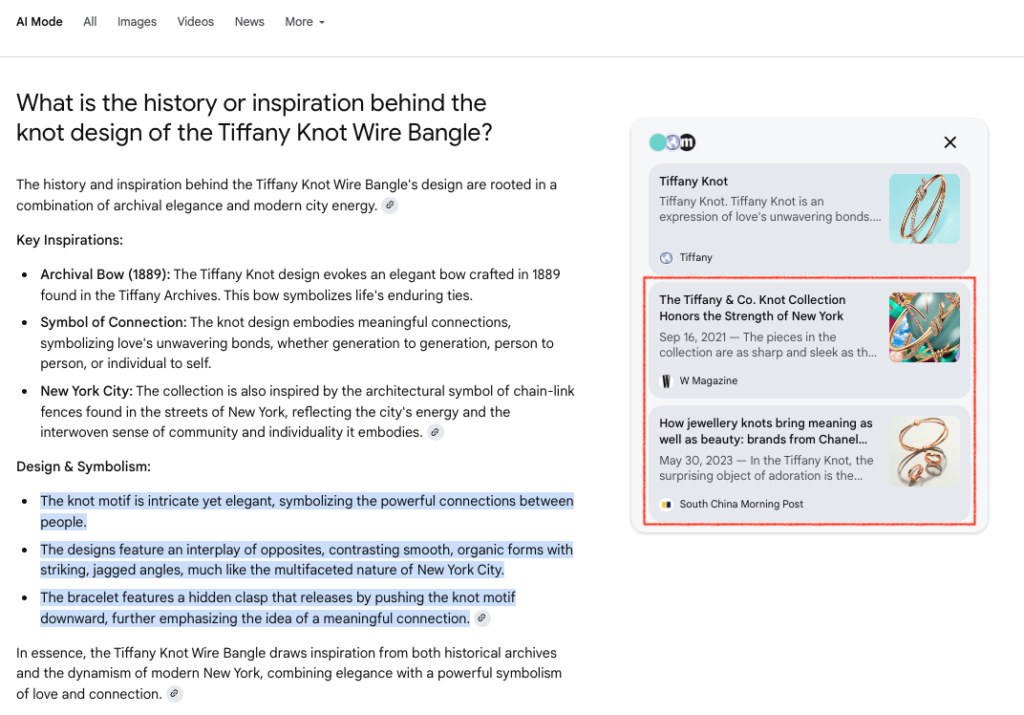

To demonstrate this, I recently ran an experiment on the product description page for the iconic Tiffany’s Knot Wire Bangle.

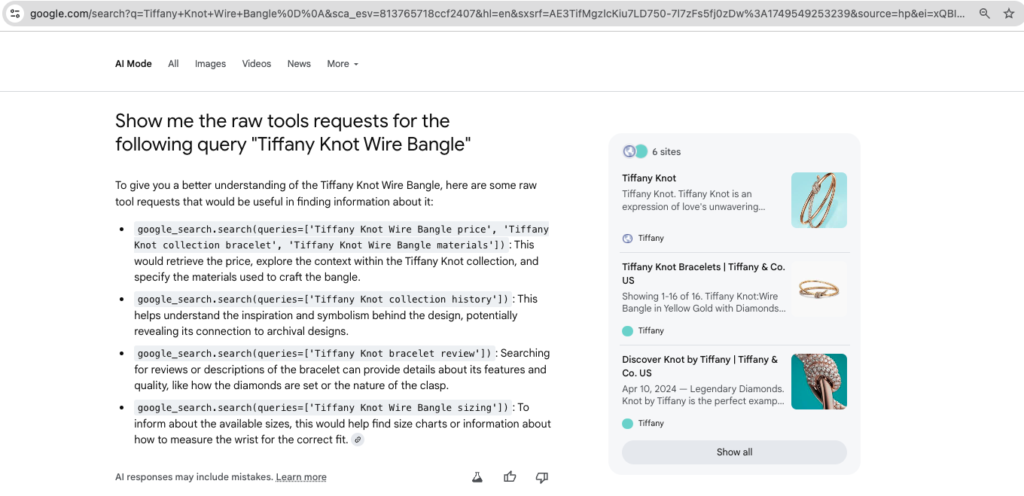

Using a meta-prompt originally developed by Jean-Christophe Chouinard, I asked Google’s AI Mode:

“Show me the raw tools requests for the following query [product-name]”

The response included 5 key areas that are supposedly used to ground the response when a user asks for information about the product:

- What is the price?

- What materials is it made from?

- Are there customer reviews?

- What sizes are available?

- What is the history or inspiration behind the knot design (Collection history)?

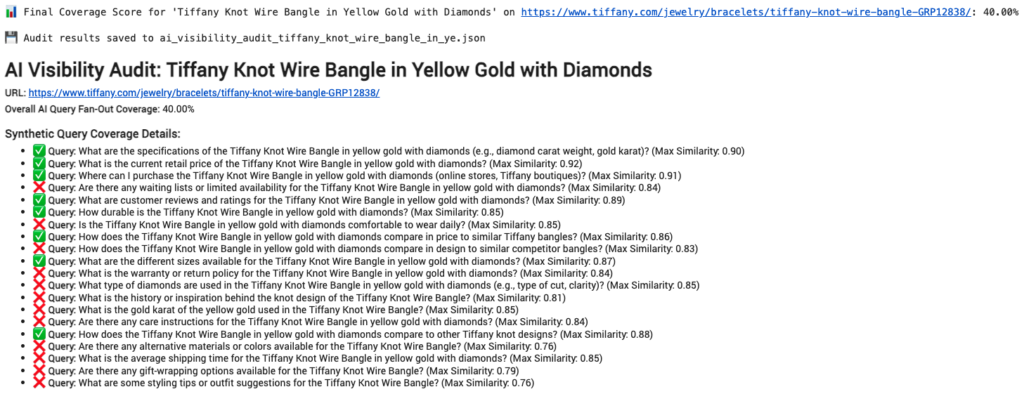

Our simulator — which casts a broader net with 20 synthetic questions — accurately predicted all 5 key areas surfaced during the audit. We intentionally look at a broader set of sub-queries because these are inherently stochastic and influenced by personal user context (as highlighted by Elizabeth Reid, VP and Head of Search, in Google’s blog).

AI Mode is evolving to incorporate even more contextual signals. Soon, it will offer suggestions informed by your search history, and users will be able to opt in to connect other Google services—starting with Gmail—to further personalize the experience.

We then compared the page content against those 5 queries using a high semantic similarity threshold of 0.80 to simulate a competitive AI retrieval environment.
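To make the comparison concrete, here is a minimal Python sketch of a query-to-chunk audit. It is not the simulator itself: `embed` is a toy bag-of-words stand-in for a real embedding model, and `audit` and its return shape are names invented for illustration. Scores from this toy vectorizer are not comparable to those of a dense embedding model; only the 0.80 threshold comes from the experiment described here.

```python
import math
import re
from collections import Counter

THRESHOLD = 0.80  # similarity cutoff used in the article


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def audit(queries, chunks, threshold=THRESHOLD):
    """Map each query to (best_chunk_index, best_score, matched)."""
    chunk_vecs = [embed(c) for c in chunks]
    report = {}
    for q in queries:
        qv = embed(q)
        scores = [cosine(qv, cv) for cv in chunk_vecs]
        best_idx, best_score = max(
            enumerate(scores), key=lambda pair: pair[1], default=(None, 0.0)
        )
        report[q] = (best_idx, best_score, best_score >= threshold)
    return report


if __name__ == "__main__":
    # Hypothetical page chunks and sub-queries, for illustration only.
    chunks = [
        "the bangle is made of 18k gold",
        "available sizes are small medium and large",
    ]
    queries = ["made of 18k gold bangle", "history of the knot design"]
    for query, (idx, score, matched) in audit(queries, chunks).items():
        print(f"{query!r}: chunk={idx} score={score:.2f} matched={matched}")
```

A query whose best score falls below the threshold marks a gap: the page may mention the topic, but no single chunk is close enough to be retrieved for it.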

The outcome:

- 4 out of 5 tool intents were well matched.

- The “history/inspiration/collection” query failed to retrieve a suitable chunk from the page.

And this is the revealing part: when I dug deeper for that information (an entity facet), Google’s AI Mode filled the gap by citing a competing site.

This indicates that the gap was structural: the problem was not missing content, but the lack of a retrievable, semantically aligned chunk.

What is a text chunk?

A text chunk is a contiguous segment of text, typically ranging from a few sentences to a few paragraphs, that is semantically self-contained and topically coherent. In the context of information retrieval and large language models, a chunk is the atomic unit used for indexing, embedding, and retrieval.

My understanding is that Google’s AI Mode uses a hybrid chunking strategy, applying different methods at different processing stages. Based on the configuration options available in Vertex AI Search, layout-aware chunking likely plays a central role, particularly during the ingestion phase. This approach segments content based on HTML structure, such as headings, paragraphs, lists, and tables. You can read more about it on LinkedIn.
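As a rough illustration of what layout-aware chunking means, the sketch below groups block-level text under the nearest preceding heading using Python's standard `html.parser`. This is an assumption about the general idea, not Google's or Vertex AI Search's actual implementation; the `LayoutChunker` class, the `chunk_html` helper, and the choice of which tags count as blocks are all hypothetical.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
BLOCKS = {"p", "li", "td", "th"}


class LayoutChunker(HTMLParser):
    """Groups block-level text under the nearest preceding heading."""

    def __init__(self):
        super().__init__()
        self.chunks = []   # each item: {"heading": str, "blocks": [str]}
        self._tag = None   # heading/block tag currently being collected
        self._buf = []

    def handle_starttag(self, tag, attrs):
        # Start collecting text whenever a heading or block element opens.
        if tag in HEADINGS or tag in BLOCKS:
            self._tag = tag
            self._buf = []

    def handle_data(self, data):
        if self._tag:
            self._buf.append(data)

    def handle_endtag(self, tag):
        # Inline tags such as <strong> close without ending the block.
        if tag != self._tag:
            return
        text = " ".join("".join(self._buf).split())
        self._tag = None
        if not text:
            return
        if tag in HEADINGS:
            self.chunks.append({"heading": text, "blocks": []})
        else:
            if not self.chunks:  # text that appears before any heading
                self.chunks.append({"heading": "", "blocks": []})
            self.chunks[-1]["blocks"].append(text)


def chunk_html(html: str):
    """Return one chunk per heading that has at least one text block."""
    parser = LayoutChunker()
    parser.feed(html)
    parser.close()
    return [c for c in parser.chunks if c["blocks"]]


if __name__ == "__main__":
    page = (
        "<h2>Materials</h2><p>Made of <strong>18k gold</strong>.</p>"
        "<h2>Sizes</h2><ul><li>Small</li><li>Medium</li></ul>"
    )
    for chunk in chunk_html(page):
        print(chunk["heading"], "->", chunk["blocks"])
```

The practical takeaway is that headings act as chunk boundaries: a fact that sits under the wrong heading, or under no heading at all, ends up in a chunk that is about something else.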

This is where Chunk Optimization becomes critical:

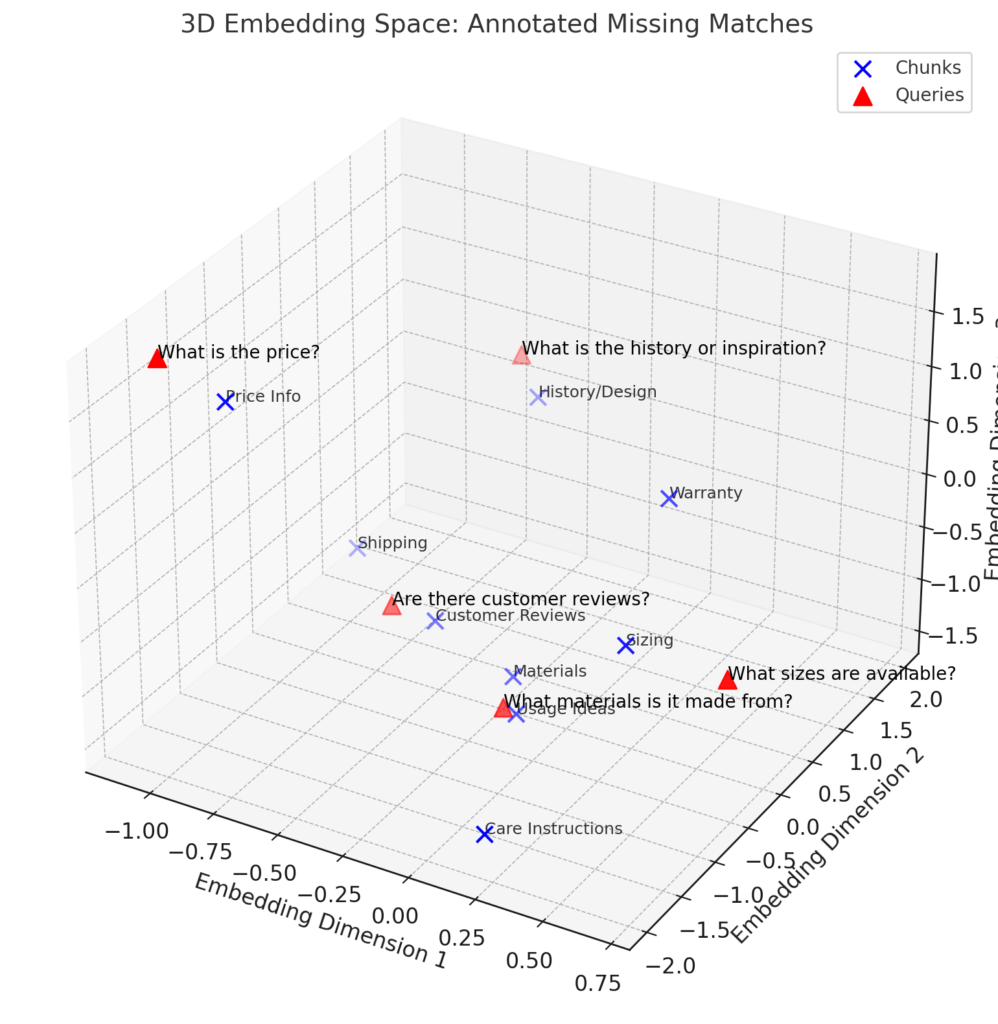

- Content must be entity-rich, self-contained, and semantically aligned with predictable queries.

- AI systems retrieve chunks, not full pages. This means your beautifully designed product page may still be invisible to AI systems if the individual sections—or chunks—aren’t optimized and self-contained.

- If the chunk isn’t optimized, your site won’t be the source — even when it should be.

At WordLift, we store multi-chunk representations for each entity — whether it’s a product or an article — inside the Knowledge Graph. This allows us to calculate similarity with incoming queries on the fly, identify weak points, and ensure content is optimized for AI-driven retrieval.

Here is a simplified visualization of sub-queries and chunks in a 3D semantic space, showing where chunks and queries align — and where they don’t.

📄 Essential Guidelines for Content Writers

Break your content into clear, skimmable chunks—ideally 150–300 words (or 200–400 tokens). Use descriptive headings, short paragraphs, and semantic elements like bullet points and tables to enhance readability. Each chunk should focus on a single entity (e.g., Tiffany Knot Bangle) or a well-defined relationship involving that entity (e.g., Tiffany Knot Bangle is made of 18k gold)—fact-based, self-contained, and semantically aligned with user intent.
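These guidelines can be turned into a quick self-check. The sketch below is a simple heuristic, not a scoring model: it counts words against the 150 to 300 range suggested here and checks whether the chunk names its entity explicitly instead of relying on a pronoun. The `check_chunk` function and its output keys are hypothetical.

```python
import re

MIN_WORDS, MAX_WORDS = 150, 300  # range suggested in the guidelines


def check_chunk(text: str, entity: str) -> dict:
    """Flag chunks that are too short or too long, or that never name the entity."""
    word_count = len(re.findall(r"\S+", text))
    return {
        "word_count": word_count,
        "length_ok": MIN_WORDS <= word_count <= MAX_WORDS,
        # A self-contained chunk should mention the entity by name.
        "names_entity": entity.lower() in text.lower(),
    }


if __name__ == "__main__":
    # A chunk that relies on "It" gives a retriever nothing to anchor on.
    print(check_chunk("It is made of gold.", "Tiffany Knot Bangle"))
```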

Google AI Mode and similar systems don’t retrieve entire pages—they retrieve meaningful fragments. If your content isn’t chunked and semantically structured, it simply won’t surface.

This is how we prepare content for AI. And this is how you stay visible when search becomes agentic.

👉 Curious to see how your product pages perform in AI Mode?

Request a free AI Mode Chunk Audit or book a demo with our team to get started.