OpenAI’s Emerging Semantic Layer

The Agentic Web is here. Learn how brands can win in the ChatGPT ecosystem through conversational prompts, embedded apps, and agentic commerce. It's time to build for the semantic layer.

Latest update: by analyzing ChatGPT’s SSE (server-sent events) streams, we uncover how OpenAI’s web client structures entities, moderates outputs, and connects to a product graph that mirrors Google Shopping feeds. Read more about the ChatGPT entity layer.

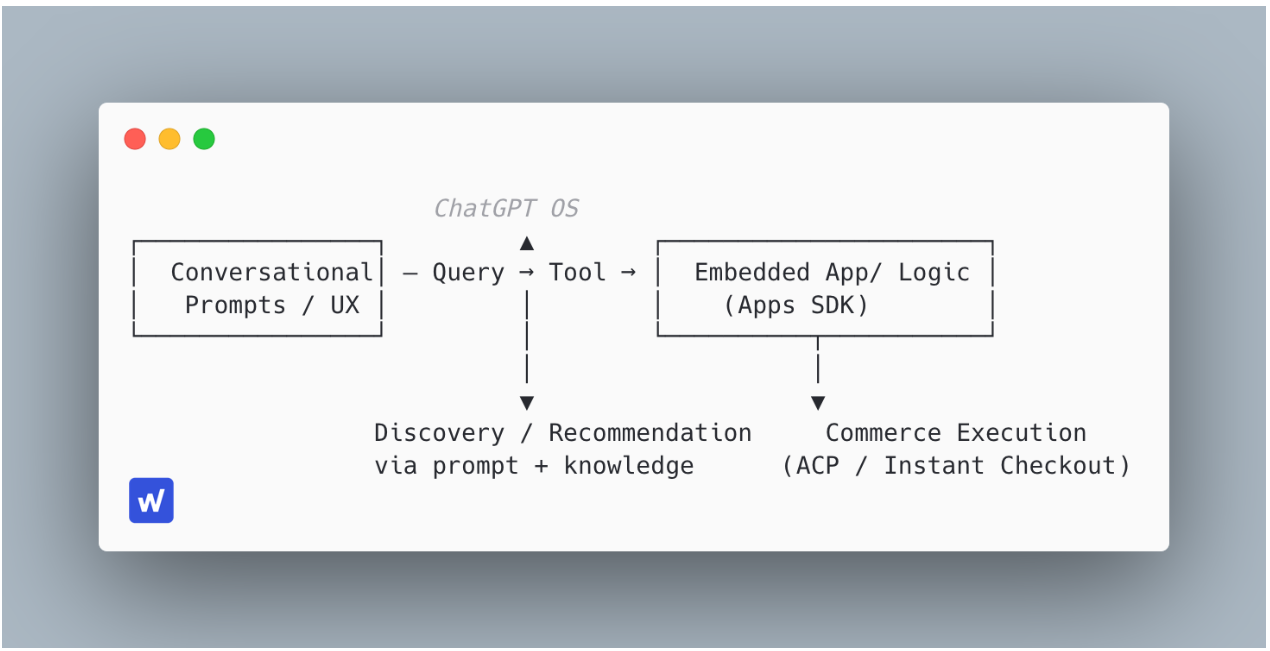

Following OpenAI’s announcements on October 6th, brands now have real ways to operate inside ChatGPT. At a very high level, this is the map for anyone considering entering (or expanding within) the ChatGPT ecosystem:

The ChatGPT Ecosystem

- Conversational Prompts / UX: optimize how ChatGPT “asks” for or surfaces brand services. This is the realm of AEO / GEO. ChatGPT acts as a new surface for brand discovery and recommendation. Relevance depends on structured data, clarity of value, and conversational usability. Think: Brands can promote conversational intents.

- Embedded App / Logic (Apps SDK): integrate your service so ChatGPT can call your backend/actions directly inside the chat. This lets users interact with integrated apps like Zillow, Canva, or Booking without leaving ChatGPT. Think: Brands can expose functionality, not just information.

- Commerce Execution (ACP / Instant Checkout): let users complete transactions via ChatGPT without redirecting to external sites. ACP (the Agentic Commerce Protocol) is an open standard, built with Stripe, that allows ChatGPT agents to complete purchases directly in-chat. Think: the AI can now “buy” from your brand, securely and natively. No redirect to a website needed.

The Agentic Web is emerging, and it’s about building for interactions inside the AI, not only about mentions and intent discovery. Win discoverability across all three layers and you win SEO 3.0.

The emerging semantic layer: not just new endpoints for brands

Lily Ray’s post called out how OpenAI’s new specs finally create an AEO surface distinct from SEO. I agree, but what’s even more interesting is what’s underneath.

With the Apps SDK (built on top of MCP, the Model Context Protocol) and the Agentic Commerce Protocol, we’re watching a semantic layer take shape: a structured, interoperable way for data, intent, and brand logic to be read and acted on by AI systems.

It’s no longer simply about visibility or tracking; it’s about legibility. If your brand’s data, offers, and logic aren’t defined semantically, the AI can’t “use” you, no matter how good your marketing is.

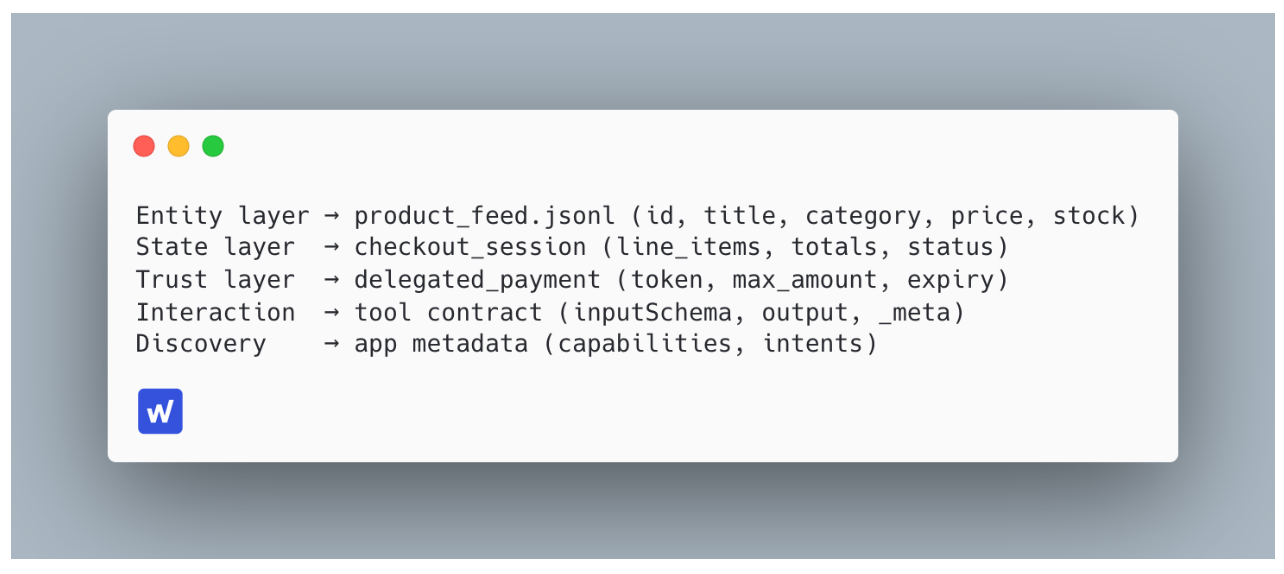

Under the surface, these specs define a shared taxonomy for how AI agents interpret the world. Let’s review it:

ChatGPT emerging semantic taxonomy

Together, that’s the scaffolding of an AI-readable economy: a system where brands aren’t indexed, they’re invoked.

Most brands are still optimizing surface content, or still figuring out whether they need Profound, OtterlyAI, or Scrunch. The real bottleneck is deeper: your data shape, meaning how legibly your catalog, logic, and fulfillment map into these schemas.

Until that’s solved, no amount of prompt engineering makes you visible to AI agents.

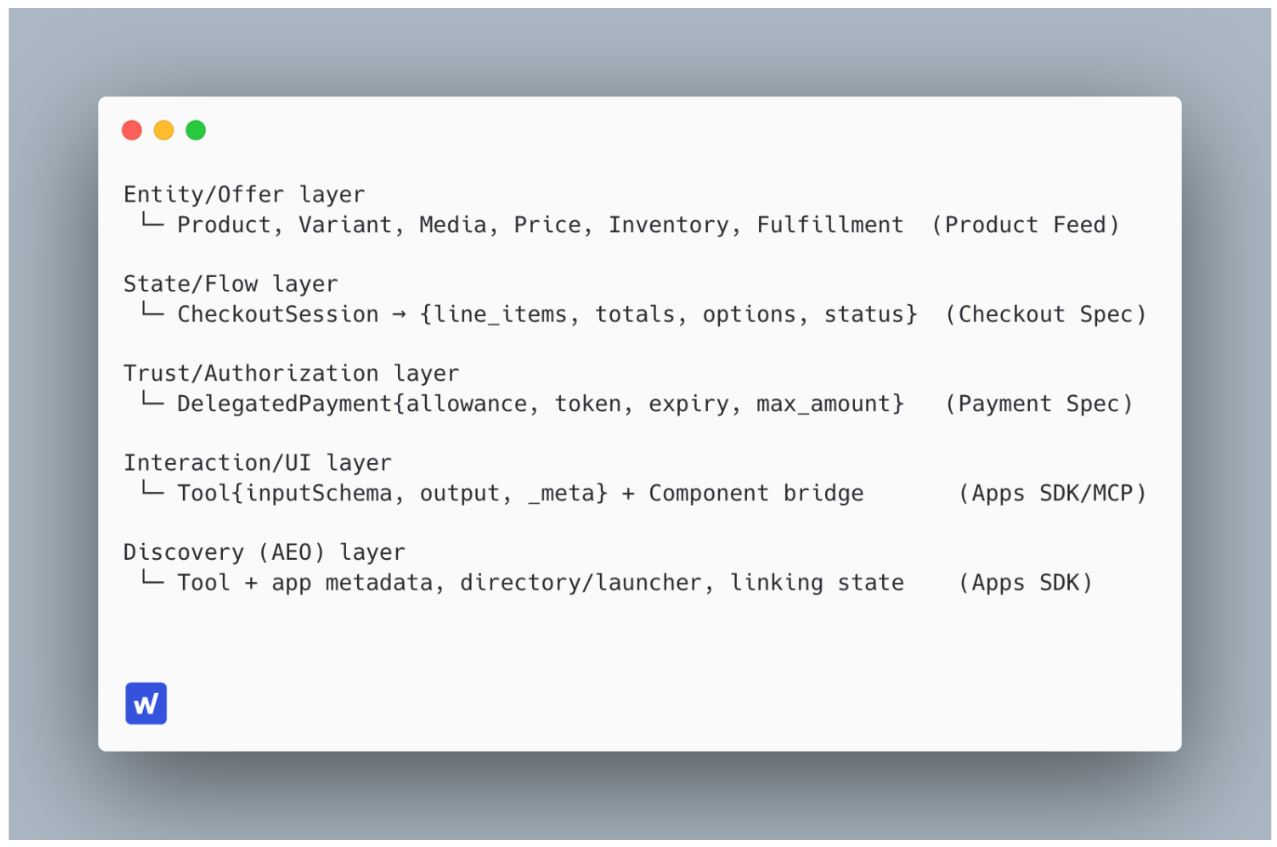

ChatGPT Semantic Layer / Taxonomy

What the specs actually encode

1) Product/Offer ontology (discovery layer).

OpenAI’s Product Feed Spec standardizes what a “thing” is: IDs, GTIN/MPN, titles/descriptions, category path, brand, media, price, taxes, inventory, variants (grouping + attributes), fulfillment, and merchant metadata, including flags that control whether items are searchable vs. purchasable in ChatGPT. That’s an explicit taxonomy for AI-legible discovery. Learn more about the ChatGPT shopping specification.
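To make “data shape” concrete, here is a minimal Python sketch of a feed item plus a legibility check. Every field name (`item_id`, `group_id`, `searchable`, `purchasable`, and so on) and every rule in `legibility_problems` is an illustrative assumption, not the spec’s actual keys or validation logic; check the published spec before mapping a real catalog.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FeedItem:
    # Field names are hypothetical stand-ins for the categories listed above.
    item_id: str
    title: str
    brand: str
    price: str                       # e.g. "79.99 USD"
    category_path: str               # e.g. "Apparel > Shoes > Running"
    gtin: Optional[str] = None
    mpn: Optional[str] = None
    group_id: Optional[str] = None   # ties variants of one product together
    attributes: dict = field(default_factory=dict)  # e.g. {"size": "42"}
    inventory: int = 0
    searchable: bool = True          # may the item surface in discovery?
    purchasable: bool = False        # may the agent check it out in-chat?


def legibility_problems(item: FeedItem) -> list:
    """Return human-readable problems; an empty list means the item looks usable.

    These rules are assumptions chosen for illustration only.
    """
    problems = []
    if not (item.gtin or item.mpn):
        problems.append("no GTIN or MPN to match against a known product")
    if item.purchasable and not item.searchable:
        problems.append("purchasable but not searchable")
    if item.purchasable and item.inventory <= 0:
        problems.append("purchasable with no inventory")
    if item.group_id and not item.attributes:
        problems.append("variant has a group but no distinguishing attributes")
    return problems
```

Running a check like this over the whole catalog before publishing a feed is one cheap way to find out how far your data is from being agent-readable.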

2) Checkout state machine (transaction layer).

The Agentic Checkout Spec requires merchants to expose canonical endpoints (create/update/complete session) and to return a full, authoritative cart state on every response: line items, totals, messages, fulfillment options, status enums, currency, links, etc. It also defines headers (idempotency, signatures, API versions) and order webhooks – i.e., shared state and transitions the agent can reason over.
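The pattern described here (create/update/complete, full state on every response, idempotency) can be sketched in a few lines of Python. This is an in-memory toy, not the spec’s API: the class names, the status strings, the `total_cents` field, and the rule that an address makes a session ready are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(str, Enum):
    # Status names are illustrative; consult the spec for the real enum.
    NOT_READY = "not_ready_for_payment"
    READY = "ready_for_payment"
    COMPLETED = "completed"


@dataclass
class Session:
    session_id: str
    currency: str
    line_items: list = field(default_factory=list)  # (sku, unit_price_cents, qty)
    address: str = ""
    status: Status = Status.NOT_READY

    def snapshot(self) -> dict:
        """Full, authoritative cart state, returned on every response."""
        total = sum(price * qty for _, price, qty in self.line_items)
        return {
            "id": self.session_id,
            "status": self.status.value,
            "currency": self.currency,
            "line_items": list(self.line_items),
            "total_cents": total,
        }


class Merchant:
    def __init__(self):
        self.sessions = {}
        self.replies = {}  # idempotency key -> response already sent

    def _once(self, key, action):
        # A retried request with the same key gets the stored response back.
        if key not in self.replies:
            self.replies[key] = action()
        return self.replies[key]

    def create(self, key, session_id, currency, line_items):
        def action():
            session = Session(session_id, currency, list(line_items))
            self.sessions[session_id] = session
            return session.snapshot()
        return self._once(key, action)

    def update(self, key, session_id, address):
        def action():
            session = self.sessions[session_id]
            session.address = address
            if session.line_items and session.address:
                session.status = Status.READY
            return session.snapshot()
        return self._once(key, action)

    def complete(self, key, session_id):
        def action():
            session = self.sessions[session_id]
            if session.status is not Status.READY:
                raise ValueError(f"cannot complete from {session.status.value}")
            session.status = Status.COMPLETED
            return session.snapshot()
        return self._once(key, action)
```

Because every call returns the whole snapshot, the agent never has to reconstruct cart state from deltas; it only reads the latest response and its status.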

3) Payment delegation (trust/authorization layer).

The Delegated Payment Spec defines a one-time, constrained token (max amount + expiry) issued by the PSP and tied to a checkout_session_id, plus structured error codes and risk signals. This is a portable authorization object the agent can pass without holding card data.
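A rough Python sketch of what “one-time, constrained, tied to a session” means in practice follows. The `DelegatedToken` class, the `authorize` function, and the error code strings are hypothetical; the real token is issued and enforced by the PSP, and the spec defines its own error codes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class DelegatedToken:
    token_id: str
    checkout_session_id: str   # the only session this token may pay for
    max_amount_cents: int      # spending ceiling
    currency: str
    expires_at: datetime
    used: bool = False         # one-time: flips to True on first success


def authorize(token, checkout_session_id, amount_cents, currency, now):
    """Return (ok, error_code). Error codes here are made up for illustration."""
    if token.used:
        return False, "token_already_used"
    if now >= token.expires_at:
        return False, "token_expired"
    if token.checkout_session_id != checkout_session_id:
        return False, "session_mismatch"
    if currency != token.currency or amount_cents > token.max_amount_cents:
        return False, "amount_not_allowed"
    token.used = True
    return True, None


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    token = DelegatedToken("vt_demo", "cs_demo", 10_000, "usd",
                           now + timedelta(minutes=15))
    print(authorize(token, "cs_demo", 7_999, "usd", now))
    print(authorize(token, "cs_demo", 7_999, "usd", now))  # second use is refused
```

The point of the design is that the agent carries only this bounded object: even if it leaks, it cannot be replayed, reused on another cart, or charged above the ceiling.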

4) Tool contracts + UI semantics (interaction layer).

In the Apps SDK (on MCP), tools must declare an inputSchema/output and required _meta fields (e.g., “openai/outputTemplate”), and tool results are split into structuredContent (model + component), content (narration), and _meta (component-only). This clearly separates reasoning data from rendering data.
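The split is easier to see with plain data. The sketch below uses ordinary Python dicts rather than any SDK, so nothing here is the Apps SDK’s API: the tool name, the template URI, the listings data, the `mapZoom` key, and the helper functions are all hypothetical. Only the key names inputSchema, structuredContent, content, _meta, and “openai/outputTemplate” come from the description above.

```python
# A tool descriptor as plain data. The name and template URI are made up.
TOOL = {
    "name": "search_listings",
    "description": "Find homes for sale in a city under a price cap.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "max_price": {"type": "integer"},
        },
        "required": ["city"],
    },
    "_meta": {"openai/outputTemplate": "ui://widget/listings.html"},
}


def missing_args(tool, args):
    """List required inputs the caller did not supply."""
    return [k for k in tool["inputSchema"].get("required", []) if k not in args]


def search_listings(args):
    """Hypothetical handler with hard-coded data."""
    homes = [
        {"id": "h1", "city": "Austin", "price": 420000},
        {"id": "h2", "city": "Austin", "price": 610000},
    ]
    cap = args.get("max_price", float("inf"))
    hits = [h for h in homes if h["city"] == args["city"] and h["price"] <= cap]
    return {
        "structuredContent": {"listings": hits},           # model + component
        "content": [{"type": "text",                       # narration
                     "text": f"Found {len(hits)} listing(s) in {args['city']}."}],
        "_meta": {"mapZoom": 11},                          # component-only
    }


def model_view(result):
    """What the model reasons over: structured data plus narration."""
    return {"structuredContent": result["structuredContent"],
            "content": result["content"]}


def component_view(result):
    """What the UI component renders from: structured data plus _meta."""
    return {"structuredContent": result["structuredContent"],
            "_meta": result["_meta"]}
```

Anything the model should reason about belongs in structuredContent or content; anything that only affects rendering belongs in _meta, where it costs no model attention.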

5) Discovery/selection semantics (AEO surface).

Here is where things get interesting: apps are picked by the model using tool metadata, prior usage, context, and linking state; directory/launcher surfaces also rely on app-level metadata. This is the policy surface where “AEO” lives (how you get selected), and it is the core of SEO 3.0.

Conclusion

The specs are public. The standards are open. What’s missing is your data mapped to them.

Before investing in prompts, agents, LLM trackers, or search tools, fix the foundation: product feed, state logic, and tool contracts.

→ Build for the semantic layer: that’s where the next wave of AI discoverability begins.

That’s how brands become part of the AI’s reasoning loop, not just its search results.